Researchers from Google AI have found a way to systematically scale deep neural networks and improve accuracy as well as efficiency.

Deep neural networks have shown unprecedented performance on a broad range of problems across many fields. Prior work has shown that accuracy tends to grow with both the amount of training data and the depth of the network.

Adding layers has generally improved accuracy, and researchers have been building larger and larger models in recent years.

In their paper “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”, researchers from Google argue that deep neural networks should not be scaled arbitrarily, and they propose a method for structured scaling.

They propose an approach in which the different dimensions of a model (network depth, network width, and input resolution) are each scaled by their own coefficient.

To obtain those scaling coefficients, they run a grid search that captures how the scaling dimensions relate to one another and how they affect overall performance. They then apply the coefficients to a baseline network to achieve the best performance under a given computational constraint.
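The two steps can be illustrated with a minimal Python sketch. It assumes the formulation from the paper, in which depth, width, and resolution are multiplied by `alpha**phi`, `beta**phi`, and `gamma**phi`, with the grid search restricted to candidates where `alpha * beta**2 * gamma**2` is roughly 2, so that compute grows by about `2**phi`. The function names, grid step, tolerance, and baseline numbers are hypothetical and chosen only for illustration. The expensive part of the real search, training and evaluating a model for each candidate, is left out.

```python
import itertools


def feasible_coefficients(step=0.05, upper=2.0, target=2.0, tol=0.1):
    """List (alpha, beta, gamma) grid points with alpha * beta^2 * gamma^2 close to target.

    In a real search, each candidate would be used to train a scaled model
    and the most accurate one would be kept; that step is omitted here.
    """
    n = int(round((upper - 1.0) / step)) + 1
    grid = [round(1.0 + i * step, 10) for i in range(n)]
    return [
        (a, b, g)
        for a, b, g in itertools.product(grid, repeat=3)
        if abs(a * b**2 * g**2 - target) <= tol
    ]


def scale_baseline(base_depth, base_width, base_resolution, alpha, beta, gamma, phi):
    """Apply the coefficients to a baseline for a given compound coefficient phi."""
    depth = round(base_depth * alpha**phi)            # number of layers
    width = round(base_width * beta**phi)             # number of channels
    resolution = round(base_resolution * gamma**phi)  # input image size
    # Approximate growth in compute relative to the baseline.
    flops_factor = (alpha * beta**2 * gamma**2) ** phi
    return depth, width, resolution, flops_factor


if __name__ == "__main__":
    candidates = feasible_coefficients()
    print(len(candidates), "feasible candidates on the grid")

    # Coefficients reported in the paper for the baseline model;
    # the baseline dimensions (10 layers, 32 channels, 224 px) are made up.
    for phi in range(4):
        print(phi, scale_baseline(10, 32, 224, 1.2, 1.1, 1.15, phi))
```

Raising `phi` by one step roughly doubles the compute budget while keeping the three dimensions in the same proportion, which is what separates this scheme from scaling a single dimension on its own.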

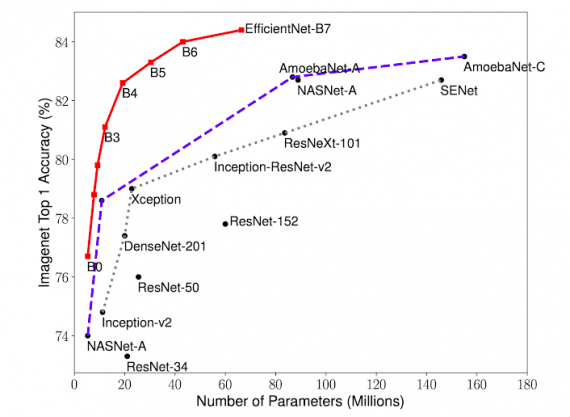

Using this approach, the researchers developed a family of models, called EfficientNets, which surpass state-of-the-art accuracy with up to 10x better efficiency.

More about the proposed scaling method and the EfficientNet family of models can be found in the official blog post. The paper was accepted as a conference paper at ICML 2019 and is available on arXiv.