Researchers from Facebook AI Research have open-sourced CCMatrix, a billion-scale parallel text dataset for training translation models.

In their blog post, the researchers describe the dataset, how it was collected, and how it can be used to foster research in neural machine translation. According to the authors, it is the largest high-quality dataset for training translation systems, containing more than 4.5 billion parallel sentences in 576 language pairs. To collect a dataset of this size, researchers and engineers from Facebook AI Research used massively parallel processing to mine Common Crawl, a large web archive consisting of petabytes of crawled web data. They designed a parallelization scheme that efficiently compares the distances between all sentence embeddings in one batch, which allows sentence pairs in different languages to be extracted.
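To make the idea of comparing all sentence embeddings in one batch more concrete, here is a minimal sketch in Python with NumPy. It is not the CCMatrix pipeline: the function name `mine_pairs`, the `threshold` value, and the random toy embeddings are assumptions made for illustration, and plain cosine similarity with a mutual-nearest-neighbour check stands in for whatever scoring the authors actually use at scale.

```python
import numpy as np


def mine_pairs(src_emb, tgt_emb, threshold=0.8):
    """Return (src_index, tgt_index, score) for mutual nearest neighbours.

    Hypothetical helper for illustration only; `threshold` is an assumed value.
    """
    # L2-normalise rows so that a dot product equals cosine similarity.
    src = src_emb / np.linalg.norm(src_emb, axis=1, keepdims=True)
    tgt = tgt_emb / np.linalg.norm(tgt_emb, axis=1, keepdims=True)

    # One matrix product scores every source sentence against every target.
    sim = src @ tgt.T

    best_tgt = sim.argmax(axis=1)  # best target for each source sentence
    best_src = sim.argmax(axis=0)  # best source for each target sentence

    pairs = []
    for i, j in enumerate(best_tgt):
        # Keep a pair only if both sides pick each other and the score is high.
        if best_src[j] == i and sim[i, j] >= threshold:
            pairs.append((i, int(j), float(sim[i, j])))
    return pairs


# Toy data: "target" embeddings are noisy, shuffled copies of the "source" ones,
# standing in for translations embedded in a shared multilingual space.
rng = np.random.default_rng(0)
src_emb = rng.normal(size=(4, 16))
perm = np.array([2, 0, 3, 1])  # target k is a translation of source perm[k]
tgt_emb = src_emb[perm] + 0.01 * rng.normal(size=(4, 16))

pairs = mine_pairs(src_emb, tgt_emb)
for s, t, score in pairs:
    print(f"source {s} <-> target {t} (cosine similarity {score:.3f})")
```

Because every pair is scored in a single matrix product, the work splits naturally into independent batches, which is what makes this kind of comparison amenable to parallel processing. At web scale an exhaustive similarity matrix would not fit in memory, so a real system would need an approximate nearest-neighbour index instead.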

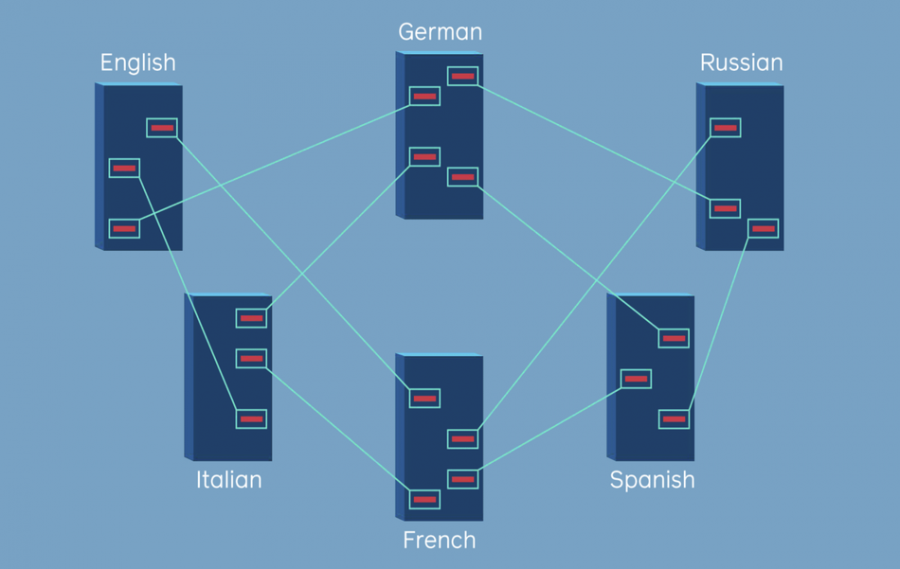

To demonstrate the value of a dataset at this scale, the researchers trained neural machine translation (NMT) models on the newly collected CCMatrix data. The resulting models outperformed state-of-the-art NMT systems in four language directions.

The new dataset is expected to help researchers develop better NMT models, especially for languages or language pairs for which only limited corpora are available.

Instructions on how to download data from CCMatrix are available in the GitHub repository. More details on the data collection method can be found in the blog post.