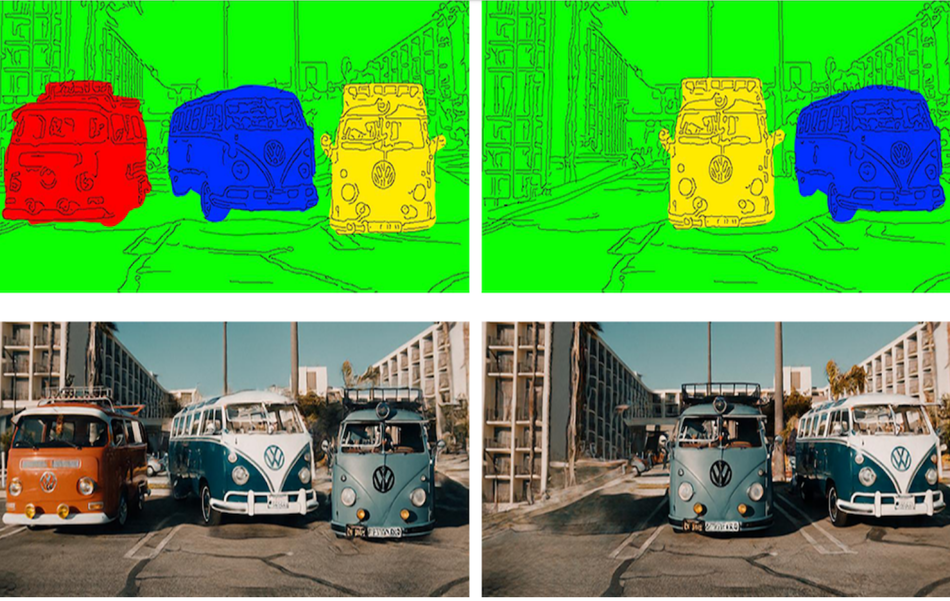

A group of researchers from the Hebrew University of Jerusalem has developed a method that can successfully manipulate an input image based on the manipulated segmentation mask.

The method, named DeepSIM (for Deep Single Image Manipulation) leverages the power of conditional GAN networks to learn an image manipulation technique. Researchers first observed that the key to training single image manipulation networks is extensive augmentation of the input image and they developed a new image augmentation method necessary in order to train their proposed DeepSIM model.

The augmentation method that was proposed, is based on the so-called TPS (thin-plate-spline) warping and it simply divides an input image into a grid, whose cells are later translated (or shifted) by some random distance, resulting in a smooth warp. In the architecture of the new method, researchers decided to define and use a novel “image primitive” that combines both primitives: edge maps and segmentation masks into a different primitive. Using the pix2pix architecture, and the abovementioned changes, a new conditional GAN model was trained to learn the task of image manipulation.

The results on several datasets show that the generator network has successfully learned how to perform image manipulation, given a modified image primitive.

More details about the DeepSIM model can be read in the paper. The implementation of the method was open-sourced and it is available on Github.