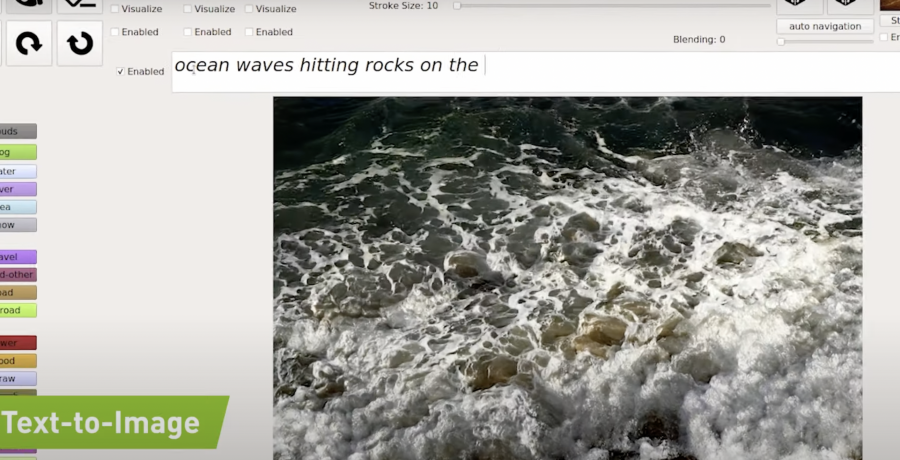

NVIDIA's GauGAN2 neural network, trained on 10 million nature photos, generates realistic images from a short text description. You can then add new objects to the image by sketching them by hand.

GauGAN2 combines segmentation mapping, drawing, and text-to-image generation in a single model. This makes it a powerful tool for creating photorealistic art from a mix of words and drawings.

To achieve this, the generative adversarial network (GAN) was trained on the NVIDIA Selene supercomputer. The researchers used a neural network that learns the relationship between words and the visual features they correspond to, such as "winter", "foggy", or "rainbow".

After the image is generated, you can create a semantic segmentation map that shows where objects are located in the scene. The scene can then be completed with simple sketches of elements such as the sky, a tree, a rock, or a river.