In a new paper, researchers from Seoul National University in Korea propose an accurate method for 3D human pose and mesh estimation from a single image.

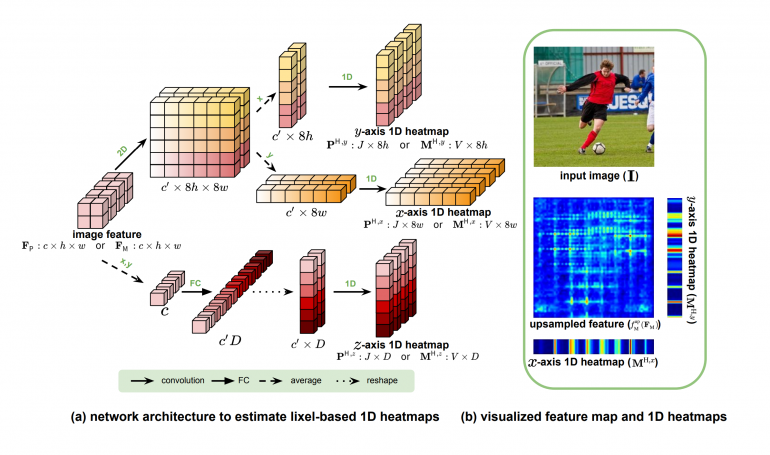

Most human pose and mesh estimation methods learn a direct mapping from the input pixels to the parameters of a human mesh model. In contrast, the new method first predicts lixel-based 1D heatmaps and then derives the final pose and mesh from them. “Lixel” is short for “line + pixel”, and the idea behind lixels is to preserve the spatial relationships in the input image. According to the researchers, the resulting high-resolution 1D heatmaps allow precise localization of dense mesh vertices.
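To make the idea of reading a position from a 1D heatmap more concrete, here is a minimal sketch in Python. It assumes one 1D heatmap per axis (x, y, z) and decodes each with a soft-argmax, a common way to turn a heatmap into a continuous coordinate. The function names `soft_argmax_1d` and `decode_xyz` are hypothetical, and the snippet is an illustration rather than the authors' implementation.

```python
import numpy as np

def soft_argmax_1d(scores):
    """Return the expected index under a softmax over a 1D array of scores."""
    scores = np.asarray(scores, dtype=np.float64)
    # Subtract the maximum before exponentiating for numerical stability.
    probs = np.exp(scores - scores.max())
    probs /= probs.sum()
    # Weighted average of the index positions gives a sub-pixel coordinate.
    return float(np.sum(probs * np.arange(scores.shape[0])))

def decode_xyz(heat_x, heat_y, heat_z):
    """Decode one point from three per-axis 1D heatmaps (assumed layout)."""
    return tuple(soft_argmax_1d(h) for h in (heat_x, heat_y, heat_z))

# Toy heatmaps with a single sharp peak on each axis.
x, y, z = decode_xyz([0, 10, 0], [0, 0, 10, 0, 0], [0, 0, 0, 10, 0, 0, 0])
```

Because each axis gets its own 1D heatmap, the memory cost grows linearly with resolution instead of cubically as it would for a full 3D volume, which is one plausible reason high-resolution heatmaps become practical.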

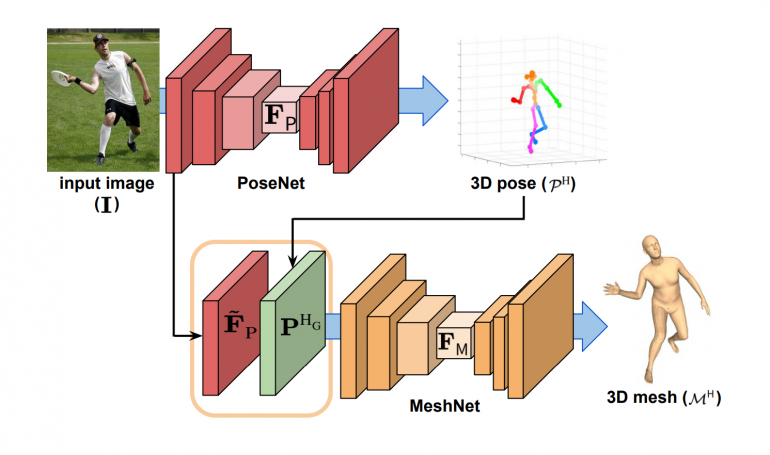

The researchers propose a pipeline for both 3D human pose and mesh estimation that consists of two deep neural networks: PoseNet, which estimates the human pose, and MeshNet, which regresses the 3D human mesh. The diagram below shows the network architecture used to estimate the lixel-based 1D heatmaps.

Given the input image, PoseNet estimates three lixel-based 1D heatmaps for all human joints. MeshNet takes the image feature from PoseNet together with a 3D Gaussian heatmap and produces the final 3D human mesh.
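As an illustration of what a 3D Gaussian heatmap can look like, the sketch below renders one Gaussian blob per joint into a small voxel grid. It assumes the heatmap is built from 3D joint coordinates given in voxel units; the function name `gaussian_heatmap_3d`, the grid size, and `sigma` are hypothetical choices for this example and are not taken from the paper.

```python
import numpy as np

def gaussian_heatmap_3d(joints_xyz, size=8, sigma=1.0):
    """Render one 3D Gaussian per joint.

    joints_xyz: array-like of shape (J, 3) with (x, y, z) in voxel units.
    Returns an array of shape (J, size, size, size), indexed as [joint, z, y, x].
    """
    joints = np.asarray(joints_xyz, dtype=np.float64)
    grid = np.arange(size, dtype=np.float64)
    # With indexing="ij" the three outputs vary along axes 0, 1 and 2 in turn.
    zz, yy, xx = np.meshgrid(grid, grid, grid, indexing="ij")
    # Reshape the joint coordinates so they broadcast against the voxel grid.
    x = joints[:, 0][:, None, None, None]
    y = joints[:, 1][:, None, None, None]
    z = joints[:, 2][:, None, None, None]
    dist_sq = (xx - x) ** 2 + (yy - y) ** 2 + (zz - z) ** 2
    return np.exp(-dist_sq / (2.0 * sigma ** 2))

# One joint at x=2, y=3, z=4 in an 8x8x8 grid.
heat = gaussian_heatmap_3d([[2, 3, 4]], size=8, sigma=1.0)
peak = np.unravel_index(np.argmax(heat), heat.shape)
```

In this sketch the peak of each volume sits at the joint location, so a network that receives the heatmap alongside image features gets an explicit spatial hint about where each joint is.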

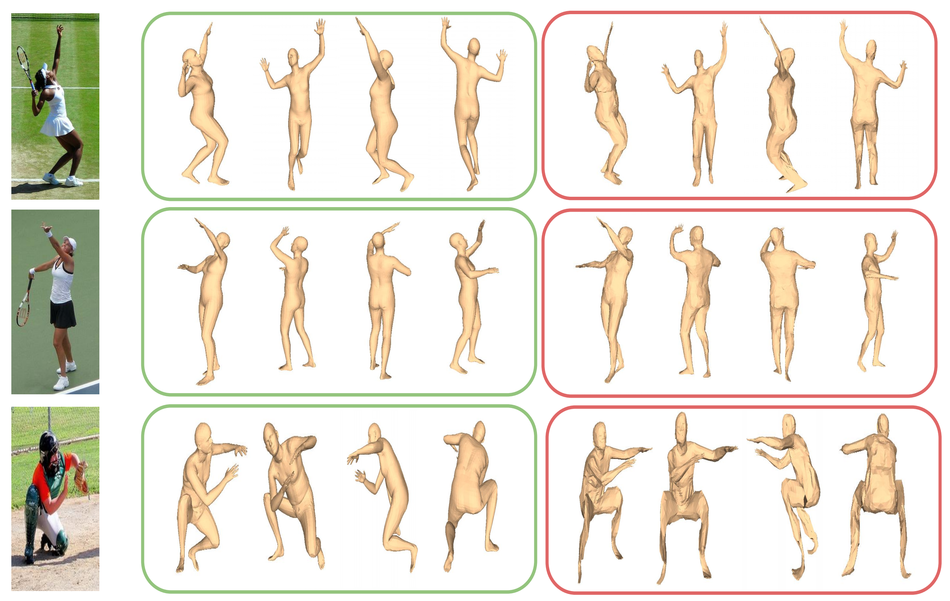

The researchers conducted experiments on several benchmark datasets: Human3.6M, 3DPW, FreiHAND, MSCOCO, and MuCo-3DHP. The reported results show that the method achieves state-of-the-art performance.

More details about the method are available in the paper published on arXiv. The implementation has been open-sourced and is available on GitHub.