LambdaNetworks – researchers propose a new type of deep neural network that is computationally efficient while performing on par with existing classification models.

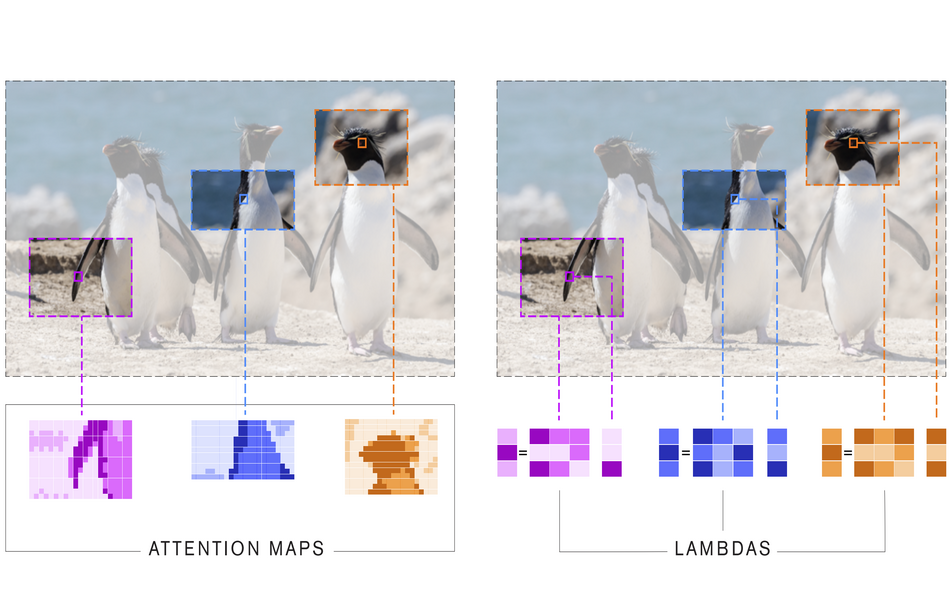

Built on a simple but interesting idea, Lambda Networks capture long-range interactions in structured data, such as the pixels of an image, in a computationally efficient manner. Attention-based networks compute a weight for every pair of query and context element. Lambda Networks instead model the interactions between each element and its context with linear functions, called lambdas, hence the name.
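To make the contrast concrete, here is a minimal NumPy sketch under simplifying assumptions: a single head, content-only interactions, unscaled dot-product attention, and random stand-in queries, keys and values (the sizes are hypothetical). Attention materializes an n×m weight map, one row per query, while the content lambda compresses the whole context into one k×v matrix that is shared by every query.

```python
import numpy as np

def softmax(x, axis):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(queries, keys, values):
    # queries: (n, k), keys: (m, k), values: (m, v)
    # One weight per (query, context element) pair: an (n, m) map.
    weights = softmax(queries @ keys.T, axis=-1)
    return weights @ values, weights.shape

def content_lambda(queries, keys, values):
    # Normalize keys over the m context positions, then summarize the
    # whole context as a single (k, v) linear function.
    lam = softmax(keys, axis=0).T @ values
    # Apply the same linear function to every query: (n, k) @ (k, v).
    return queries @ lam, lam.shape

rng = np.random.default_rng(0)
n, m, k, v = 1024, 1024, 16, 64  # hypothetical sizes
q = rng.standard_normal((n, k))
ks = rng.standard_normal((m, k))
vs = rng.standard_normal((m, v))

out_att, att_shape = attention(q, ks, vs)
out_lam, lam_shape = content_lambda(q, ks, vs)
print(out_att.shape, att_shape)  # (1024, 64) (1024, 1024)
print(out_lam.shape, lam_shape)  # (1024, 64) (16, 64)
```

Both produce one v-dimensional output per query, but the intermediate object differs: the attention map grows with the number of queries times the context size, while the lambda's size depends only on k and v.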

The idea is to transform the available context into a linear function and apply it to each input element, represented by a so-called “query”. In the framework of neural networks, these linear functions are modeled as lambda layers. A lambda layer takes the input and the context, uses the context to generate a linear function (the lambda), and applies that function to every query derived from the input. Because the lambda layer never builds attention maps relating each query to every context element, the researchers report that it is more efficient than attention and self-attention layers in both time and space complexity.
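The steps above can be sketched as a single-head lambda layer in NumPy. This follows the paper's structure as we understand it: queries are projected from the input, keys and values from the context, keys are normalized with a softmax over context positions, and each query receives a content lambda shared by all queries plus its own position lambda. The weight matrices `w_q`, `w_k`, `w_v` and the embeddings `pos_emb` are hypothetical random placeholders, and the paper's normalization layers, multi-query heads and more efficient position-lambda computations are omitted.

```python
import numpy as np

def softmax(x, axis):
    # Numerically stable softmax along one axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def lambda_layer(x, context, w_q, w_k, w_v, pos_emb):
    """Single-head lambda layer (simplified sketch).

    x:        (n, d)     input elements, one query per row
    context:  (m, d)     context elements
    w_q, w_k: (d, k)     query / key projections (hypothetical)
    w_v:      (d, v)     value projection (hypothetical)
    pos_emb:  (n, m, k)  position embeddings per (query, context) pair (hypothetical)
    returns:  (n, v)
    """
    queries = x @ w_q                         # (n, k)
    keys = softmax(context @ w_k, axis=0)     # (m, k), normalized over context positions
    values = context @ w_v                    # (m, v)
    content_lam = keys.T @ values             # (k, v), shared by every query
    # Position lambdas: one (k, v) linear function per query position.
    pos_lam = np.einsum("nmk,mv->nkv", pos_emb, values)
    # Apply each query's lambda to that query: y_n = (content + position lambda)^T q_n.
    return np.einsum("nk,nkv->nv", queries, content_lam[None] + pos_lam)

rng = np.random.default_rng(0)
n, m, d, k, v = 8, 8, 32, 16, 32  # hypothetical sizes; the input is its own context here
x = rng.standard_normal((n, d))
w_q = rng.standard_normal((d, k))
w_k = rng.standard_normal((d, k))
w_v = rng.standard_normal((d, v))
pos_emb = rng.standard_normal((n, m, k))

y = lambda_layer(x, x, w_q, w_k, w_v, pos_emb)
print(y.shape)  # (8, 32)
```

Design note: the content lambda is one k×v matrix regardless of how many queries there are, whereas this naive position-lambda computation materializes one k×v matrix per query.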

To evaluate the newly proposed lambda layers and networks, the researchers applied lambda layers within ResNet architectures on the ImageNet classification task. The results showed that lambda networks reach higher accuracies than their self-attention counterparts while remaining computationally efficient. Beyond image classification, the researchers also experimented with the COCO object detection and instance segmentation benchmarks. They showed that, in terms of speed-accuracy trade-offs, the novel Lambda Network architecture outperforms state-of-the-art networks.

The implementation of Lambda Networks has been open-sourced and is available on GitHub. The paper, submitted to ICLR 2021 under double-blind review, can be found here.
