Recently, a novel and powerful deep variational autoencoder called IntroVAE was proposed. The model learned to generate highly realistic images. Its key feature was that it could evaluate the quality of its own generated images and use those evaluations to improve itself.

In a recent paper, researchers from the Department of Electrical Engineering at Technion examined the IntroVAE model in detail and proposed a few modifications that improve training stability and also enable theoretical analysis of the generative model.

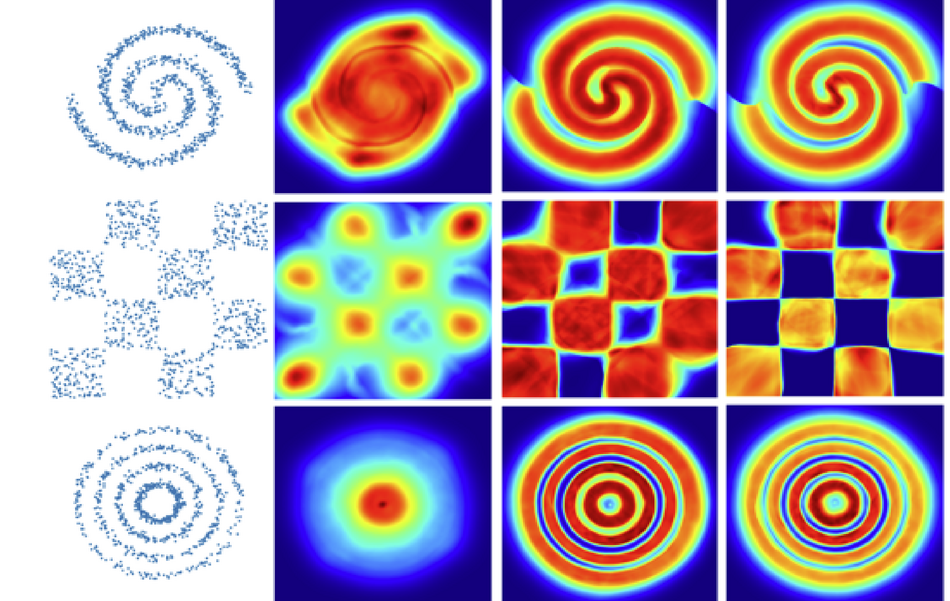

The novelty of the IntroVAE model was that it was trained adversarially, i.e., it optimized an adversarial objective. The model was trained with a specific hinge loss arising from the encoder and decoder adversarial objectives, which resulted in training instability as well as loose convergence guarantees. This inspired the Technion researchers to take a closer look at the training of IntroVAE and to propose a modified loss function, a smooth exponential loss, resulting in a new model that they called Soft-IntroVAE.
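To give a rough feel for the difference between a hinge term and a smooth exponential term, here is a minimal Python sketch. It is not taken from the paper or its PyTorch code: the function names, the `margin` and `alpha` values, and the use of the negative KL divergence as a stand-in for the ELBO of a generated sample are all illustrative assumptions.

```python
import math

def hinge_penalty(kl_fake, margin=3.0):
    """Hinge-style term (assumed form): max(0, margin - KL of a generated sample)."""
    return max(0.0, margin - kl_fake)

def soft_exp_penalty(elbo_fake, alpha=2.0):
    """Smooth exponential term (assumed form): (1 / alpha) * exp(alpha * ELBO)."""
    return math.exp(alpha * elbo_fake) / alpha

def slope(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)

# Simplifying assumption: treat the ELBO of a generated sample as -KL,
# so both terms can be compared along the same axis.
for kl in (1.0, 2.9, 3.1, 5.0):
    hinge_slope = slope(hinge_penalty, kl)
    soft_slope = slope(lambda k: soft_exp_penalty(-k), kl)
    print(f"KL={kl}: hinge slope={hinge_slope:.4f}, soft slope={soft_slope:.6f}")
```

Under these assumptions, the hinge term has a kink at the margin and contributes no gradient once the KL exceeds it, while the exponential term stays differentiable everywhere and its gradient decays gradually instead of switching off.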

Through detailed analysis and experiments, the researchers showed that Soft-IntroVAE trains stably while maintaining good image generation performance. Using several widely known datasets, including CIFAR-10, CelebA-HQ, and FFHQ, they verified the potential of the proposed method on a range of tasks, including image generation, image translation, and out-of-distribution (OOD) detection.

More details about the new model, the experiments, and the theoretical analysis can be found in the paper published on arXiv. The PyTorch implementation of the Soft-IntroVAE model has been open-sourced and is available on GitHub.