We have written before about Google’s Vision Transformer (ViT), which successfully applied the Transformer architecture to a computer vision problem. Originally designed for natural language processing, Transformers are now being applied to image tasks as well, in the hope of matching the performance gains they brought to NLP.

Today, Facebook AI announced a new method for training computer vision models that leverages Transformers. The new technique, called DeiT (Data-efficient Image Transformers), makes it possible to train high-performing computer vision models with far less data.

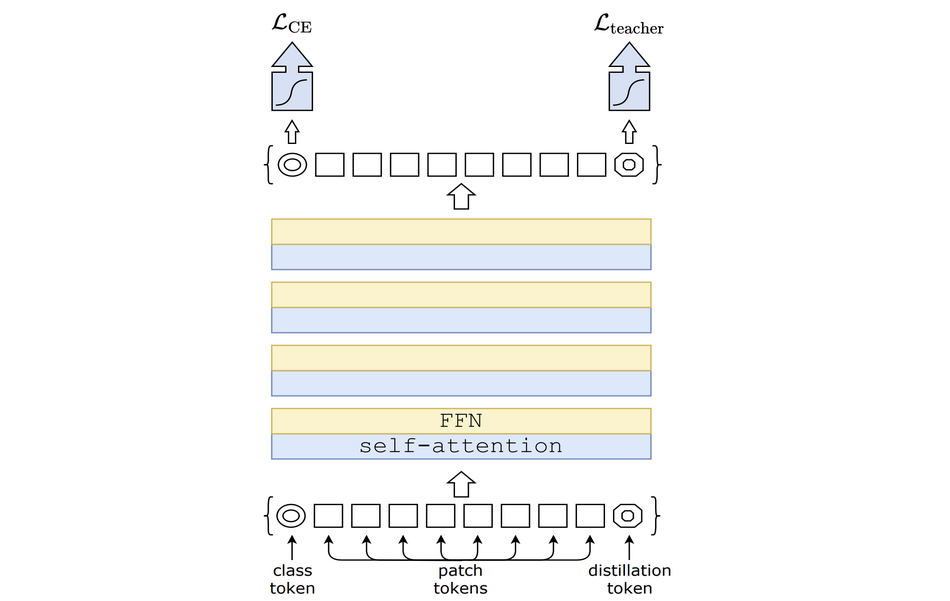

Because Transformers do not use convolutions, they carry no built-in prior about image structure, so they are generally expected to need a substantial amount of data to learn something useful. However, researchers from FAIR and Sorbonne University have found a training strategy that can train an image classifier with fewer images. Using a student-teacher learning framework, together with data augmentation, optimization tricks, and regularization, they succeeded in training a good image classifier with 1.2 million images.
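To make the student-teacher idea more concrete, here is a minimal sketch of a generic hard-label distillation loss in plain Python. It is an illustration under stated assumptions, not the authors' implementation: the function names, the `alpha` weighting, and the choice of hard (argmax) teacher labels are all ours, and the exact formulation used for DeiT is described in the paper.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_index):
    """Negative log-probability the model assigns to the target class."""
    return -math.log(softmax(logits)[target_index])


def hard_distillation_loss(student_logits, teacher_logits, true_label, alpha=0.5):
    """Hypothetical helper: blend two cross-entropy terms for the student.

    One term uses the ground-truth label, the other uses the teacher's
    most confident prediction as if it were a label. `alpha` (an assumed
    hyperparameter) controls how much weight the teacher's label gets.
    """
    # The teacher's "hard" label is simply its highest-scoring class.
    teacher_label = max(range(len(teacher_logits)), key=lambda i: teacher_logits[i])
    loss_true = cross_entropy(student_logits, true_label)
    loss_teacher = cross_entropy(student_logits, teacher_label)
    return (1 - alpha) * loss_true + alpha * loss_teacher


if __name__ == "__main__":
    student = [2.0, 0.5, -1.0]   # made-up student scores for 3 classes
    teacher = [3.0, 0.1, 0.2]    # made-up teacher scores for the same image
    print(hard_distillation_loss(student, teacher, true_label=0))
```

In this sketch the student is pulled toward both the ground-truth label and the teacher's prediction; when the two agree, the loss reduces to ordinary cross-entropy on that label.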

The proposed framework proved effective, and it opens up many possibilities for further exploring Transformers in computer vision. The researchers compared the new model with several others, including EfficientNet and Google’s ViT, and the results showed that it performs comparably or even better.

More details about the new method are available in the paper, and the implementation has been open-sourced.