Researchers from OpenAI have presented a new model that generates realistic images from text descriptions.

The model, called DALL-E, is based on OpenAI's powerful GPT-3 model and can generate novel images that depict the subjects described in the query text in a plausible way. According to the researchers' blog post, the name DALL-E was inspired by Salvador Dalí and Pixar's WALL-E. The model takes a sentence as input and can combine different concepts into a single coherent image.

DALL-E is a transformer language model that receives the text and the image together as a single input: one sequence of tokens. It was trained using maximum likelihood to generate all of these tokens one after another. According to the researchers, the idea for the new model came from the success of GPT-3 as a language model and also from the success of the transformer architecture in image generation, as shown by the ImageGPT model.
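To make the "single sequence of tokens" idea more concrete, here is a minimal Python sketch. It is not OpenAI's implementation: the toy vocabulary sizes, the function names (`to_single_stream`, `negative_log_likelihood`, `generate_image_tokens`) and the uniform stand-in model are all hypothetical. It only illustrates three ideas from the paragraph above: text and image tokens share one sequence, training minimizes the negative log-likelihood of each next token, and generation produces image tokens one after another.

```python
import math
import random
from typing import Callable, List, Sequence

# Hypothetical toy vocabulary sizes, chosen for illustration only.
TEXT_VOCAB = 16   # size of the (toy) text vocabulary
IMAGE_VOCAB = 8   # size of the (toy) image-token vocabulary
VOCAB = TEXT_VOCAB + IMAGE_VOCAB

# A "model" maps a token prefix to a probability distribution over the next token.
Model = Callable[[Sequence[int]], List[float]]


def to_single_stream(text_tokens: Sequence[int], image_tokens: Sequence[int]) -> List[int]:
    """Concatenate text and image tokens into one sequence.

    Image ids are shifted past the text vocabulary so that both kinds of
    token share a single vocabulary without colliding.
    """
    if any(not 0 <= t < TEXT_VOCAB for t in text_tokens):
        raise ValueError("text token out of range")
    if any(not 0 <= t < IMAGE_VOCAB for t in image_tokens):
        raise ValueError("image token out of range")
    return list(text_tokens) + [TEXT_VOCAB + t for t in image_tokens]


def negative_log_likelihood(model: Model, stream: Sequence[int]) -> float:
    """Maximum-likelihood objective: sum of -log p(x_t | x_<t).

    In this toy version the first token has no prefix, so it is not scored.
    """
    nll = 0.0
    for t in range(1, len(stream)):
        probs = model(stream[:t])
        nll -= math.log(probs[stream[t]])
    return nll


def generate_image_tokens(model: Model, text_tokens: Sequence[int],
                          n_image_tokens: int, rng: random.Random) -> List[int]:
    """Sample image tokens one after another, conditioned on the text prefix."""
    stream = list(text_tokens)
    image: List[int] = []
    for _ in range(n_image_tokens):
        probs = model(stream)
        # Simplification: sample only from the image part of the vocabulary.
        weights = probs[TEXT_VOCAB:]
        token = rng.choices(range(IMAGE_VOCAB), weights=weights)[0]
        image.append(token)
        stream.append(TEXT_VOCAB + token)  # feed the new token back in
    return image


def uniform_model(prefix: Sequence[int]) -> List[float]:
    """Toy stand-in for the transformer: every token is equally likely."""
    return [1.0 / VOCAB] * VOCAB


if __name__ == "__main__":
    stream = to_single_stream([3, 7, 1], [0, 5, 2, 2])
    print(stream)  # [3, 7, 1, 16, 21, 18, 18]
    print(negative_log_likelihood(uniform_model, stream))
    print(generate_image_tokens(uniform_model, [3, 7, 1], 4, random.Random(0)))
```

With the uniform stand-in, each of the six scored positions contributes log(24), so the loss is 6 · log(24); a trained transformer would instead assign high probability to plausible continuations. In the real model the stream is much longer: OpenAI's blog post describes a single stream of up to 1,280 tokens covering both the text and the image.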

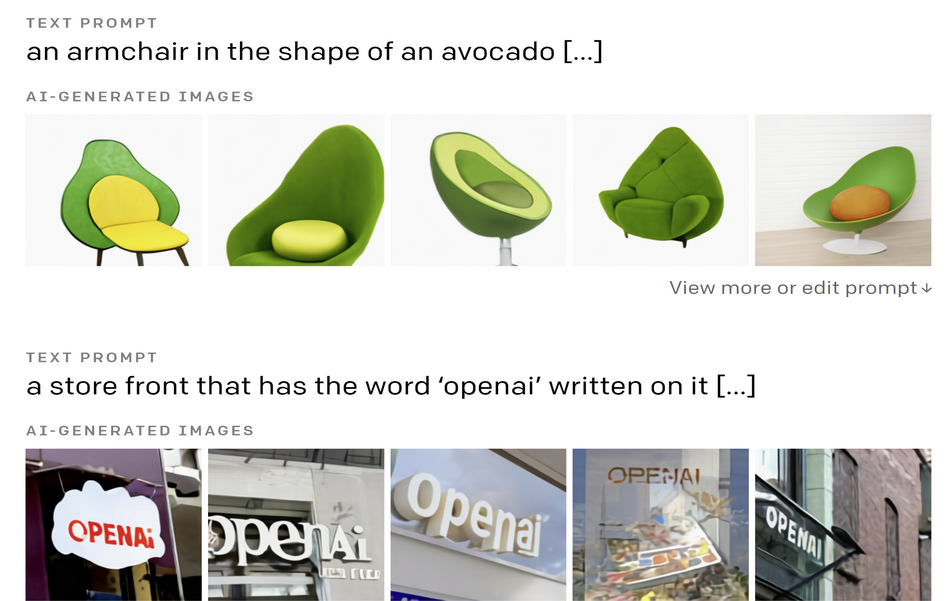

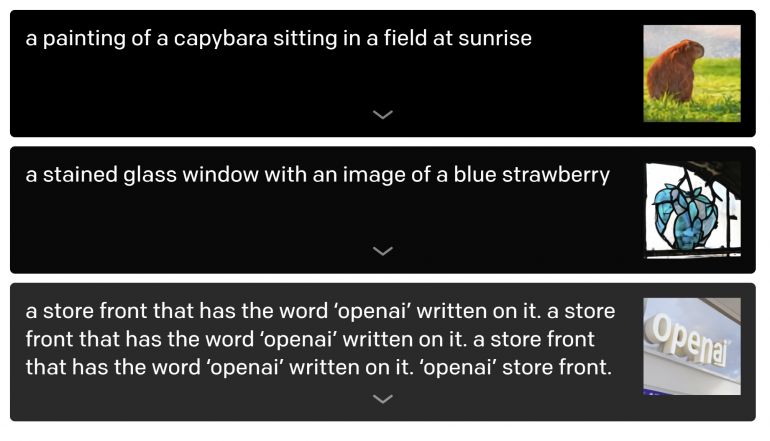

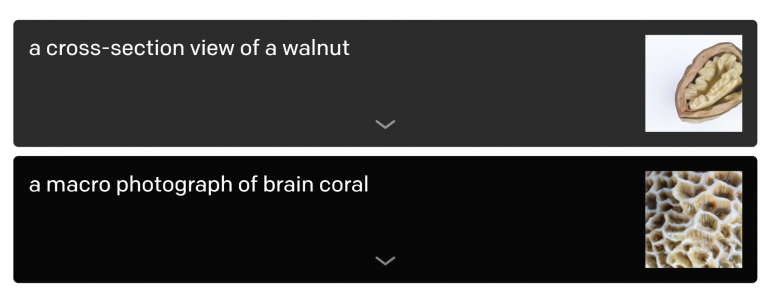

The researchers performed an extensive analysis of the model's ability to manipulate visual concepts through language. They tested it on a wide range of tasks, such as drawing multiple objects, capturing perspective and three-dimensional structure, visualizing internal and external structure, and inferring contextual details. Some example queries and the corresponding model outputs are shown in the images above.

The new model shows that transformers have great potential for problems such as text-to-image synthesis. With its 12 billion parameters, DALL-E learned correlations between language and visual concepts and was able to produce realistic, novel images from the input text.

More details about the model and the experiments are available in the official blog post.