In their new paper, researchers from OpenAI introduce a new metric which evaluates the robustness of neural network classifiers against unforeseen adversarial examples. The new metric, called UAR (Unforeseen Attack Robustness), should assess if a model that is robust against one distortion type is adversarially robust against other distortion types.

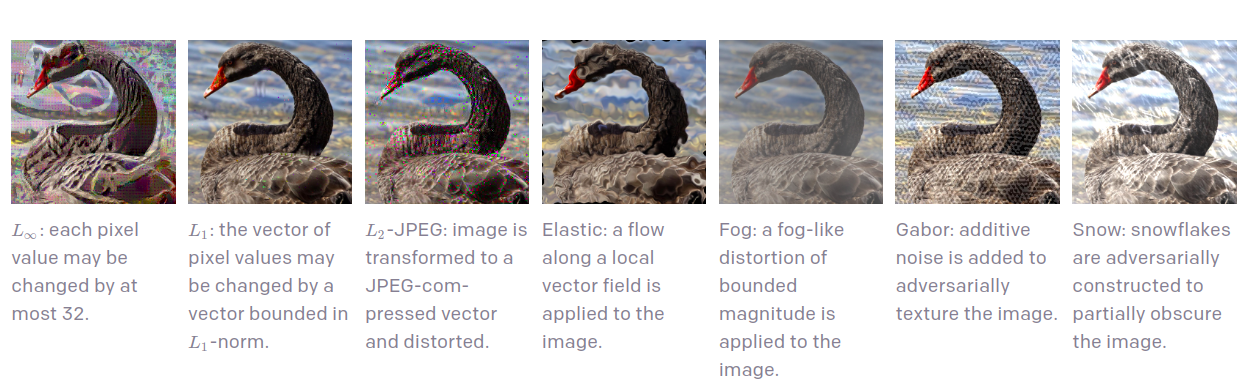

Past research has shown that deep neural network classifiers are vulnerable to carefully crafted adversarial examples, which may be imperceptible to the human eye, but can lead the model to misclassify the output. In recent times, different types of adversaries based on their threat model leverage these vulnerabilities to compromise a classifier network. Defense mechanisms against these attacks differ and therefore the performance of a classifier should be assessed upon different and “unforeseen” adversarial attacks.

For this reason, the team of researchers led by Daniel Kang and Yi Sun, have developed a new method that yields a more descriptive metric to evaluate classifier’s performance against adversarial examples. The summary metric – UAR is defined by the best adversarial accuracy achieved by adversarially trained models against distortions of several known types. This together with the distortion sizes are used to define a formula which gives the UAR score for a given model.

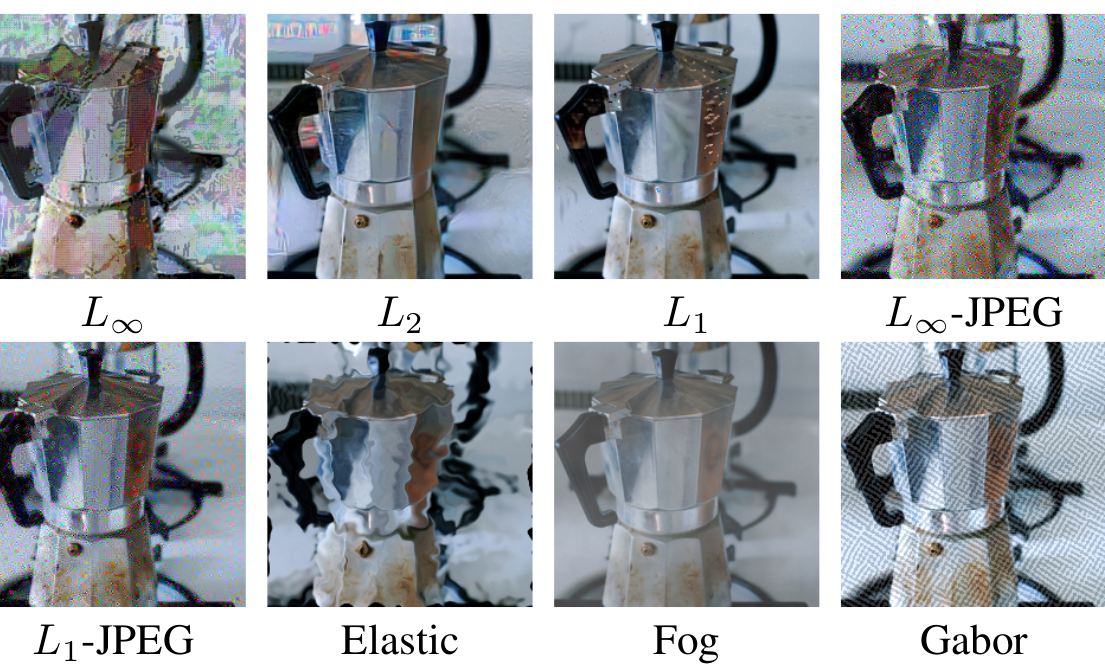

Researchers constructed novel adversarial attacks (using JPEG, Fog, Gabor filters) in order to study the adversarial robustness against unforeseen attacks. Using their methodology, they showed that evaluation against a given attack may not generalize well to other “novel” or previously not seen attacks.

The implementations and the code to evaluate the UAR metric was open-sourced and are available on Github. More in detail about the methodology and the new metric for adversarial robustness can be read in the paper or in the official blog post.