A group of researchers from Tel Aviv University has developed a deep neural network model that reconstructs surface meshes directly from a given input point cloud.

In their recent paper, “Point2Mesh: A Self-Prior for Deformable Meshes”, the researchers describe a novel approach to mesh reconstruction that leverages deep neural networks to produce smooth and locally uniform surface meshes. They note that previous approaches tackled this hard problem either with manually designed priors or, where deep learning was employed, with priors learned in a supervised way through training.

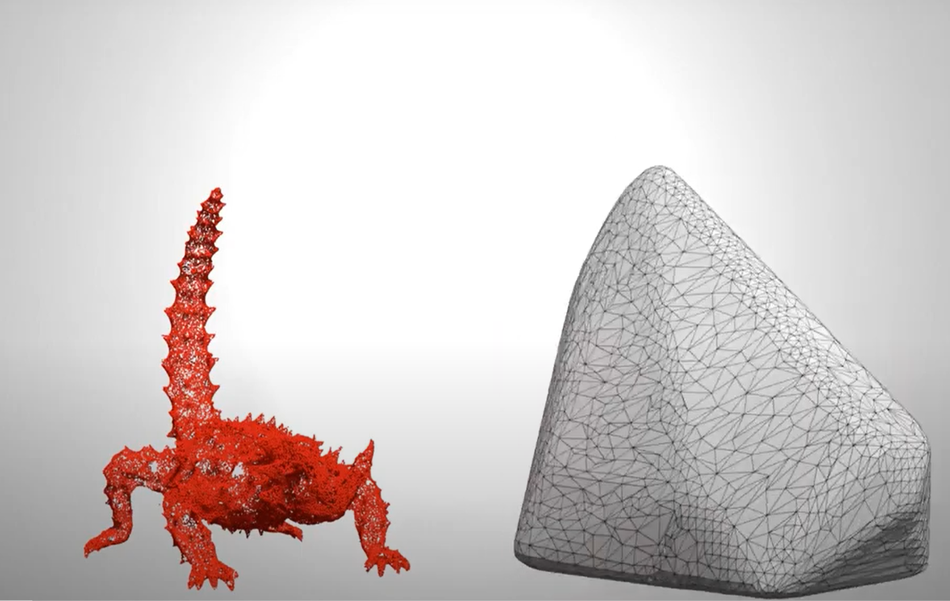

In the proposed framework, the prior, which the authors call a self-prior, is learned directly from a single input point cloud. Point2Mesh optimizes a CNN-based prior at inference time, deforming an initial mesh so that it shrink-wraps the point cloud. The accompanying image gives a schematic of the framework, showing the steps of building the prior and the reconstructed mesh.
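To make the shrink-wrapping idea more concrete, here is a minimal 2D toy sketch in plain Python. It is not the authors' implementation. It leaves out the CNN entirely and moves the vertices of a closed polygon directly by gradient descent, whereas Point2Mesh optimizes the weights of a network that predicts the deformation. The symmetric Chamfer-style loss, the function names (`chamfer`, `chamfer_grad`, `shrink_wrap`), and all parameter values are assumptions chosen for illustration.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two 2D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def chamfer(verts, points):
    """Symmetric Chamfer-style loss: mean squared nearest-neighbor
    distance from vertices to points plus from points to vertices."""
    v2p = sum(min(sq_dist(v, p) for p in points) for v in verts) / len(verts)
    p2v = sum(min(sq_dist(p, v) for v in verts) for p in points) / len(points)
    return v2p + p2v


def chamfer_grad(verts, points):
    """Gradient of the loss above with respect to each vertex position
    (nearest-neighbor assignments are treated as fixed for the step)."""
    grads = [[0.0, 0.0] for _ in verts]
    # Vertex-to-point term: each vertex is pulled toward its nearest point.
    for i, v in enumerate(verts):
        p = min(points, key=lambda q: sq_dist(v, q))
        for d in range(2):
            grads[i][d] += 2.0 * (v[d] - p[d]) / len(verts)
    # Point-to-vertex term: each point pulls its nearest vertex toward itself.
    for p in points:
        j = min(range(len(verts)), key=lambda k: sq_dist(verts[k], p))
        for d in range(2):
            grads[j][d] += 2.0 * (verts[j][d] - p[d]) / len(points)
    return grads


def circle(n, radius):
    """n evenly spaced points on a circle centered at the origin."""
    return [
        [radius * math.cos(2 * math.pi * k / n),
         radius * math.sin(2 * math.pi * k / n)]
        for k in range(n)
    ]


def shrink_wrap(points, n_verts=32, init_radius=2.0, steps=100, lr=2.0):
    """Deform an initial closed polygon that encloses the point cloud
    so that it contracts onto the points."""
    verts = circle(n_verts, init_radius)  # initial "mesh" around the cloud
    for _ in range(steps):
        grads = chamfer_grad(verts, points)
        for v, g in zip(verts, grads):
            v[0] -= lr * g[0]
            v[1] -= lr * g[1]
    return verts


if __name__ == "__main__":
    cloud = circle(64, 1.0)  # stand-in for a scanned point cloud
    wrapped = shrink_wrap(cloud)
    print("loss before:", chamfer(circle(32, 2.0), cloud))
    print("loss after: ", chamfer(wrapped, cloud))
```

Optimizing vertex positions directly, as in this toy, offers no regularization against noise or missing regions. According to the paper, that is the role of the self-prior, where the deformation is produced by a CNN with shared weights rather than by free per-vertex variables.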

The proposed method was evaluated on scans from a real-world 3D scanner (NextEngine 3D Laser) and on several benchmark mesh datasets, including Thingi10K, COSEG, and TOSCA.

The results showed that Point2Mesh performs well even on real point cloud scans and can reconstruct surface meshes from noisy, incomplete point clouds, including ones with missing regions.

More details about the method can be found in the paper, published on arXiv. The implementation has been open-sourced and is available on GitHub.