A group of researchers has developed neural network dissection methods and used them to explore the role that individual units of a deep neural network actually play.

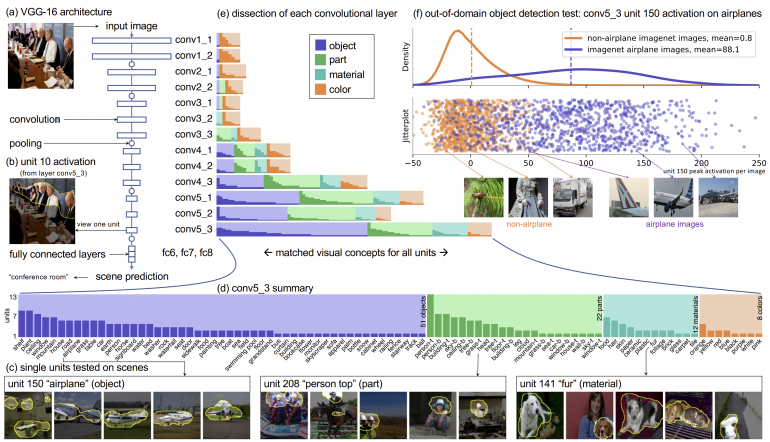

In their paper, “Understanding the Role of Individual Units in a Deep Neural Network”, David Bau et al. present an analytic framework for studying individual hidden units in the context of image classification and image generation. They analyze two types of networks: CNNs trained for scene classification and GANs trained for scene generation.

The analysis traces the changes that occur when individual units, or small groups of hidden units, are activated or deactivated. After identifying the potential “responsibilities” of individual neurons, the researchers remove those units and measure how the network performs without them. One example they give: removing the specific units that detect snow and mountains and observing how the network behaves when classifying a ski resort.
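The ablation idea can be illustrated with a toy sketch. This is not the authors' code: the three "hidden units" (snow, mountain, water), the weights in `CLASS_WEIGHTS`, and the samples are all hypothetical, and a simple weighted-sum classifier stands in for a real CNN. It only shows the general pattern of zeroing out chosen units and comparing per-class accuracy before and after.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

Sample = Tuple[List[float], str]  # (hidden-unit activations, true label)

def class_score(acts: List[float], weights: List[float],
                ablated: FrozenSet[int]) -> float:
    """Weighted sum of unit activations; ablated units contribute nothing."""
    return sum(a * w for i, (a, w) in enumerate(zip(acts, weights))
               if i not in ablated)

def predict(acts: List[float], class_weights: Dict[str, List[float]],
            ablated: FrozenSet[int] = frozenset()) -> str:
    """Return the class with the highest score after ablation."""
    return max(class_weights,
               key=lambda c: class_score(acts, class_weights[c], ablated))

def accuracy(samples: List[Sample], class_weights: Dict[str, List[float]],
             ablated: FrozenSet[int] = frozenset(),
             only_label: Optional[str] = None) -> float:
    """Fraction of correct predictions, optionally restricted to one class."""
    chosen = [(a, y) for a, y in samples if only_label is None or y == only_label]
    correct = sum(predict(a, class_weights, ablated) == y for a, y in chosen)
    return correct / len(chosen)

# Hypothetical toy setup: unit 0 = "snow", unit 1 = "mountain", unit 2 = "water".
CLASS_WEIGHTS = {"ski_resort": [1.0, 1.0, 0.0], "beach": [0.0, 0.0, 1.0]}
SAMPLES: List[Sample] = [
    ([0.9, 0.8, 0.1], "ski_resort"),
    ([0.7, 0.6, 0.2], "ski_resort"),
    ([0.1, 0.0, 0.9], "beach"),
    ([0.0, 0.2, 0.8], "beach"),
]

if __name__ == "__main__":
    snow_and_mountain = frozenset({0, 1})
    for label in CLASS_WEIGHTS:
        before = accuracy(SAMPLES, CLASS_WEIGHTS, only_label=label)
        after = accuracy(SAMPLES, CLASS_WEIGHTS, snow_and_mountain, only_label=label)
        print(f"{label}: accuracy {before:.2f} -> {after:.2f} after ablation")
```

In this toy setup, removing the snow and mountain units breaks the ski resort predictions while leaving the beach class untouched, which is the kind of class-specific effect an ablation study looks for. In a real network, the same idea would typically be applied by zeroing the chosen channels of a convolutional layer during the forward pass.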

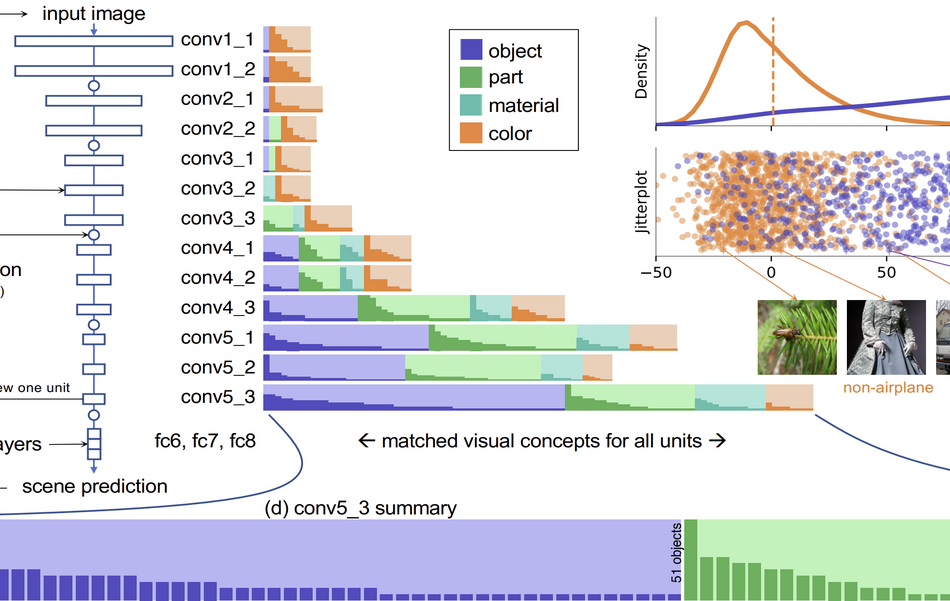

They showed that the concepts the units detect progress from colors to parts, materials, and objects as we go deeper into the network. The researchers cite the emergence of object detectors inside scene classifiers, and in GANs as well, as their most important finding. In addition, they found that adversarial attacks can be described as attacks on the units that are important for a given class.

The paper contains an in-depth analysis of all the findings, with possible explanations and the reasoning behind them. The researchers conclude that a more thorough analysis of a network's hidden units can yield many insights and could change the narrative that neural networks are just black boxes.