Researchers from Google AI have proposed a new way of training sparse neural networks that avoids the main drawbacks of existing dense-to-sparse methods.

Past research has shown that deep neural networks contain sub-networks that can perform almost as well as the full dense networks. This redundancy in dense deep neural networks has motivated so-called pruning methods, which remove connections in order to derive a sparse network from a dense one.
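The article does not say which criterion pruning methods use to choose the connections to remove. One common choice is weight magnitude, and the sketch below assumes it: after dense training, the smallest-magnitude fraction of a layer's weights is set to zero. It is a minimal pure-Python illustration; `magnitude_prune` is a hypothetical helper, not taken from any specific pruning library.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    weights: flat list of floats standing in for one layer of a trained dense network.
    sparsity: fraction of connections to remove, between 0.0 and 1.0.
    Returns (pruned_weights, mask), where mask[i] is True if connection i survives.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(sparsity * len(weights))
    # Indices ordered from weakest (smallest |w|) to strongest connection.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    mask = [i not in pruned_idx for i in range(len(weights))]
    pruned = [w if keep else 0.0 for w, keep in zip(weights, mask)]
    return pruned, mask


pruned, mask = magnitude_prune([0.5, -0.1, 0.03, -0.9], 0.5)
print(pruned)  # [0.5, 0.0, 0.0, -0.9]
print(mask)    # [True, False, False, True]
```

Note that the dense weights must already be trained before this step can run, which is exactly the cost the next paragraph points out.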

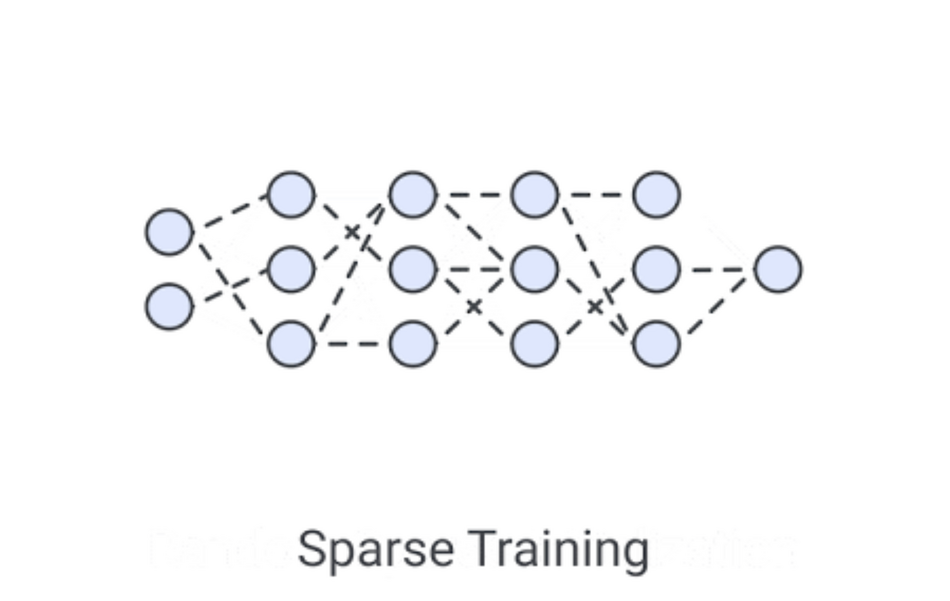

However, these methods require a dense network to be trained first and then pruned in a second phase, which makes them computationally costly and inefficient. Moreover, in dense-to-sparse methods the size of the sparse network is bounded by the size of the largest dense network that can be trained. To overcome both problems, the researchers proposed a method they call RigL. In their latest paper, they describe how RigL trains sparse networks with a fixed parameter count and a fixed computational cost throughout training.
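To make the cost argument more concrete, here is a rough back-of-the-envelope sketch. It uses a crude, hypothetical proxy called "weight-steps" (active weights multiplied by training steps) and made-up step counts. It ignores the backward pass, hardware support for sparsity, and any extra work a sparse method does when it updates its connectivity, so treat it as an illustration of the reasoning, not as a measurement of RigL.

```python
def active_weights(n_dense, sparsity):
    """Number of connections kept in a layer of `n_dense` weights at `sparsity`."""
    return round(n_dense * (1.0 - sparsity))


def weight_steps_dense_then_prune(n_dense, sparsity, dense_steps, finetune_steps):
    # Phase 1 trains every weight; phase 2 fine-tunes only the surviving weights.
    return n_dense * dense_steps + active_weights(n_dense, sparsity) * finetune_steps


def weight_steps_sparse(n_dense, sparsity, steps):
    # A fixed-budget sparse method keeps the same number of active weights throughout.
    return active_weights(n_dense, sparsity) * steps


# Hypothetical layer: 1M weights, 90% sparsity, same total number of steps (120k).
dense_cost = weight_steps_dense_then_prune(1_000_000, 0.9, 100_000, 20_000)
sparse_cost = weight_steps_sparse(1_000_000, 0.9, 120_000)
print(dense_cost, sparse_cost, dense_cost / sparse_cost)  # 102000000000 12000000000 8.5
```

Under these assumed numbers the dense phase dominates the total, which is why a method that never trains the full dense network can be much cheaper at high sparsity.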

The proposed method works by identifying which neurons should be active during training and uses that information to search for a better sparse network. It starts from a sparse network with a randomly initialized structure. At regular intervals, the weakest connections (those with the smallest weight magnitudes) are removed, and new connections are activated where the magnitude of the instantaneous gradient is largest. Training then continues with the updated network.
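The drop-and-grow update described above can be sketched in pure Python for a single layer stored as flat lists. This is a minimal illustration under stated assumptions, not the authors' TensorFlow implementation: `rigl_update` and its arguments are hypothetical names, "weakest" is taken to mean smallest weight magnitude, newly activated connections start at zero, and the caller is assumed to supply dense gradients and to call the function at a fixed interval of training steps. Letting just-dropped connections compete for regrowth is our reading of the paper and should also be treated as an assumption.

```python
def rigl_update(weights, mask, grads, drop_fraction):
    """One drop-and-grow step on a flat layer (hypothetical helper, pure Python).

    weights, grads: lists of floats of equal length (grads are dense gradients,
        available for inactive connections too).
    mask: list of bools, True where a connection is active.
    drop_fraction: fraction of active connections to replace in this step.
    Returns new (weights, mask) with the same number of active connections.
    """
    active = [i for i, m in enumerate(mask) if m]
    inactive = [i for i, m in enumerate(mask) if not m]
    k = int(drop_fraction * len(active))
    # Drop: the k active connections with the smallest weight magnitude.
    to_drop = sorted(active, key=lambda i: abs(weights[i]))[:k]
    # Grow: among connections not active after the drop (assumption: this includes
    # the just-dropped ones), pick the k with the largest gradient magnitude.
    candidates = inactive + to_drop
    to_grow = sorted(candidates, key=lambda i: abs(grads[i]), reverse=True)[:k]
    new_weights = list(weights)
    new_mask = list(mask)
    for i in to_drop:
        new_mask[i] = False
        new_weights[i] = 0.0
    for i in to_grow:
        new_mask[i] = True
        new_weights[i] = 0.0  # newly grown connections start at zero
    return new_weights, new_mask


w, m = rigl_update(
    weights=[0.8, -0.05, 0.0, 0.3, 0.02, 0.0],
    mask=[True, True, False, True, True, False],
    grads=[0.1, 0.2, 0.9, 0.0, 0.3, -0.4],
    drop_fraction=0.5,
)
print(m)  # [True, False, True, True, False, True]
print(w)  # [0.8, 0.0, 0.0, 0.3, 0.0, 0.0]
```

Because exactly as many connections are grown as are dropped, the number of active connections, and therefore the parameter count, stays the same after every update.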

The researchers evaluated the new method on the popular benchmark datasets CIFAR-10 and ImageNet-2012. They compared RigL with existing dense-to-sparse methods and showed that it outperforms these baselines across a wide range of sparsity levels while remaining computationally efficient.

The TensorFlow implementation of RigL has been open-sourced and is available on GitHub. The paper is available on arXiv.