Researchers at Google have released Room-Across-Room (RxR), a new dataset and benchmark for instruction-following navigation.

Robotic navigation is one of the major challenges in artificial intelligence. Teaching agents to navigate complex environments has been a difficult problem for decades, but researchers have developed agents that can do so reasonably well. Understanding navigation instructions expressed in natural language has proven even harder. Vision-and-language navigation (VLN) addresses exactly this problem: developing agents that can understand spatial natural language and act on it.

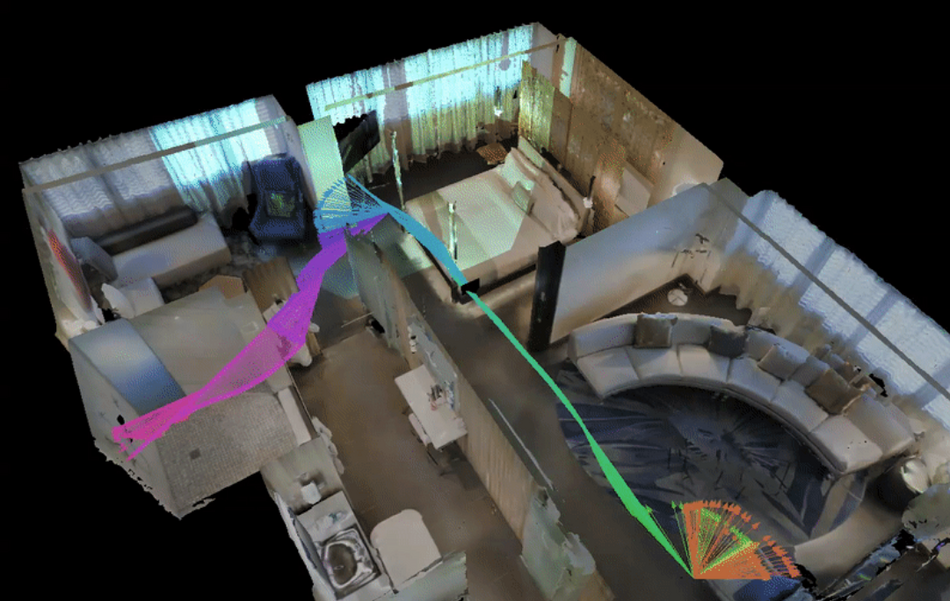

To foster research on spatial language understanding and vision-and-language navigation (VLN), Google researchers collected a new dataset of human-annotated navigation instructions in multiple languages. The dataset, called RxR (Room-Across-Room), contains more than 126,000 samples in three languages: English, Hindi and Telugu. Each sample consists of a text instruction that describes how to navigate through an indoor environment. The researchers built the dataset using the 3D environments from the Matterport3D dataset and a photorealistic simulator. The environments are mostly offices, homes and public buildings.

According to the researchers, this is the largest VLN dataset to date. To evaluate it, they trained agents on each of the three languages in the dataset. They measured performance by comparing the paths the agents followed with the reference paths, and found that agents trained on RxR perform much better than a random-walk baseline. However, they also report that these agents still fall well short of human performance.
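The article does not say exactly how the paths were compared. One path-fidelity metric used in VLN is normalized Dynamic Time Warping (nDTW), which aligns the agent's path with the reference path and converts the accumulated distance into a score between 0 and 1. The sketch below assumes nDTW, 2D coordinates in metres, straight-line (Euclidean) distances and a 3 m success threshold. Real evaluations typically use distances on the environment's navigation graph instead, and the function names here are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # assumption: positions as (x, y) in metres


def dtw(reference: Sequence[Point], query: Sequence[Point]) -> float:
    """Classic dynamic time warping cost between two paths (Euclidean step cost)."""
    n, m = len(reference), len(query)
    inf = float("inf")
    # cost[i][j] = minimal cumulative distance aligning reference[:i] with query[:j]
    cost: List[List[float]] = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(reference[i - 1], query[j - 1])
            # extend the best of the three admissible predecessor alignments
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def ndtw(reference: Sequence[Point], query: Sequence[Point], threshold: float = 3.0) -> float:
    """Normalized DTW: 1.0 for identical paths, decaying towards 0 as paths diverge.

    The 3 m threshold is an assumed default, not a value stated in the article.
    """
    return math.exp(-dtw(reference, query) / (len(reference) * threshold))
```

For example, `ndtw([(0, 0), (1, 0)], [(0, 0), (1, 0)])` returns `1.0`, while an agent path shifted 1 m sideways, `[(0, 1), (1, 1)]`, scores `exp(-1/3)`, roughly 0.72. A random walk typically wanders far from the reference and scores close to 0. This is why a path-shape metric can separate trained agents from random behaviour more finely than a simple success rate.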

Together with the dataset, the researchers announced a new vision-and-language navigation challenge, the RxR Challenge. The challenge is now open for registration, and the RxR dataset has been publicly released.