Google has introduced SoundStream, a neural audio codec that runs in real time on smartphones. Unlike Lyra, Google's previous neural codec, SoundStream handles higher-quality audio and can encode a wider range of sounds, including clean speech, speech in noise, and music.

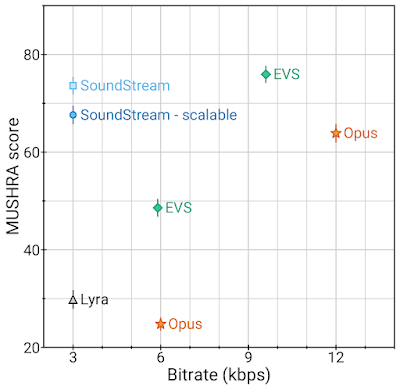

An audio codec compresses sound so that it takes up less storage space and uses less network bandwidth. Among the most efficient modern codecs are Opus (used in Google Meet and YouTube) and EVS (used in mobile telephony). These codecs already compress audio very efficiently, but there has recently been growing interest in applying machine learning to audio coding.
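To put "less space and less bandwidth" into numbers, the sketch below compares uncompressed PCM audio with the 3 kbit/s SoundStream bitrate quoted in this article. The 24 kHz, 16-bit mono reference format is an assumption chosen for illustration, and the helper names are hypothetical.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bits_per_sample: int, channels: int = 1) -> float:
    """Bitrate of uncompressed PCM audio in kbit/s."""
    return sample_rate_hz * bits_per_sample * channels / 1000


def kilobytes_per_minute(bitrate_kbps: float) -> float:
    """Storage needed for one minute of audio at the given bitrate (1 kB = 1000 bytes)."""
    return bitrate_kbps * 60 / 8


# Assumption: 24 kHz, 16-bit mono PCM as the uncompressed reference.
raw_kbps = pcm_bitrate_kbps(24_000, 16)  # 384.0 kbit/s
coded_kbps = 3.0                         # SoundStream bitrate quoted in this article
ratio = raw_kbps / coded_kbps            # 128.0

print(f"raw:   {raw_kbps} kbit/s, {kilobytes_per_minute(raw_kbps)} kB/min")
print(f"coded: {coded_kbps} kbit/s, {kilobytes_per_minute(coded_kbps)} kB/min")
print(f"compression ratio: {ratio:.0f}x")
```

Under these assumptions, one minute of audio shrinks from 2880 kB to 22.5 kB, a 128x reduction.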

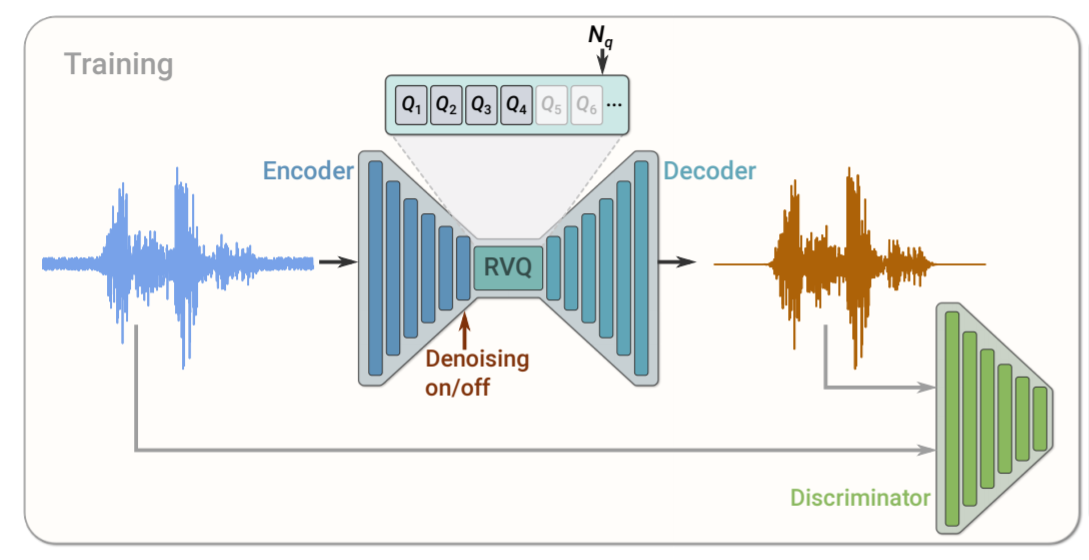

SoundStream extends Google's earlier work on Lyra, a neural audio codec for low-bitrate speech. SoundStream is a neural network with three parts: an encoder, a residual vector quantizer, and a decoder. The encoder converts the input audio into a sequence of embeddings, the quantizer compresses these into a compact stream of codes, and the decoder reconstructs audio from those codes. During training, a discriminator supplies an adversarial loss that, combined with reconstruction losses, pushes the decoded audio to be as close as possible to the original uncompressed input. After training, the encoder and decoder can run separately, for example on the sender's and the receiver's devices, so only the compressed codes need to travel over the network.
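The quantizer described in the SoundStream paper is a residual vector quantizer (RVQ): a cascade of codebooks in which each stage quantizes the error left over by the previous stages. The sketch below is a minimal pure-Python illustration of that idea, not Google's implementation. Assumptions: the codebooks are random rather than learned, each codebook includes a zero vector, and the toy sizes (`DIM`, `STAGES`, `CODEBOOK_SIZE`) and all function names are hypothetical. The neural encoder and decoder are omitted; `frame` stands in for one encoder output vector.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]
Codebook = List[Vector]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_index(codebook: Sequence[Vector], v: Sequence[float]) -> int:
    """Index of the codebook entry closest to v."""
    return min(range(len(codebook)), key=lambda i: squared_distance(codebook[i], v))


def rvq_encode(v: Sequence[float], codebooks: Sequence[Codebook]) -> List[int]:
    """Each stage quantizes the residual that the previous stages left behind."""
    residual = list(v)
    indices = []
    for codebook in codebooks:
        i = nearest_index(codebook, residual)
        indices.append(i)
        residual = [r - c for r, c in zip(residual, codebook[i])]
    return indices


def rvq_decode(indices: Sequence[int], codebooks: Sequence[Codebook]) -> Vector:
    """Sum the chosen entry of each stage; fewer indices give a coarser output."""
    out = [0.0] * len(codebooks[0][0])
    for i, codebook in zip(indices, codebooks):
        out = [o + c for o, c in zip(out, codebook[i])]
    return out


# Hypothetical toy setup: random (not learned) codebooks with shrinking scale.
# Entry 0 of every codebook is the zero vector, so no stage can increase the error.
DIM, STAGES, CODEBOOK_SIZE = 4, 6, 16
rng = random.Random(0)
codebooks = [
    [[0.0] * DIM]
    + [[rng.uniform(-0.5**s, 0.5**s) for _ in range(DIM)] for _ in range(CODEBOOK_SIZE - 1)]
    for s in range(STAGES)
]

frame = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]  # stand-in for one encoder output
codes = rvq_encode(frame, codebooks)
bits_per_frame = STAGES * int(math.log2(CODEBOOK_SIZE))  # 6 stages x 4 bits = 24

# Reconstruction error when only the first n stages are kept.
errors = [squared_distance(frame, rvq_decode(codes[:n], codebooks)) for n in range(1, STAGES + 1)]
print(codes, bits_per_frame, [round(e, 4) for e in errors])
```

Each stage costs log2(`CODEBOOK_SIZE`) bits per frame, so keeping fewer stages lowers the bitrate at the price of a coarser reconstruction. The SoundStream paper relies on this property (training with "quantizer dropout") so that a single model can serve several bitrates.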

SoundStream is the first neural codec that handles both speech and music while running in real time on a smartphone CPU. At 3 kbit/s, SoundStream delivers better audio quality than Opus at 12 kbit/s and quality similar to EVS at 9.6 kbit/s. In other words, SoundStream can match the quality of these codecs while using 3 to 4 times less bandwidth.
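The bandwidth claim follows directly from the quoted bitrates. The sketch below reproduces it and also converts each bitrate into payload bytes per packet, assuming 20 ms packets (a common frame length for real-time voice; this packet size is an assumption, not a figure from the article). The constant and function names are hypothetical.

```python
# Bitrates quoted in the text, in bit/s (integers keep the arithmetic exact).
SOUNDSTREAM_BPS = 3_000
REFERENCE_BPS = {"Opus": 12_000, "EVS": 9_600}

# Assumption: 20 ms packets, a common frame length for real-time voice.
FRAME_MS = 20


def bits_per_packet(bitrate_bps: int, frame_ms: int = FRAME_MS) -> int:
    """Payload bits carried by one packet of frame_ms milliseconds."""
    return bitrate_bps * frame_ms // 1000


def bandwidth_ratio(reference_bps: int, candidate_bps: int = SOUNDSTREAM_BPS) -> float:
    """How many times more bandwidth the reference codec needs."""
    return reference_bps / candidate_bps


for name, bps in REFERENCE_BPS.items():
    print(
        f"{name}: {bandwidth_ratio(bps):.1f}x SoundStream's bitrate; "
        f"{bits_per_packet(bps) / 8} vs {bits_per_packet(SOUNDSTREAM_BPS) / 8} bytes per packet"
    )
```

Opus at 12 kbit/s needs 4.0 times and EVS at 9.6 kbit/s needs 3.2 times SoundStream's bitrate, which is where the "3 to 4 times" figure comes from. Per 20 ms packet, that is 30 and 24 bytes of payload versus 7.5 bytes, before any network header overhead.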