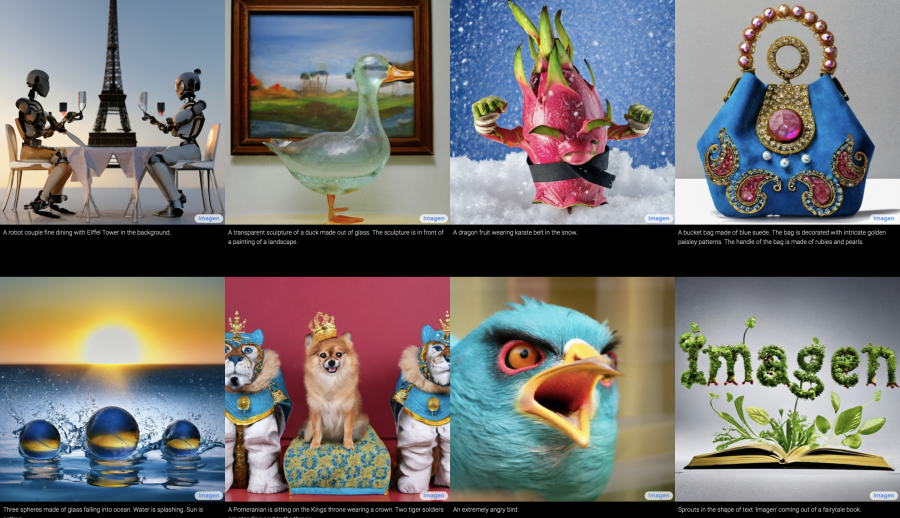

Google has introduced Imagen, a model that generates a 1024 x 1024 pixel image from a text description. According to Google, Imagen surpasses OpenAI's DALL-E 2 in the realism of the images it produces.

Imagen combines transformer language models, which process the text description, with diffusion models, which generate the image and then increase its resolution in successive stages. The model was trained on data that includes the LAION-400M dataset, which contains about 400 million image-text pairs collected from the Internet.
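To make the staged resolution increase concrete, here is a minimal Python sketch that only traces image sizes through such a cascade. It does not run any diffusion model. The intermediate sizes (64 and 256 pixels) and all names (`Stage`, `CASCADE`, `resolution_schedule`, `upscale_factors`) are illustrative assumptions; only the final 1024 x 1024 output is stated in this article.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    out_size: int  # side length in pixels of the square image this stage outputs


# Assumed cascade: the 64 and 256 sizes are illustrative, 1024 comes from the article.
CASCADE = [
    Stage("text-conditioned base diffusion model", 64),
    Stage("super-resolution diffusion model 1", 256),
    Stage("super-resolution diffusion model 2", 1024),
]


def resolution_schedule(cascade: List[Stage]) -> List[Tuple[Optional[int], int]]:
    """Return (input_size, output_size) for each stage.

    The first stage starts from noise rather than an image, so its input is None.
    """
    schedule = []
    prev: Optional[int] = None
    for stage in cascade:
        schedule.append((prev, stage.out_size))
        prev = stage.out_size
    return schedule


def upscale_factors(cascade: List[Stage]) -> List[int]:
    """Return the integer upscaling factor between each pair of consecutive stages."""
    return [b.out_size // a.out_size for a, b in zip(cascade, cascade[1:])]


if __name__ == "__main__":
    for stage, (src, dst) in zip(CASCADE, resolution_schedule(CASCADE)):
        source = "noise" if src is None else f"{src} x {src}"
        print(f"{stage.name}: {source} -> {dst} x {dst}")
```

Under these assumptions, each super-resolution stage enlarges the image by a factor of 4 per side, and every stage would be conditioned on the same text embedding.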

Google compared Imagen with DALL-E 2 using ratings from human evaluators, who preferred the Google model's output in the majority of cases. In addition, Imagen achieved a new state-of-the-art FID score of 7.27 on the COCO dataset, even though it was not trained on images from that dataset.
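For readers unfamiliar with the metric, FID (Fréchet Inception Distance) compares the statistics of real and generated images in the feature space of a pretrained Inception network. The symbols below are the standard definition rather than anything specific to this article: $\mu_r, \Sigma_r$ are the mean and covariance of the features of real images, and $\mu_g, \Sigma_g$ are those of generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

Lower values mean the generated images are statistically closer to the real ones, so a score of 7.27 is better than any higher score measured under the same protocol.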

Alongside Imagen, Google has introduced DrawBench, a comprehensive benchmark for text-to-image models. For now, the company has decided not to release Imagen to the public, because the model can reproduce biases present in its training data.