LongWriter is a framework and a family of large language models (LLMs) designed for ultra-long text generation, producing outputs that can exceed 10,000 words while remaining coherent, high-quality, and relevant. Rather than changing the underlying architecture, it extends existing LLMs through their training data. The AgentWrite pipeline decomposes long writing tasks into manageable sections to produce long-output examples, and models are then fine-tuned on the resulting dataset. LongWriter models, such as LongWriter-9B, LongWriter-8B, and LongWriter-9B-DPO, are built on existing open LLMs and optimized for generating high-quality long-form content across a range of writing tasks.

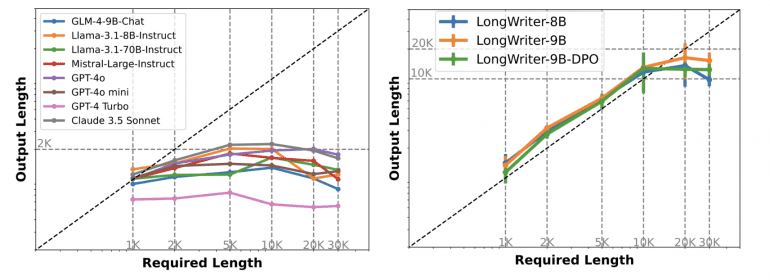

While models like Claude 3.5 and GPT-4 can process 100,000 tokens or more of input, the amount of coherent output they generate is far smaller. The LongWriter authors found that such long-context models struggle to produce outputs longer than about 2,000 words, even when the prompt asks for much more.

Generating very long outputs is challenging because maintaining structure, coherence, and relevance over extended text is difficult even for advanced models. Output tends to stop early or degrade into repetitive or off-topic content. The LongWriter authors attribute this gap mainly to training data: a model's output length is bounded by the length of the outputs it saw during supervised fine-tuning, and very long examples are scarce. So although the input context window can be large, the effective limit for high-quality output is much smaller.

LongWriter pushes the practical limit for coherent generation beyond 10,000 words. It achieves this mainly by fine-tuning existing models on purpose-built long-output data rather than by modifying the models themselves.

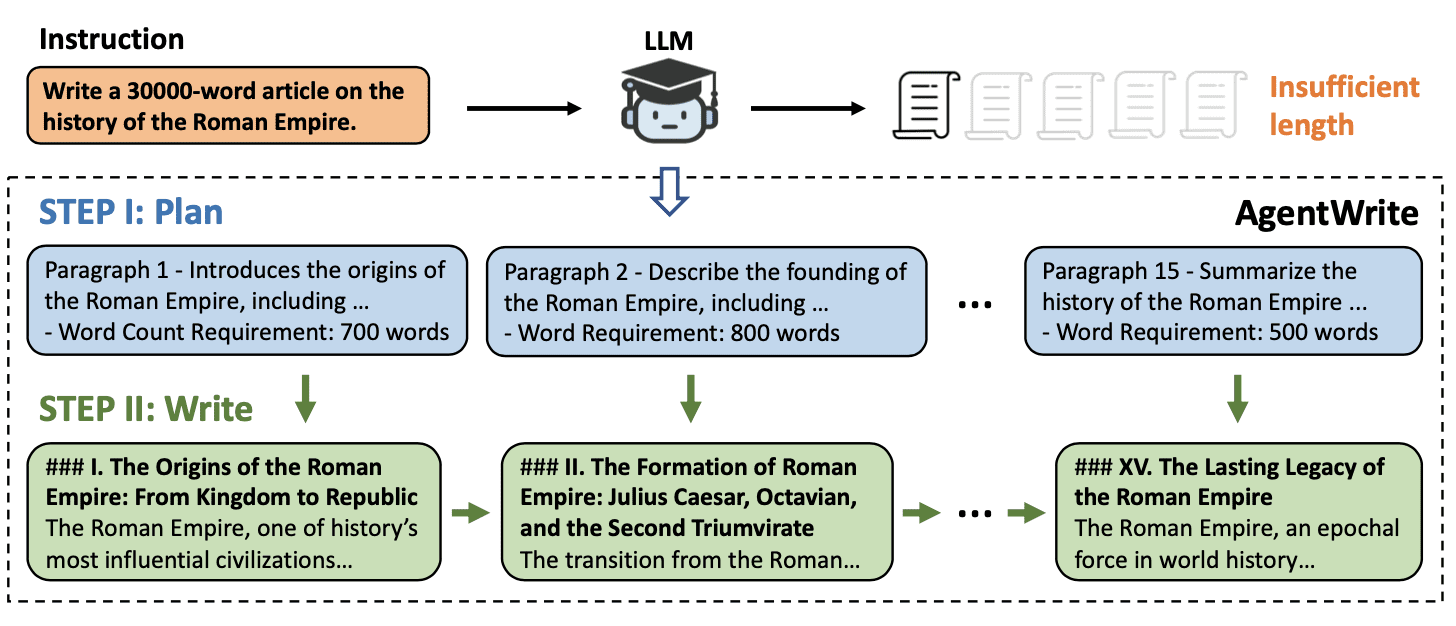

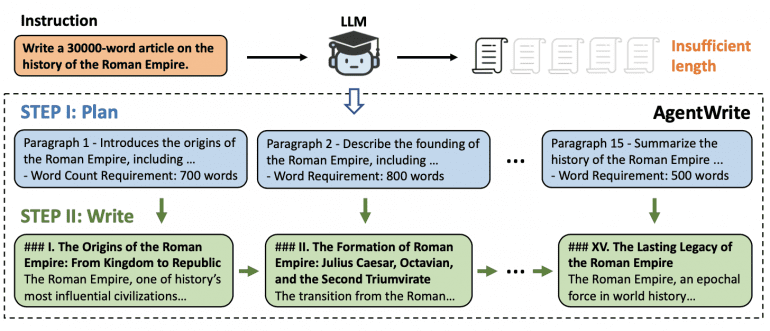

AgentWrite Pipeline

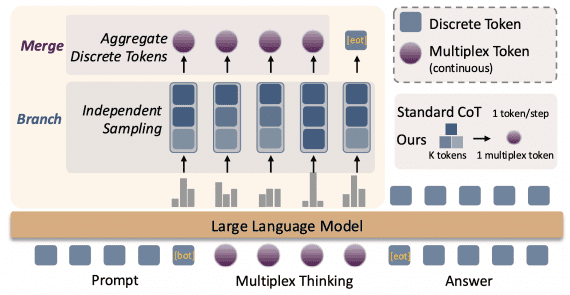

To tackle this limitation, the LongWriter developers devised AgentWrite, an agent-based pipeline that decomposes a long writing task into smaller, manageable subtasks. First, AgentWrite generates a detailed writing plan from the user prompt, outlining the structure and a target word count for each section. The model then writes the sections one at a time, in order, with the plan and the previously written sections as context. This lets off-the-shelf LLMs produce coherent outputs that extend beyond 20,000 words, and the LongWriter team used it to construct long-output training data.
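The plan-then-write loop can be sketched as below. This is a minimal illustration, not the LongWriter implementation: the `LLM` callable type, the `'<main point> | <word count>'` plan format, the prompt wording, and the `fake_llm` stand-in are all hypothetical. The write step passes the plan and the sections written so far back to the model so that each new section can continue the earlier ones.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical LLM interface (an assumption): prompt string in, generated text out.
LLM = Callable[[str], str]

# Assumed plan format, one section per line: "<main point> | <target word count>".
PLAN_LINE = re.compile(r"^\s*(.+?)\s*\|\s*(\d+)\s*$")


def make_plan(llm: LLM, instruction: str) -> List[Tuple[str, int]]:
    """Step 1 (plan): ask for an outline with a word budget for each section."""
    prompt = (
        "Break the following writing task into sections. Output one line per "
        "section in the form '<main point> | <word count>'.\n\n" + instruction
    )
    plan = []
    for line in llm(prompt).splitlines():
        match = PLAN_LINE.match(line)
        if match:  # skip any line that does not follow the assumed format
            plan.append((match.group(1), int(match.group(2))))
    return plan


def write_sections(llm: LLM, instruction: str, plan: List[Tuple[str, int]]) -> str:
    """Step 2 (write): generate sections in order, feeding back the text so far."""
    outline = "\n".join(f"{point} | {words}" for point, words in plan)
    written: List[str] = []
    for i, (point, words) in enumerate(plan, start=1):
        so_far = "\n\n".join(written)
        prompt = (
            f"Task: {instruction}\nPlan:\n{outline}\n"
            f"Text written so far:\n{so_far}\n"
            f"Now write section {i} ({point}) in about {words} words."
        )
        written.append(llm(prompt).strip())
    return "\n\n".join(written)


def agent_write(llm: LLM, instruction: str) -> str:
    """Run both steps: plan first, then write every planned section."""
    return write_sections(llm, instruction, make_plan(llm, instruction))


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in model: returns a fixed plan, then echoes each request."""
    if prompt.startswith("Break the following"):
        return "Introduction | 300\nHistory | 500\nConclusion | 200"
    return prompt.rsplit("Now write ", 1)[-1]


if __name__ == "__main__":
    print(agent_write(fake_llm, "Write a history of renewable energy."))
```

Running the module with `fake_llm` prints the three echoed section requests, which makes the control flow visible without a real model; a real client would replace `fake_llm`.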

LongWriter-6k Dataset

The key to unlocking ultra-long generation lies in data. Using AgentWrite, the LongWriter team built the LongWriter-6k dataset: 6,000 supervised fine-tuning (SFT) examples with outputs ranging from 2,000 to 32,000 words. Adding this dataset to the training of existing models significantly extends their output length, enabling them to generate texts of over 10,000 words without compromising quality.
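To make the 2,000 to 32,000-word range concrete, the hypothetical helper below checks candidate SFT examples against it. The `SFTExample` record and the filtering step are illustrative assumptions, not part of the published pipeline. Counting whitespace-separated words is also an assumption: it undercounts text in languages written without spaces, which would need a different length measure.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Output-length range quoted for LongWriter-6k, in words.
MIN_WORDS, MAX_WORDS = 2_000, 32_000


@dataclass
class SFTExample:
    """Hypothetical record: one instruction and its long-form response."""
    instruction: str
    response: str


def word_count(text: str) -> int:
    # Whitespace tokenization: an assumption that only suits space-delimited languages.
    return len(text.split())


def in_length_range(example: SFTExample, lo: int = MIN_WORDS, hi: int = MAX_WORDS) -> bool:
    """True if the response length falls inside [lo, hi] words (inclusive)."""
    return lo <= word_count(example.response) <= hi


def filter_long_outputs(examples: Iterable[SFTExample]) -> List[SFTExample]:
    """Keep only examples whose responses fall inside the target length range."""
    return [ex for ex in examples if in_length_range(ex)]
```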

LongWriter Models

LongWriter builds on well-established open large language models, fine-tuning them for ultra-long text generation so that they maintain coherence and relevance throughout extended outputs.

- LongWriter-9B: based on GLM-4-9B.

- LongWriter-8B: based on Llama-3.1-8B.

- LongWriter-9B-DPO: an enhanced version of LongWriter-9B that adds Direct Preference Optimization (DPO) to improve quality and coherence in ultra-long outputs.
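LongWriter-9B-DPO's extra training stage uses Direct Preference Optimization. The source does not describe its preference data or hyperparameters, so the sketch below shows only the generic DPO loss for a single preference pair (a preferred and a rejected response to the same prompt). `beta=0.1` is an illustrative default, not a LongWriter setting.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative value only
) -> float:
    """Per-pair DPO loss: -log sigmoid(beta * (chosen log-ratio - rejected log-ratio)).

    Each argument is the summed log-probability of a full response under the
    policy being trained or under the frozen reference model.
    """
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_log_ratio - rejected_log_ratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)
```

The loss equals log 2 when the policy and reference agree, falls as the policy raises the preferred response's probability relative to the reference, and rises in the opposite case.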

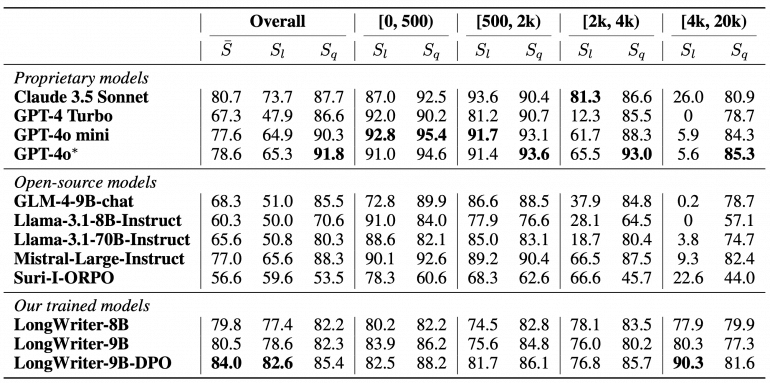

Results

LongWriter's performance is evaluated on LongBench-Write, a benchmark whose writing instructions request outputs ranging from under 500 words to over 4,000 words. The 9-billion-parameter LongWriter-9B-DPO achieves state-of-the-art results on this benchmark, outperforming much larger proprietary models at ultra-long generation.
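A benchmark like this has to score how closely an output matches the requested length, alongside its quality. As a hedged illustration, the hypothetical score below is 100 when the output hits the requested word count and falls linearly to 0 at one third or four times the target. These constants reflect our reading of LongBench-Write's length score and should be checked against its published definition; the clipping at 0 is an added assumption.

```python
def length_score(required: int, actual: int) -> float:
    """Hypothetical piecewise-linear length-adherence score in [0, 100]."""
    if required <= 0:
        raise ValueError("required length must be positive")
    if actual <= 0:
        return 0.0  # an empty output gets no credit
    if actual > required:
        # Overshoot: reaches 0 at four times the required length.
        raw = 1 - (actual / required - 1) / 3
    else:
        # Undershoot: reaches 0 at one third of the required length.
        raw = 1 - (required / actual - 1) / 2
    return 100 * max(0.0, raw)  # clip so far-off outputs score 0, not negative
```

With these constants, overshooting is penalized more gently than undershooting: a 2,000-word answer to a 1,000-word request scores about 66.7, while a 1,000-word answer to a 2,000-word request scores 50.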

LongWriter's outputs remain coherent and logically structured at lengths where the base models typically stop short. Because the models are fine-tuned on data produced by AgentWrite's plan-then-write decomposition, they learn to organize long outputs into clear sections and maintain flow across them, while generating the full text in a single pass.

Future Directions

LongWriter marks a significant step forward in long-form AI writing. Future goals include expanding the output window further, possibly to 100,000 words, and refining the data pipeline to produce even higher-quality training outputs. These advances make LongWriter a promising tool for fields that require long, high-quality AI-generated content.