Google has introduced a PaLM – language model with 540 billion parameters. PaLM has surpassed existing language models in most benchmarks.

The model is trained using 6144 Google TPU tensor processors on the Pathways parallel computing platform. The training data included combinations of English and multilingual datasets with texts from websites, books, Wikipedia articles, chats and codes from GitHub.

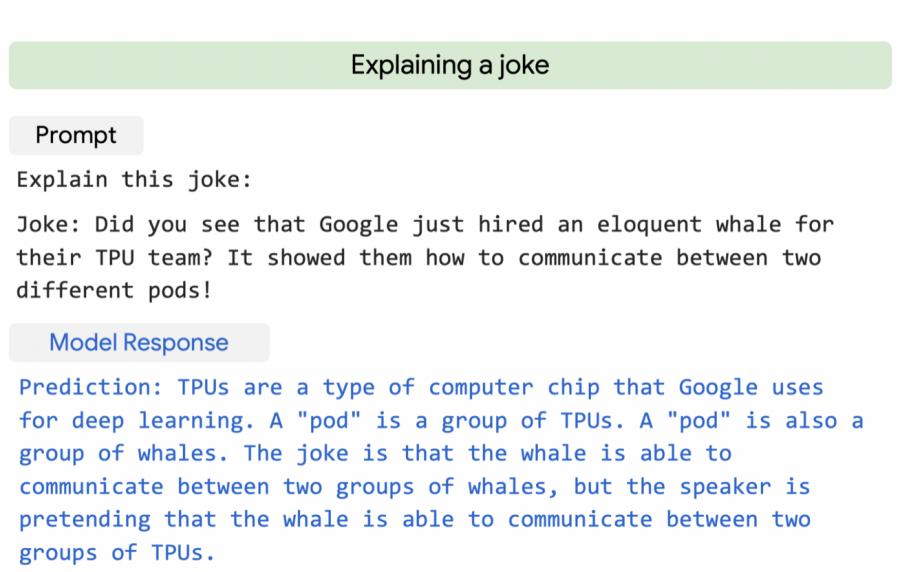

PaLM has been tested in several hundred language comprehension and generation tasks and has shown superiority in most of them, and in many cases – by a significant margin. Among such tasks are determining causes and effects, understanding the content, guessing movies by emoji, searching for synonyms and counterarguments, translating text. In the tasks of answering questions and drawing conclusions, the accuracy of PaLM exceeds the state-of-the-art model by several times.

PaLM demonstrates high accuracy when performing code generation tasks despite the fact that only 5% of the code was contained in the training data. In particular, the accuracy of the model is the same as that of Codex, but at the same time 50 times less Python code is used for PaLM training.