StarCoder is a state-of-the-art method for code correction and generation using neural networks from the research community The BigCode, MIT, University of Pennsylvania, and Columbia University. StarCoder improves quality and performance metrics compared to previous models such as PaLM, LaMDA, LLaMA, and OpenAI code-cushman-001. It works with 86 programming languages, including Python, C++, Java, Kotlin, PHP, Ruby, TypeScript, and others. Swift is not included in the list due to a “human error” in compiling the list. The model code, training data, and model parameters are available in open access on Github.

StarCoder is a fine-tuned model from the same authors as SantaCoder. The code generation with the model can be tested in a sandbox.

StarCoder training

The researchers presented two models:

- StarcoderBase – a compact model trained on 86 programming languages designed only for error correction.

- Starcoder generates new code and corrects errors in existing code and was fine-tuned on 35 billion Python tokens.

The 15.5 billion-parameter model is a fine-tuned Transformer-based SantaCoder (decoder-only) with Fill-in-the-Middle and MultiQuery attention mechanisms.

The model was trained on the The Stack 1.2 dataset, which contains over 6 TB of source code files from open Github repositories, covering 358 programming languages, from which 86 languages with sufficient data for training were selected. Code from repositories was cleaned and anonymized: email addresses, names, and passwords were removed.

Evaluation of StarCoder results

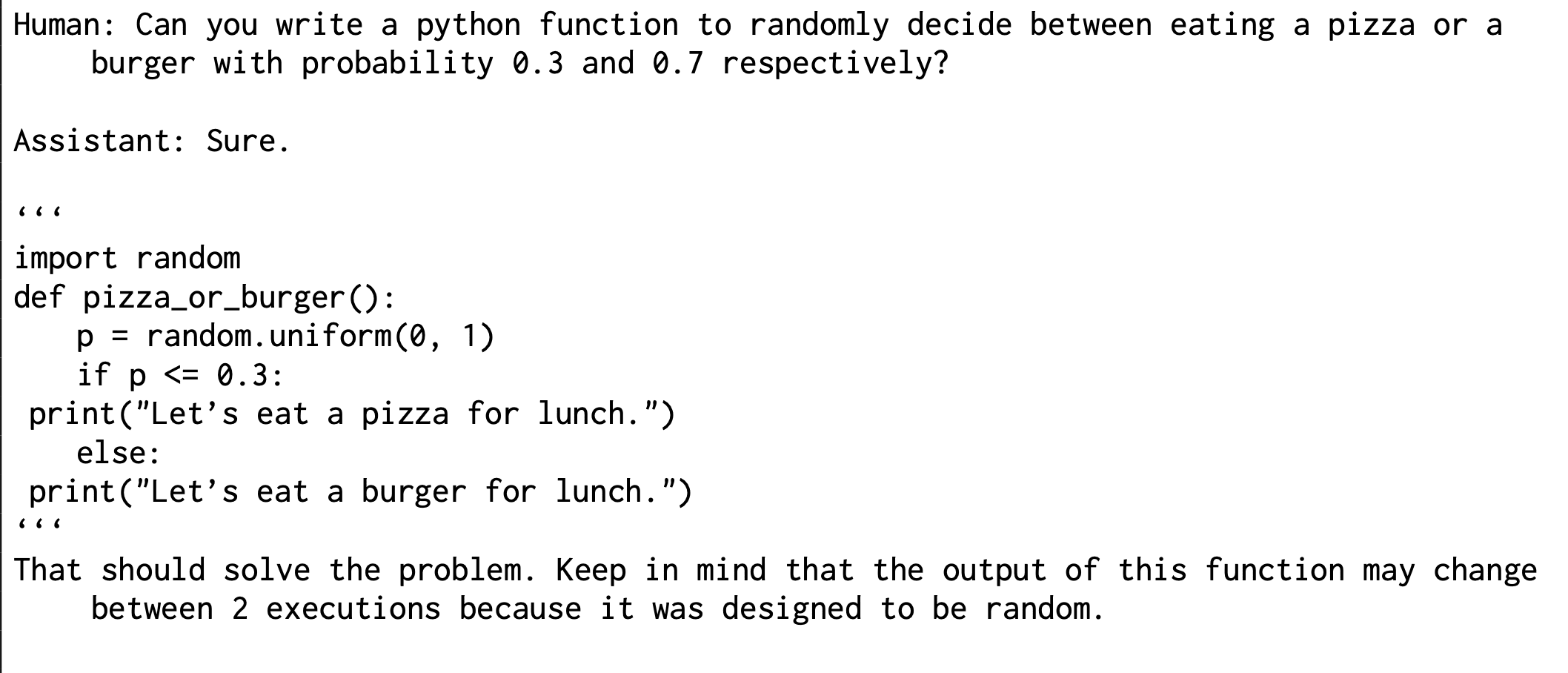

Example of Python code generation from a text prompt using StarCoder

Example of Python code generation from a text prompt using StarCoder

StarCoder was evaluated on several metrics, including HumanEval and MBPP. Significant improvements in accuracy of code correction and generation in Python and Java languages were demonstrated compared to previous models.