VBVR: 2 Million Videos for Reasoning Training — an Open Dataset That Changes the Rules

26 February 2026

A team of more than 50 researchers from around the world — from Berkeley, Stanford, CMU, Oxford and other universities — has published Very Big Video Reasoning (VBVR), a massive…

Choosing Between Vendor AI, In-House Builds and Hybrid Delivery

20 February 2026

Most organisations now face a practical choice about how to deliver AI. Should they rely on vendor tools and platforms? Should they build capabilities in-house? Or should they blend both…

GLM-5: Top-1 Open-Weight Model for Code and Text Generation, Competing with Claude and GPT on Agentic Tasks

19 February 2026

Zhipu AI and Tsinghua University have published the GLM-5 technical report. GLM-5 is currently the top-performing open-weight language model by benchmarks: first place among open-weight models on Artificial Analysis and top-1…

Baichuan-M3: An Open Medical Model That Conducts Consultations Like a Real Doctor and Outperforms GPT-5.2 on Benchmarks

10 February 2026

A research team from the Chinese company Baichuan has introduced Baichuan-M3 — an open medical language model that, instead of the traditional question-and-answer mode, conducts a full clinical dialogue, actively…

Hyper-Personalized Email Marketing: How AI is Killing the “Blast” Method

Email marketing isn’t dead—but the spray-and-pray approach certainly is. Sending identical messages to your entire list and hoping for the best no longer cuts it when consumers expect personalized experiences…

Claude Sonnet 4.5 Leads on Comprehensive Backend Benchmark, Outperforming in Both Code and Environment Configuration

22 January 2026

A team of researchers from Fudan University and Shanghai Qiji Zhifeng Co. introduced ABC-Bench — the first benchmark that tests the ability of AI agents to solve full-fledged backend development…

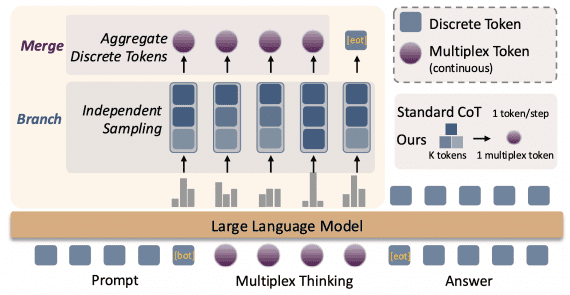

Multiplex Thinking: Sampling 3 Tokens Instead of 1 Increases Olympiad Problem-Solving Accuracy from 40% to 55%

22 January 2026

Researchers from the University of Pennsylvania and Microsoft Research introduced Multiplex Thinking — a new reasoning method for large language models. The idea is to generate not one token at…

Yume1.5: An Open Model for Creating Interactive Virtual Worlds with Keyboard Control

5 January 2026

Researchers from Shanghai AI Laboratory and Fudan University published Yume1.5 — a model for generating interactive virtual worlds that can be controlled directly from the keyboard. Unlike regular video generation,…

AI Models Are 13% Worse Than Humans at Detecting Generated ASMR Videos

18 December 2025

Researchers from CUHK, NUS, University of Oxford, and Video Rebirth introduced Video Reality Test — the first benchmark that tests whether modern AI models can create videos indistinguishable from real…

Wan-Move: Open-Source Alternative to Kling 1.5 Pro for Motion-Controllable Video Generation

13 December 2025

A team of researchers from Tongyi Lab (Alibaba Group), Tsinghua University, and the University of Hong Kong presented Wan-Move — a new approach to precise motion control in generative video…

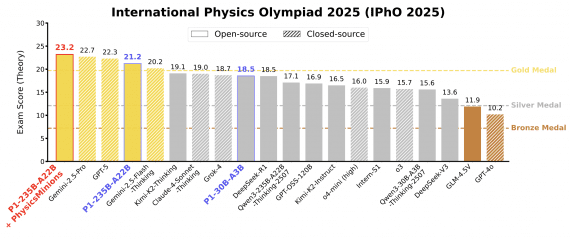

P1: First Open-Source Model to Win Gold at the International Physics Olympiad

30 November 2025

P1-235B-A22B from Shanghai AI Laboratory became the first open-source model to win a gold medal at the latest International Physics Olympiad (IPhO 2025), scoring 21.2 out of 30 points and…

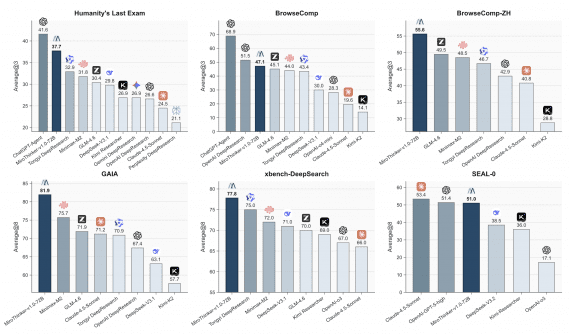

MiroThinker v1.0: Open-Source AI Research Agent Learns to Make Up to 600 Tool Calls Per Task

20 November 2025

The MiroMind team introduced MiroThinker v1.0, an AI research agent capable of performing up to 600 tool calls per task with a 256K-token context window. On four key…

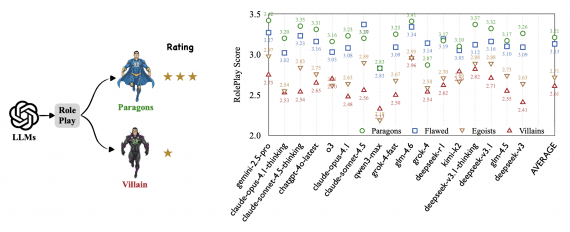

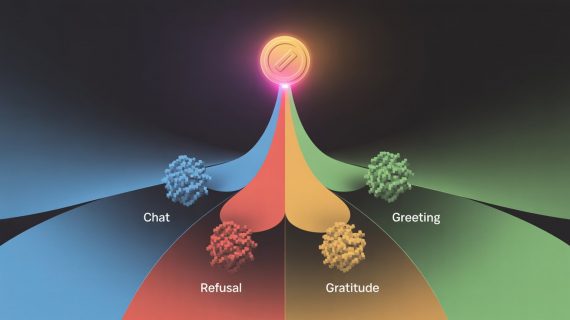

Which AI Can Play a Villain: Comparing Alignment Algorithms Across 17 Models

13 November 2025

Researchers from Tencent Multimodal Department and Sun Yat-Sen University published a study on how large language models handle role-playing. It turns out that AI models are mediocre at role-playing: even…

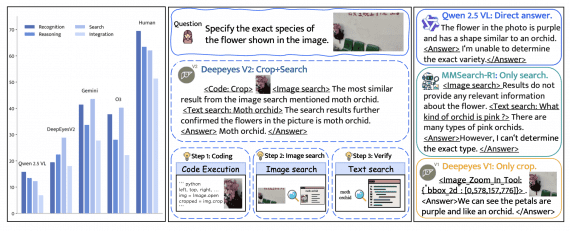

DeepEyesV2: Multimodal Model Learns to Use Tools to Solve Complex Tasks

12 November 2025

Researchers from Xiaohongshu introduced DeepEyesV2 — an agentic multimodal model based on Qwen2.5-VL-7B that can not only understand text and images but also actively use external tools: execute Python code…

Remote Labor Index: Top AI Agents Successfully Complete 2.5% of Freelance Projects

4 November 2025

A team of researchers from the Center for AI Safety and Scale AI published the Remote Labor Index (RLI) — the first benchmark that tests whether AI agents can perform…

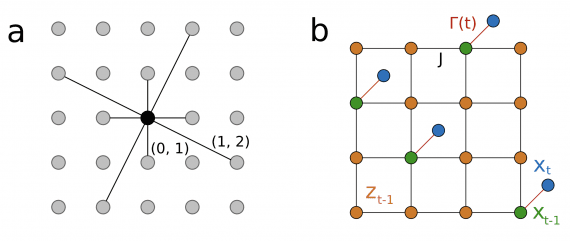

DTM: New Hardware Architecture Reduces Energy Consumption by 10,000x Compared to GPUs

1 November 2025

Researchers from Extropic Corporation presented an efficient hardware architecture for probabilistic computing based on Denoising Thermodynamic Models (DTM). Analysis shows that devices based on this architecture could achieve performance parity…

From Millions Spent on “Thank You” to Efficient Inference: Boilerplate Detection in a Single Token

31 October 2025

Researchers from JFrog published a study demonstrating a method for early detection of boilerplate responses in large language models after generating just a single token. The method enables computational cost…

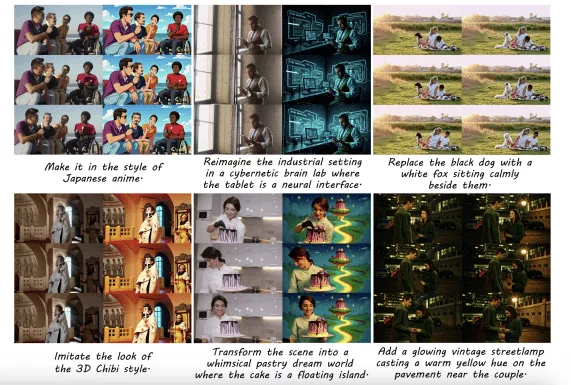

Ditto: Open Framework for Text-Instruction-Based Video Style and Object Editing with 99% Frame Consistency

24 October 2025

Researchers from HKUST, Ant Group, Zhejiang University, and Northeastern University introduced Ditto — a comprehensive open framework addressing the training data scarcity problem in text-instruction-based video editing. The developers created…

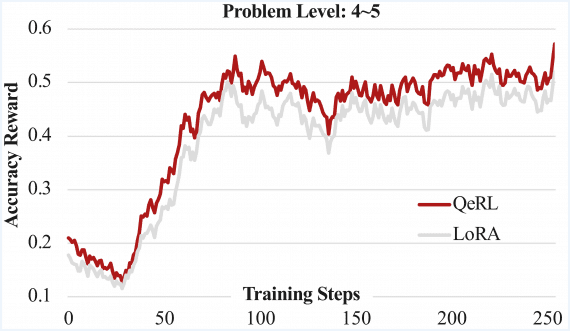

QeRL: Training 32B Models on a Single H100 Instead of Three GPUs, Beating LoRA in Accuracy

16 October 2025

QeRL is a framework for training language models using reinforcement learning that simultaneously reduces GPU requirements and surpasses traditional LoRA and QLoRA methods in accuracy. On the Qwen2.5-7B-Instruct model, QeRL…

Kimi-K2 and Qwen3-235B-Ins – Best AI Models for Stock Trading, Chinese Researchers Found

10 October 2025

Researchers from China conducted a large-scale comparison of AI capabilities for stock trading using real market data. AI agents managed a portfolio of 20 Dow Jones Index stocks over 4…

MinerU2.5: Open-Source 1.2B Model for PDF Parsing Outperforms Gemini 2.5 Pro on Benchmarks

2 October 2025

MinerU2.5 is a compact vision-language model with 1.2 billion parameters for PDF parsing, introduced by the Shanghai Artificial Intelligence Laboratory team. The model achieves state-of-the-art results in PDF parsing with…