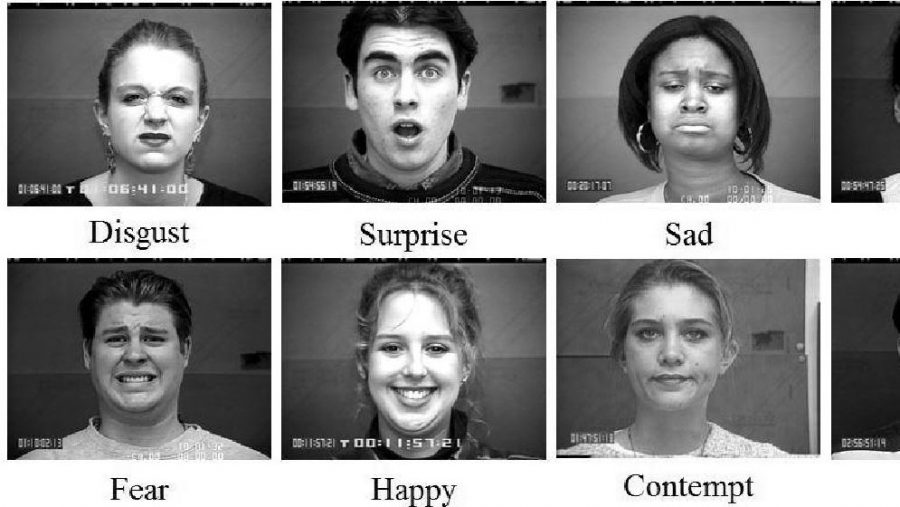

ThoughtWorks, a global technology company focused mainly on software development, has open-sourced EmoPy, a Python toolkit for emotion recognition. Created as part of ThoughtWorks Arts, a program that incubates artists investigating the intersections of technology and society, EmoPy is a complete solution for Facial Expression Recognition (FER) based on deep neural network models.

According to Angelica Perez, project leader at ThoughtWorks, the need to create such a system emerged with the development of an interactive film experience system called RIOT.

“As we developed new features for RIOT, we generated new requirements for EmoPy, which was created in tandem as a standalone emotion recognition toolkit,” said Perez.

The system was built from scratch, inspired initially by the research of Dr. Hongying Meng. Dr. Meng’s time-delay neural network approach has been approximated in the TimeDelayConvNN architecture included in the toolkit.

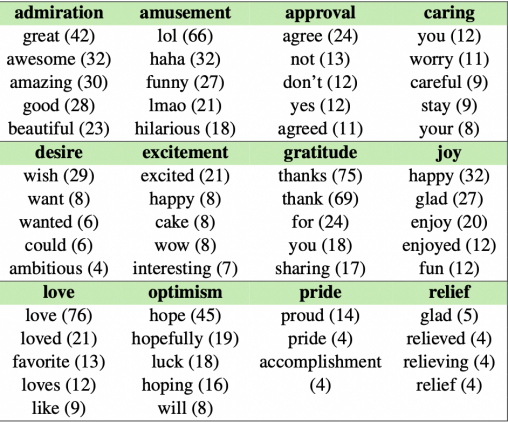

EmoPy was open-sourced with the aim of growing into a large-scale project that makes accurate Facial Expression Recognition (FER) models free, open, easy to use, and easy to integrate into different projects. Initially, only a few models were trained and included as part of EmoPy. According to EmoPy’s developers, the best-performing architecture was a convolutional neural network. The current models were trained on the FER2013 and Extended Cohn-Kanade datasets.

The EmoPy toolkit was released under an unrestrictive license to enable the widest possible access. Because the project is open source, ThoughtWorks expects other contributors to enrich the toolkit with more models and features.

More about the development process can be found in ThoughtWorks’s blog post. The project is hosted on GitHub, and this article provides a general overview of human facial expression recognition.