The European Union is finalizing the “AI Act,” the world’s first comprehensive regulatory framework governing the use of artificial intelligence (AI). The European Parliament has approved its version of the draft law by 499 votes in favor, 28 against, and 93 abstentions. The Act sets obligations for model providers such as OpenAI and Google and is expected to be enacted by the end of 2023.

An Overview of the Act’s Provisions

The draft is a 349-page document that sets out the regulation’s objectives, explains how it fits into the existing legal framework, and lists the requirements for AI model providers.

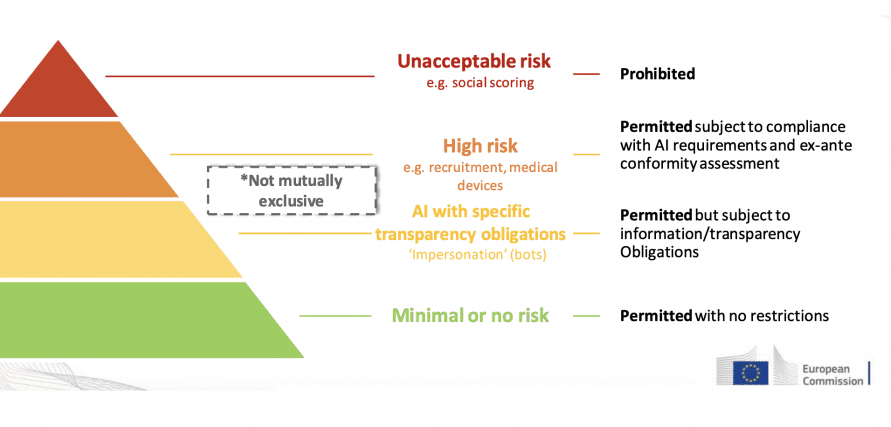

Levels of Risk

AI systems will be classified into four risk levels:

- Minimal (e.g., AI in video games, spam filters) – no new restrictions are imposed;

- Limited (e.g., generative models such as chatbots, deepfake generators, and image generators) – developers will be required to inform users that they are interacting with AI rather than a human and to disclose the data used to train their models;

- High (e.g., autonomous vehicles, healthcare, recruitment, law, education) – these systems will be subject to transparent expert risk assessments, may be trained only on datasets that meet quality requirements, must keep activity logs, and must provide comprehensive documentation and clear information for users;

- Unacceptable (e.g., social scoring) – systems such as China’s social credit system will be banned in the European Union.

Implications for AI Developers

All AI model developers will be required to:

- Register: AI providers, especially those whose systems are classified as high-risk, must complete mandatory registration to operate in the European Union;

- Ensure transparency and reliability: users must be informed that they are dealing with AI and must retain control over their data and decisions;

- Protect the rights and safety of citizens: prevent discrimination and unwarranted intrusions into privacy. The Act also aims to ensure that AI is safe and to prevent its misuse;

- Face penalties for non-compliance: failure to meet the requirements may result in fines of up to €20,000,000 or 4% of the company’s worldwide annual turnover, whichever is higher.
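To make the penalty ceiling concrete, the sketch below computes the maximum fine for a given annual turnover. It assumes that the applicable cap is whichever is higher of the fixed €20,000,000 amount and 4% of worldwide annual turnover. The function name `max_fine_eur` is hypothetical, and any other penalty tiers in the Act are not modeled.

```python
def max_fine_eur(annual_turnover_eur: int) -> int:
    """Return the upper bound of a fine, in whole euros, under one assumed tier.

    Assumption: the cap is the higher of a fixed EUR 20,000,000 and 4% of the
    company's worldwide annual turnover. Other penalty tiers are not modeled.
    """
    fixed_cap = 20_000_000
    # Integer arithmetic avoids floating-point rounding; fractions of a euro are dropped.
    turnover_cap = annual_turnover_eur * 4 // 100
    return max(fixed_cap, turnover_cap)


# EUR 100 million turnover: 4% is EUR 4 million, so the fixed cap applies.
print(max_fine_eur(100_000_000))
# EUR 1 billion turnover: 4% is EUR 40 million, which exceeds the fixed cap.
print(max_fine_eur(1_000_000_000))
```

Under this assumption, the turnover-based cap overtakes the fixed amount once annual turnover exceeds €500 million.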

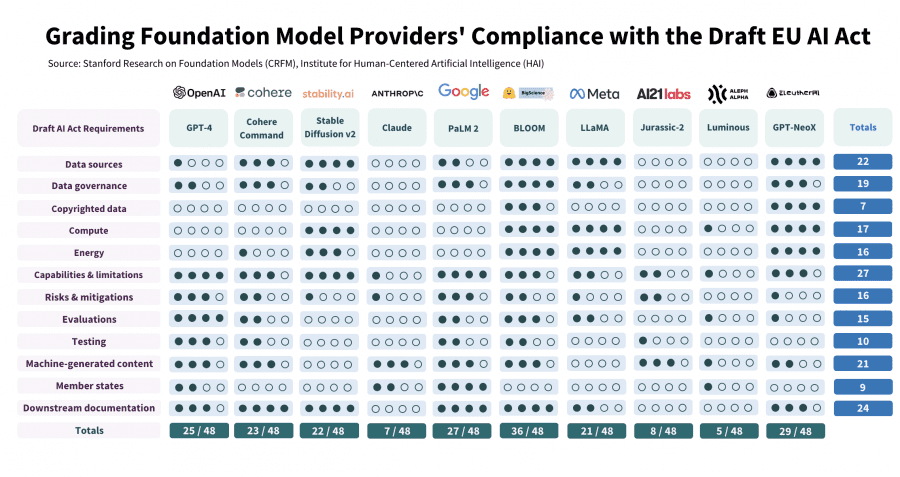

How Far Are Developers from Meeting the Requirements?

Researchers from Stanford University evaluated ten leading foundation models against 12 key requirements of the draft EU AI Act, scoring each requirement from 0 to 4 for a maximum of 48 points. Most models scored below 50% of the maximum.

Among closed-source models, OpenAI’s GPT-4 scored 25 out of 48 possible points, Google’s PaLM 2 scored 27, and Cohere’s Command LLM received just 23. Anthropic’s Claude ranked second to last with only 7 points.

The open-source model BLOOM by Hugging Face performed the best, scoring 36 points. However, other open-source models such as LLaMA and Stable Diffusion v2 scored only 21 and 22 points, respectively.
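The scores are easier to compare as shares of the maximum. The sketch below assumes the rubric described above (12 requirements, each scored from 0 to 4, for 48 points) and includes only the seven models named in this article. The study's three other models are omitted because their scores are not given here. The names `scores`, `share_of_max`, and `below_half` are illustrative.

```python
# Rubric assumed from the text: 12 requirements, each scored 0-4.
MAX_POINTS = 12 * 4

# Only the seven models whose scores are quoted in this article.
scores = {
    "BLOOM": 36,
    "PaLM 2": 27,
    "GPT-4": 25,
    "Command LLM": 23,
    "Stable Diffusion v2": 22,
    "LLaMA": 21,
    "Claude": 7,
}


def share_of_max(points: int) -> float:
    """Return a score as a percentage of the 48-point maximum."""
    return 100 * points / MAX_POINTS


# Print the models from highest to lowest score with their percentage.
for name, pts in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<20} {pts:>2}/{MAX_POINTS}  {share_of_max(pts):5.1f}%")

# Models strictly below the halfway mark of 24 points.
below_half = [name for name, pts in scores.items() if pts < MAX_POINTS / 2]
print("Below 50%:", ", ".join(below_half))
```

Four of the seven named models (Command LLM, Stable Diffusion v2, LLaMA, and Claude) fall below the 24-point halfway mark, while GPT-4 and PaLM 2 land just above it.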

Conclusion

A notable trend emerged from the overall results: open-source models generally outperformed closed-source models in disclosing their data sources and energy consumption, while closed-source models complied better with requirements for comprehensive documentation and risk control.

Researchers caution that a lack of technical expertise may hinder the EU’s ability to regulate these models effectively. Despite these concerns, they support the implementation of the EU AI Act, arguing that it will “encourage AI creators to collectively establish industry standards that enhance transparency” and lead to “positive changes in the foundation model ecosystem.”