Researchers from Facebook AI Research (FAIR) have published a new method called DeepFovea that uses deep learning for foveated rendering in augmented and virtual reality displays.

Foveated rendering is a technique that uses eye tracking to reduce the rendering workload in virtual or augmented reality systems. It keeps full quality in the region the viewer is looking at and lowers image quality only in the peripheral parts of the image, where the eye is less sensitive to detail.
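
As a rough illustration of the idea, the sketch below builds a sampling mask in which every pixel near the gaze point is rendered and the probability of rendering a pixel decays with distance from it. The function names, the exponential falloff, and all parameter values are assumptions chosen for illustration; they are not taken from the DeepFovea paper.

```python
import math
import random


def foveated_mask(width, height, gaze_x, gaze_y,
                  fovea_radius=20.0, falloff=0.05, min_density=0.01, seed=0):
    """Return a height x width grid of booleans; True marks a rendered pixel.

    Hypothetical model: full density inside `fovea_radius`, then an
    exponential decay with distance from the gaze point, never dropping
    below `min_density`.
    """
    rng = random.Random(seed)
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Pixel distance from the gaze point, a stand-in for eccentricity.
            dist = math.hypot(x - gaze_x, y - gaze_y)
            if dist <= fovea_radius:
                density = 1.0
            else:
                density = max(min_density,
                              math.exp(-falloff * (dist - fovea_radius)))
            # Keep the pixel with probability equal to the local density.
            row.append(rng.random() < density)
        mask.append(row)
    return mask


def kept_fraction(mask):
    """Fraction of pixels that the mask marks as rendered."""
    total = sum(len(row) for row in mask)
    kept = sum(sum(row) for row in mask)
    return kept / total


# Gaze at the centre of a hypothetical 200x200 frame.
mask = foveated_mask(200, 200, gaze_x=100, gaze_y=100)
print(f"rendered fraction: {kept_fraction(mask):.3f}")
```

In a real system the gaze point would come from the eye tracker on every frame, so the mask moves with the viewer's eyes.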

Techniques of this kind have been used successfully in the past, and they offer several benefits, especially for delivering AR and VR on mobile devices. In their paper, researchers from Facebook AI led by Anton Kaplanyan propose a new reconstruction method called DeepFovea, which learns to reconstruct peripheral video from a small fraction of the pixels.

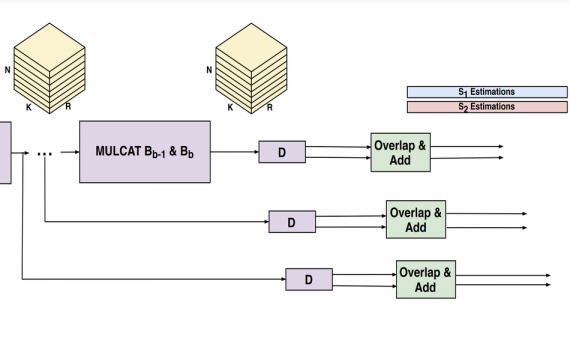

The method uses Generative Adversarial Networks to generate realistic and perceptually consistent content from input sequences with decreased pixel density. In a way, the network learns to “hallucinate” the missing details, which mostly lie in the peripheral parts of the image, similar to what our brains do. The results show that the network is able to produce natural-looking video reconstructions from a pixel stream whose density has been reduced by 99%.
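
To give a feel for what a 99% reduction in pixel density leaves behind, the sketch below keeps 1% of the pixels of a small synthetic frame and fills the gaps by copying the nearest kept sample. This is explicitly not the DeepFovea method: the nearest-neighbour fill is a naive baseline swapped in for the GAN, the uniform random sampling ignores gaze, and the helper names and the 64x64 frame are hypothetical.

```python
import random


def sparsify(frame, keep_fraction=0.01, seed=0):
    """Keep a random fraction of pixels; return {(x, y): value} for those kept.

    Assumption: uniform random sampling, used here only for simplicity.
    """
    rng = random.Random(seed)
    height, width = len(frame), len(frame[0])
    coords = [(x, y) for y in range(height) for x in range(width)]
    n_keep = max(1, round(keep_fraction * len(coords)))
    return {(x, y): frame[y][x] for (x, y) in rng.sample(coords, n_keep)}


def nearest_fill(samples, width, height):
    """Naive baseline: copy each pixel from its nearest kept sample."""
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            nearest = min(
                samples,
                key=lambda p: (p[0] - x) ** 2 + (p[1] - y) ** 2,
            )
            row.append(samples[nearest])
        out.append(row)
    return out


# Hypothetical 64x64 grayscale frame containing a horizontal gradient.
frame = [[x / 63 for x in range(64)] for _ in range(64)]
samples = sparsify(frame)                 # 1% of 4096 pixels -> 41 samples
restored = nearest_fill(samples, 64, 64)  # blocky, piecewise-constant result
```

A fill like this produces a blocky, piecewise-constant image. The learned reconstruction described above instead aims to synthesize plausible detail in the gaps.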

The new system is hardware-agnostic, since it performs foveated reconstruction directly on raw pixel data. According to the researchers, DeepFovea will be of particular use in AR and VR technologies, as it can decrease the amount of computing resources needed by as much as 10 to 14 times while preserving perceptual quality.

More about the method can be read in the official blog post or in the paper. The implementation of the method will be open-sourced and available soon.