Google AI has developed a machine-learning method for accurately tracking eye gaze with an ordinary smartphone. The neural network could open up new possibilities in the diagnosis of autism spectrum disorders, dyslexia, concussions and strokes.

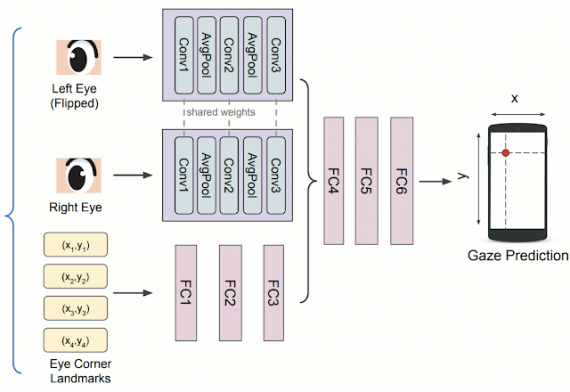

Beyond medicine, eye-movement analysis can be useful in areas such as user experience research, gaming, and driving. However, progress in this field has so far been limited by the need for specialized hardware eye trackers, which are expensive. The paper, published in the journal Nature Communications, presents a multi-layer convolutional neural network (ConvNet) trained on the MIT GazeCapture dataset (Figure 1). Eye regions extracted from the front-facing camera image serve as input to the ConvNet. Fully connected layers combine its output with the detected eye corner landmarks to infer the x and y coordinates of the gaze point on the screen through a multi-regression output layer. The model's accuracy was further improved by fine-tuning and per-participant personalization.
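To make the data flow of this architecture concrete, here is a minimal NumPy sketch of a forward pass: each eye crop goes through a small convolutional "tower", the flattened features of both eyes are concatenated with the eye corner landmark coordinates, and fully connected layers regress an on-screen (x, y) point. Everything specific here is a hypothetical assumption, not the paper's model: the 16x16 RGB crop size, two 3x3 conv layers with 8 filters, shared weights for both eyes, four corner landmarks, a 64-unit hidden layer, and random untrained weights (training on GazeCapture is not shown).

```python
import numpy as np


def conv2d_valid(x, w, b):
    """Naive 'valid' 2-D cross-correlation, as used in ConvNet layers.

    x: (C_in, H, W), w: (C_out, C_in, k, k), b: (C_out,)
    returns: (C_out, H - k + 1, W - k + 1)
    """
    c_out, _, k, _ = w.shape
    out_h, out_w = x.shape[1] - k + 1, x.shape[2] - k + 1
    out = np.empty((c_out, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[:, i:i + k, j:j + k]  # (C_in, k, k)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out


def relu(x):
    return np.maximum(x, 0.0)


def init_params(rng, c_in=3, n_filters=8, k=3, crop=16, n_corners=4, hidden=64):
    """Random (untrained) weights; all layer sizes are hypothetical."""
    def conv(ci, co):
        return rng.normal(0.0, 1.0 / np.sqrt(ci * k * k), (co, ci, k, k)), np.zeros(co)

    def dense(n_in, n_out):
        return rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_out)), np.zeros(n_out)

    side = crop - 2 * (k - 1)           # spatial size after two valid convolutions
    eye_feat = n_filters * side * side  # flattened features per eye
    return {
        "conv1": conv(c_in, n_filters),
        "conv2": conv(n_filters, n_filters),
        "fc1": dense(2 * eye_feat + 2 * n_corners, hidden),  # both eyes + (x, y) per corner
        "out": dense(hidden, 2),                             # regression head: (x, y)
    }


def eye_tower(eye, params):
    """Two conv + ReLU layers, flattened. Both eyes share these weights (an assumption)."""
    h = relu(conv2d_valid(eye, *params["conv1"]))
    h = relu(conv2d_valid(h, *params["conv2"]))
    return h.ravel()


def predict_gaze(left_eye, right_eye, eye_corners, params):
    """Fuse eye-crop features with eye corner landmarks and regress an on-screen (x, y)."""
    feats = np.concatenate([
        eye_tower(left_eye, params),
        eye_tower(right_eye, params),
        eye_corners.ravel(),
    ])
    w1, b1 = params["fc1"]
    w2, b2 = params["out"]
    hidden = relu(feats @ w1 + b1)
    return hidden @ w2 + b2


rng = np.random.default_rng(0)
params = init_params(rng)
left_eye = rng.random((3, 16, 16))   # stand-ins for RGB eye crops from the front camera
right_eye = rng.random((3, 16, 16))
eye_corners = rng.random((4, 2))     # 4 eye corner landmarks, normalized (x, y)
gaze_xy = predict_gaze(left_eye, right_eye, eye_corners, params)
print(gaze_xy.shape)  # (2,)
```

Sharing one tower between the two eyes keeps the parameter count down; whether the published model does this is not stated here, so treat it as a design choice of the sketch.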

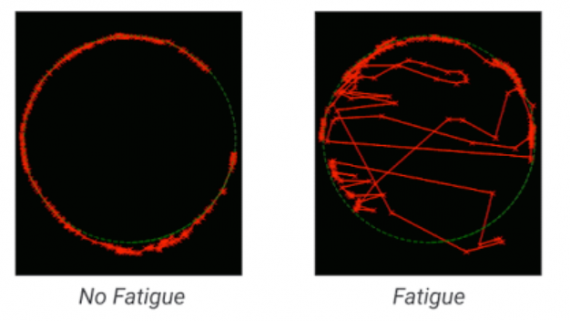

To evaluate the model, participants were asked to look at dots appearing at random locations on a blank screen. The model's error was computed as the distance between each dot's location and the model's predicted gaze point. The results show that while the base (non-personalized) model has a large error, personalization reduces that error by more than a factor of four. At a viewing distance of 25-40 cm, this corresponds to an accuracy of 0.6-1°, a significant improvement over the 2.4-3° reported in previous studies.
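The evaluation logic can be sketched in a few lines: the error is the mean Euclidean distance between dot locations and predictions, and an on-screen error e viewed from distance d subtends an angle of atan(e / d), which is why a single error value maps to a range of degrees over 25-40 cm. As a stand-in for personalization, the sketch fits a per-user affine correction by least squares on a few calibration dots; this is an assumption for illustration, not the paper's fine-tuning procedure. The screen size, distortion, and noise are made-up synthetic values, so the printed numbers do not reproduce the study's results.

```python
import numpy as np


def mean_error_cm(targets, preds):
    """Mean Euclidean distance (cm) between dot locations and predicted gaze points."""
    return float(np.mean(np.linalg.norm(targets - preds, axis=1)))


def error_to_degrees(error_cm, viewing_distance_cm):
    """Visual angle subtended by an on-screen error at a given viewing distance."""
    return float(np.degrees(np.arctan(error_cm / viewing_distance_cm)))


def fit_affine_calibration(preds, targets):
    """Least-squares affine map from predictions to true dot locations.

    A simple stand-in for per-participant personalization, not the paper's method.
    """
    X = np.hstack([preds, np.ones((len(preds), 1))])  # (n, 3): x, y, bias
    A, *_ = np.linalg.lstsq(X, targets, rcond=None)   # (3, 2)
    return A


def apply_calibration(preds, A):
    return np.hstack([preds, np.ones((len(preds), 1))]) @ A


# Synthetic session: dots on a hypothetical 6.5 x 13 cm phone screen.
rng = np.random.default_rng(42)
targets = rng.uniform([0.0, 0.0], [6.5, 13.0], size=(200, 2))

# Simulated base-model output: a per-user systematic distortion plus noise (made-up values).
distortion = np.array([[1.10, 0.05], [0.00, 0.90]])
offset = np.array([0.8, -1.0])
preds = targets @ distortion.T + offset + rng.normal(0.0, 0.2, targets.shape)

# Calibrate on the first 30 dots, evaluate on the remaining ones.
A = fit_affine_calibration(preds[:30], targets[:30])
error_before = mean_error_cm(targets[30:], preds[30:])
error_after = mean_error_cm(targets[30:], apply_calibration(preds[30:], A))

print(f"base: {error_before:.2f} cm, calibrated: {error_after:.2f} cm, "
      f"ratio: {error_before / error_after:.1f}x")
for d in (25, 40):
    print(f"at {d} cm: {error_to_degrees(error_after, d):.2f} deg")
```

Because the angle depends on viewing distance, the same on-screen error yields a larger angular error at 25 cm than at 40 cm, which is why the accuracy is quoted as a range.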

Additional experiments show that the model's accuracy on a smartphone is comparable to that of state-of-the-art wearable eye trackers, both when the phone is mounted on a stand and when it is held freely in the hand with the head in a near-frontal pose. Unlike specialized eye-tracking equipment with multiple infrared cameras near each eye, Google's model requires only a single front-facing RGB camera, making it more than 100 times cheaper and easy to scale.