Today, researchers from Google AI, in collaboration with DeepMind, have introduced “Dreamer”, a novel, state-of-the-art reinforcement learning (RL) agent that learns a world model and uses the model's long-horizon predictions to learn behaviors.

The new method follows the typical workflow of model-based reinforcement learning: the agent learns a world model from past experience, learns behaviors from the predictions of this world model, and interacts with the environment to collect new experience to learn from. Dreamer learns behaviors from the world model's predictions with two deep neural networks: an actor network and a value network. For the world model itself, Dreamer uses the one from PlaNet, a deep planning network that learns a dynamics model and was also developed at Google.
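The three-stage workflow can be sketched as a simple loop. This is a toy illustration under stated assumptions, not Dreamer's implementation: the "environment" is a hypothetical 1-D linear system, the world model is a least-squares fit of `s' ≈ a*s + b*u` rather than PlaNet's learned dynamics model, and behavior learning is a grid search over a linear feedback gain that merely stands in for the actor and value networks. All function names are hypothetical.

```python
import random

# Hypothetical "real" environment; the agent never reads these constants directly.
TRUE_A, TRUE_B = 0.9, 0.5


def env_step(s, u, rng):
    """Toy 1-D environment with a little transition noise."""
    return TRUE_A * s + TRUE_B * u + rng.gauss(0.0, 0.01)


def fit_world_model(transitions):
    """Process 1: fit s' ~ a*s + b*u by least squares (2x2 normal equations)."""
    sss = sum(s * s for s, u, _ in transitions)
    suu = sum(u * u for _, u, _ in transitions)
    ssu = sum(s * u for s, u, _ in transitions)
    sns = sum(s * n for s, _, n in transitions)
    snu = sum(u * n for _, u, n in transitions)
    det = sss * suu - ssu * ssu
    a = (sns * suu - snu * ssu) / det
    b = (snu * sss - sns * ssu) / det
    return a, b


def imagined_return(a, b, gain, s0=1.0, horizon=15):
    """Predicted return of the policy u = gain * s, computed only inside the model."""
    s, total = s0, 0.0
    for _ in range(horizon):
        u = gain * s
        total += -s * s - 0.1 * u * u  # hypothetical reward: keep s near 0 cheaply
        s = a * s + b * u
    return total


def learn_behavior(a, b):
    """Process 2 (stand-in): choose the gain with the best imagined return.
    Dreamer instead trains actor and value networks by backpropagation."""
    candidates = [g / 10.0 for g in range(-30, 1)]
    return max(candidates, key=lambda g: imagined_return(a, b, g))


def collect_experience(gain, rng, steps=50, noise=0.3):
    """Process 3: act in the environment with exploration noise, record transitions."""
    transitions, s = [], rng.uniform(-1.0, 1.0)
    for _ in range(steps):
        u = gain * s + rng.gauss(0.0, noise)
        s_next = env_step(s, u, rng)
        transitions.append((s, u, s_next))
        s = s_next
    return transitions


def dreamer_style_loop(iterations=5, seed=0):
    rng = random.Random(seed)
    dataset = collect_experience(0.0, rng)  # initial, purely exploratory data
    gain = 0.0
    for _ in range(iterations):
        a, b = fit_world_model(dataset)           # learn the world model
        gain = learn_behavior(a, b)               # learn behavior from predictions
        dataset += collect_experience(gain, rng)  # interact and grow the dataset
    return fit_world_model(dataset), gain
```

Running `dreamer_style_loop()` returns fitted model parameters close to the hidden `TRUE_A`/`TRUE_B` and a negative gain that drives the state toward zero. The point of the sketch is only the ordering of the three processes; each stage is far simpler than its counterpart in Dreamer.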

To train the actor and value networks, the researchers backpropagate through the predictions of Dreamer's world model. This lets the actor learn how small changes to its actions affect the rewards predicted further in the future. This training scheme allows the agent to learn efficiently from thousands of predicted samples in parallel using only a single GPU. The three processes of the Dreamer agent (learning the world model, learning behaviors, and acting in the environment) can also be executed in parallel, which speeds up training significantly.
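To make "backpropagating through the world model's predictions" concrete, here is a minimal sketch in plain Python. It assumes a hypothetical scalar world model `s' = A*s + B*u`, a linear actor `u = k*s`, a quadratic reward, and a fixed quadratic `V(s)` standing in for the value network (in Dreamer, the value network estimates the rewards beyond the imagination horizon). The gradient of the imagined return with respect to `k` is computed by a hand-written reverse pass through the imagined trajectory; Dreamer itself uses automatic differentiation through neural networks over many trajectories at once.

```python
# Every quantity here is hypothetical: a 1-D linear world model, a linear
# actor u = k * s, a quadratic reward, and a fixed quadratic V(s) standing in
# for the learned value network.

A, B = 0.9, 0.5   # hypothetical (already learned) world-model parameters
C = 0.1           # weight of the action cost in the hypothetical reward
V_WEIGHT = 1.0    # stand-in value beyond the horizon: V(s) = -V_WEIGHT * s**2


def rollout(k, s0, horizon):
    """Imagine a trajectory with the world model; return (J, states, actions)."""
    s, total = s0, 0.0
    states, actions = [s0], []
    for _ in range(horizon):
        u = k * s                    # actor output
        total += -s * s - C * u * u  # predicted reward r(s, u)
        actions.append(u)
        s = A * s + B * u            # world-model prediction of the next state
        states.append(s)
    total += -V_WEIGHT * s * s       # value estimate for rewards after the horizon
    return total, states, actions


def grad_wrt_gain(k, s0, horizon):
    """dJ/dk via a hand-written reverse (backpropagation) pass through the rollout."""
    _, states, actions = rollout(k, s0, horizon)
    lam = -2.0 * V_WEIGHT * states[-1]        # dJ/ds_H, from the value term
    dk = 0.0
    for t in reversed(range(horizon)):
        s, u = states[t], actions[t]
        dJ_du = -2.0 * C * u + B * lam        # direct reward effect + effect via s_{t+1}
        dk += dJ_du * s                       # u_t = k * s_t, so du_t/dk = s_t
        lam = -2.0 * s + A * lam + k * dJ_du  # dJ/ds_t, passed one step further back
    return dk


def train_actor(k=0.0, s0=1.0, horizon=15, lr=0.05, steps=300):
    """Gradient ascent on the imagined return J(k)."""
    for _ in range(steps):
        k += lr * grad_wrt_gain(k, s0, horizon)
    return k
```

Calling `train_actor()` moves the gain from 0 to a negative value that keeps the imagined state near zero at a small action cost, and a finite-difference check of `rollout` agrees with `grad_wrt_gain`. The `B * lam` term is where the world model enters the gradient: it carries the effect of one action on every reward predicted after it.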

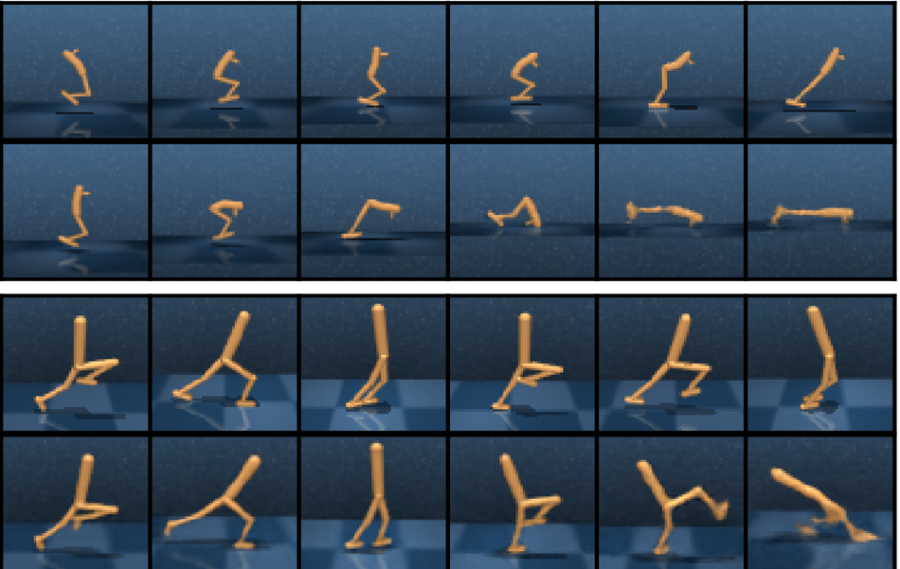

The researchers evaluated the new agent on 20 diverse control tasks, such as locomotion, balancing, and catching objects. In these tasks the agent receives images as input and outputs continuous actions. Dreamer was compared with existing model-based and model-free methods, including PlaNet, A3C, and D4PG. The evaluations show that Dreamer outperforms D4PG, the state-of-the-art model-free agent, even with 20 times fewer interactions with the environment. It also outperforms the best model-based agents on all of the evaluated tasks.

More details about the new state-of-the-art reinforcement learning agent can be found in Google's release blog post or in the paper published on arXiv.