Researchers from Google, in collaboration with researchers from Synthesis AI and Columbia University, have developed a new method that can detect transparent objects.

Detecting transparent objects is a very challenging problem for both 2D and 3D sensors and methods. Deep neural networks, especially convolutional neural networks, help to detect transparent objects in an image, at least to some extent. However, optical 3D sensors such as RGB-D cameras and LIDAR sensors are not able to detect transparent objects, because these sensors rely on light-reflection assumptions that transparent objects in the scene violate.

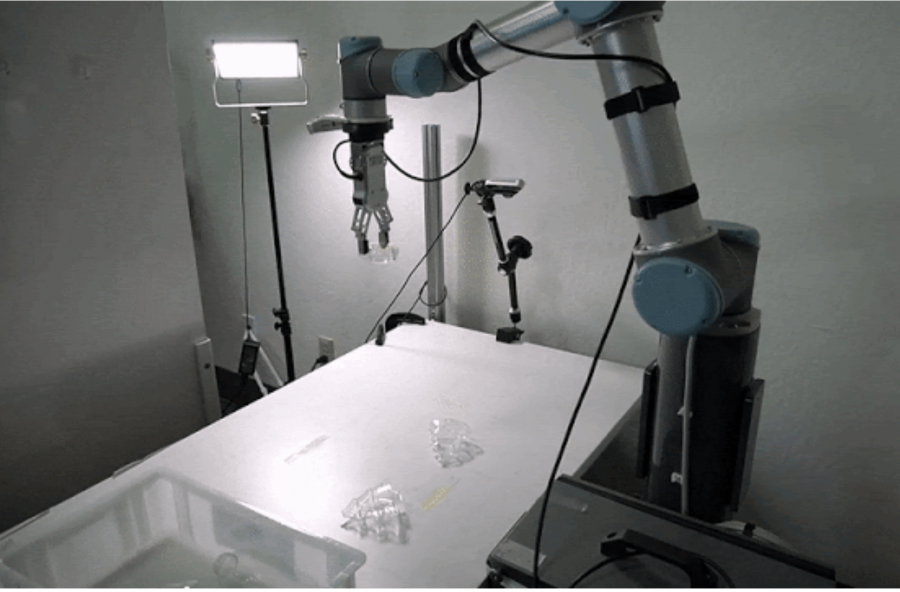

To overcome this problem, the researchers joined forces to develop a machine learning algorithm that detects transparent objects and provides accurate 3D models of them from RGB-D camera data. They first collected a large-scale synthetic dataset of more than 50,000 photo-realistic samples of transparent objects. The dataset contains several types of information about each depicted scene, including synthetic depth, surface normals, segmentation masks, and edges.

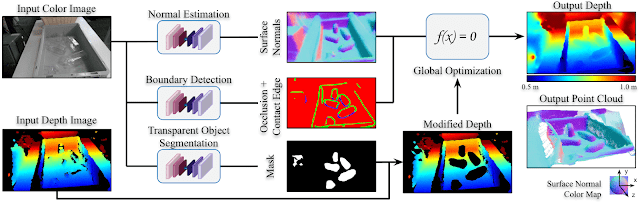

Using this dataset, the researchers developed a method called ClearGrasp, which uses deep neural networks to accurately estimate the depth of transparent objects from RGB-D camera data. The architecture involves three neural networks: a surface normal estimation network, a boundary detection network, and a transparent object segmentation network. These networks output surface normals, edges, and transparent object masks, respectively. The outputs are combined and passed into a global optimization step, which estimates the depth of the transparent objects based on the depth and surface normals of the objects present in the scene.
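
To make the last step more concrete, here is a minimal sketch of how a mask and surface normals could be combined to fill in missing depth. It is not the ClearGrasp implementation: the function names `normals_to_gradients` and `complete_depth` are hypothetical, the boundary output is left out, the conversion from normals to depth gradients assumes a simplified orthographic camera, and the optimization is a plain Jacobi least-squares solver chosen for brevity. It also assumes the masked region does not touch the image border.

```python
import numpy as np

def normals_to_gradients(normals):
    """Convert unit normals (H, W, 3) to depth gradients.

    Assumption: orthographic camera, so a surface z = f(x, y) has
    normal proportional to (-df/dx, -df/dy, 1).
    """
    nz = normals[..., 2]
    return -normals[..., 0] / nz, -normals[..., 1] / nz

def complete_depth(depth, mask, gx, gy, iters=500):
    """Fill depth where mask is True so that its finite differences
    match (gx, gy), keeping depth outside the mask fixed.

    gx[i, j] targets d[i, j+1] - d[i, j]; gy[i, j] targets d[i+1, j] - d[i, j].
    Each Jacobi sweep averages the four predictions made by a pixel's neighbors.
    """
    d = depth.astype(float)
    d[mask] = d[~mask].mean()  # initial guess; sensor depth is discarded here
    for _ in range(iters):
        left = np.roll(d, 1, axis=1) + np.roll(gx, 1, axis=1)
        right = np.roll(d, -1, axis=1) - gx
        up = np.roll(d, 1, axis=0) + np.roll(gy, 1, axis=0)
        down = np.roll(d, -1, axis=0) - gy
        estimate = (left + right + up + down) / 4.0
        d[mask] = estimate[mask]  # only transparent pixels are updated
    return d

# Toy scene: a tilted plane whose central patch is "transparent".
h, w = 10, 10
ys, xs = np.mgrid[0:h, 0:w]
true_depth = 1.0 + 0.02 * xs + 0.01 * ys

mask = np.zeros((h, w), dtype=bool)
mask[3:7, 3:7] = True  # stand-in for the segmentation network output

sensor_depth = true_depth.copy()
sensor_depth[mask] = 0.0  # the sensor returns nothing useful here

n = np.array([-0.02, -0.01, 1.0])
n /= np.linalg.norm(n)
normals = np.broadcast_to(n, (h, w, 3))  # stand-in for the normals network output

gx, gy = normals_to_gradients(normals)
completed = complete_depth(sensor_depth, mask, gx, gy)
```

In this toy case the normals are exact, so the filled-in patch converges to the original plane. With predicted normals, the result would only be as good as the network outputs feeding the optimization.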

All of the networks were trained on the newly created synthetic dataset. The researchers evaluated the method, and the results showed that it outperforms existing alternative methods for depth estimation of transparent objects. They report that the method generalizes well to real-world images, despite being trained only on synthetic data.

More details about the ClearGrasp method can be found in the paper published on arXiv and in the official blog post. The dataset has been open-sourced.