Researchers from Google Research have proposed a new method for generating realistic, high-fidelity natural videos.

Over the past several years, generative models such as GANs (generative adversarial networks) and VAEs (variational autoencoders) have made steady progress toward generating realistic images.

However, video data is considerably more complex than still images, and these models have struggled to generate realistic videos. Attempts to make generative models work well on video ran into several problems, and the complex methods researchers designed to tackle them fell short most of the time.

In a new paper titled “Scaling Autoregressive Video Models”, researchers from Google propose an autoregressive model that can generate realistic videos.

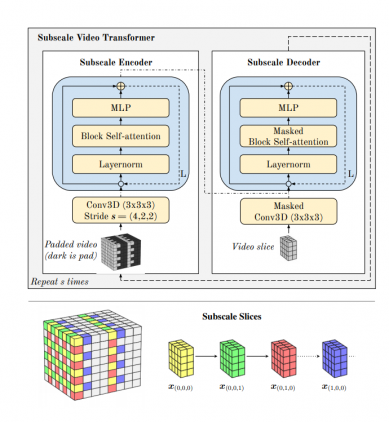

The proposed model is a simple autoregressive video generation model based on a 3D self-attention mechanism. The researchers generalized the (originally) one-dimensional Transformer model to video: they represent each video as a three-dimensional spatio-temporal volume and apply self-attention across it.
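To make the idea more concrete, here is a minimal NumPy sketch of causal self-attention over a spatio-temporal volume. It is an illustration under stated assumptions, not the paper's implementation: it uses a single attention head, full attention over every position, and a plain raster-scan ordering, whereas the actual architecture is more elaborate and is not reproduced here. The function name `causal_self_attention_3d` and the weight matrices `w_q`, `w_k`, `w_v` are hypothetical names chosen for this example.

```python
import numpy as np


def causal_self_attention_3d(video, w_q, w_k, w_v):
    """Single-head causal self-attention over a (T, H, W, C) feature volume.

    Illustrative sketch only: positions are flattened in raster-scan order
    (time, then height, then width), and each position may attend only to
    itself and to positions that come earlier in that order.
    """
    t, h, w, c = video.shape
    x = video.reshape(t * h * w, c)            # flatten the 3D volume to a sequence

    q, k, v = x @ w_q, x @ w_k, x @ w_v        # linear projections
    scores = q @ k.T / np.sqrt(q.shape[-1])    # scaled dot-product scores

    n = x.shape[0]
    allowed = np.tril(np.ones((n, n), dtype=bool))   # lower triangle = current and past
    scores = np.where(allowed, scores, -np.inf)      # hide future positions

    # Numerically stable softmax over each row.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)

    out = weights @ v
    return out.reshape(t, h, w, -1)            # restore the spatio-temporal layout


# Example: 2 frames of 3x3 positions with 4 features each (random toy data).
rng = np.random.default_rng(0)
toy_video = rng.normal(size=(2, 3, 3, 4))
toy_w_q, toy_w_k, toy_w_v = (rng.normal(size=(4, 8)) for _ in range(3))
toy_out = causal_self_attention_3d(toy_video, toy_w_q, toy_w_k, toy_w_v)
print(toy_out.shape)
```

The causal mask is what makes the sketch autoregressive: the output at a given position depends only on that position and the ones before it in the chosen ordering. In a real generative model, the inputs would typically also be shifted so that each position is predicted from strictly earlier values. That step is omitted here.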

The researchers evaluated the proposed model on two datasets: the BAIR Robot Pushing dataset and the YouTube-derived Kinetics dataset. The results showed that the scaled autoregressive model can produce diverse and realistic frames as continuations of videos from the Kinetics dataset. According to the researchers, this work represents the first application of video generation models to high-complexity (natural) videos.