Researchers from Universitat Politècnica de Catalunya and Facebook AI Research (FAIR) have proposed a method that, given a food image, generates the corresponding recipe as a sequence of cooking instructions.

Arguing that food recognition is challenging because of high intra-class variability and the heavy deformations food undergoes during cooking, the researchers proposed a deep-learning-based method that first predicts the ingredients and then generates the cooking instructions.

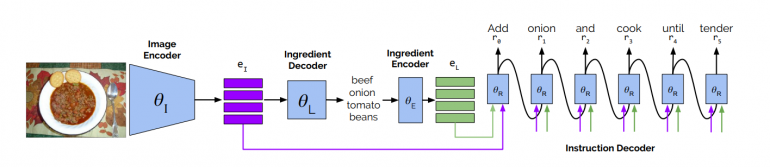

The proposed method consists of four modules: an image encoder, an ingredient decoder, an ingredient encoder and an instruction decoder. The image encoder extracts rich visual features from the input image by embedding it into a latent feature space. The ingredient decoder takes these image embeddings as input and predicts the ingredients. The predicted ingredients are then passed to the ingredient encoder, which produces an embedding for each of them. Finally, the instruction decoder, attending to both the image features and the ingredient embeddings, outputs a recipe title along with a sequence of cooking steps.
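The data flow through the four modules can be sketched in plain Python. This is a toy illustration under loudly stated assumptions, not the authors' implementation: every module is a hypothetical stand-in for a neural network. The image encoder averages RGB channels instead of running a CNN, the ingredient decoder thresholds independent per-ingredient scores instead of predicting a set, and the instruction decoder fills a fixed template instead of generating text token by token (it also ignores the image features that the real decoder attends to). The vocabulary, weights, embeddings and all function names (`VOCAB`, `DECODER_WEIGHTS`, `EMBEDDINGS`, `generate_recipe`, ...) are invented for this example.

```python
import math
from typing import Dict, List, Sequence, Tuple

Pixel = Tuple[float, float, float]

# Hypothetical toy ingredient vocabulary; the real model uses a much larger learned one.
VOCAB = ["flour", "egg", "tomato", "basil", "cheese"]

# Hypothetical "learned" ingredient-decoder weights: one weight per image feature.
DECODER_WEIGHTS: Dict[str, List[float]] = {
    "flour": [-1.0, -1.0, 3.0],
    "egg": [-2.0, 2.0, 2.0],
    "tomato": [4.0, -2.0, -2.0],
    "basil": [-1.0, 3.0, -1.0],
    "cheese": [2.0, 1.0, -3.0],
}

# Hypothetical embedding table used by the ingredient encoder.
EMBEDDINGS: Dict[str, List[float]] = {
    "flour": [1.0, 0.0],
    "egg": [0.0, 1.0],
    "tomato": [1.0, 1.0],
    "basil": [-1.0, 1.0],
    "cheese": [1.0, -1.0],
}


def image_encoder(pixels: Sequence[Pixel]) -> List[float]:
    """Stand-in for the CNN: the 'feature vector' is the mean of each RGB channel."""
    n = len(pixels)
    return [sum(p[c] for p in pixels) / n for c in range(3)]


def ingredient_decoder(features: List[float], threshold: float = 0.5) -> List[str]:
    """Scores each ingredient independently (sigmoid of a dot product) and keeps
    those above the threshold. A multi-label simplification, not set prediction."""
    predicted = []
    for name in VOCAB:
        logit = sum(w * f for w, f in zip(DECODER_WEIGHTS[name], features))
        if 1.0 / (1.0 + math.exp(-logit)) > threshold:
            predicted.append(name)
    return predicted


def ingredient_encoder(ingredients: List[str]) -> List[List[float]]:
    """Maps each predicted ingredient to its embedding vector."""
    return [EMBEDDINGS[name] for name in ingredients]


def instruction_decoder(ingredient_embs: List[List[float]]) -> Dict[str, object]:
    """Stand-in for the text decoder: maps each embedding back to the nearest
    vocabulary entry, then fills a fixed title/steps template."""
    def nearest(vec: List[float]) -> str:
        return min(EMBEDDINGS, key=lambda k: sum((a - b) ** 2 for a, b in zip(EMBEDDINGS[k], vec)))

    names = [nearest(e) for e in ingredient_embs]
    title = " and ".join(n.capitalize() for n in names) + " dish"
    steps = [f"Prepare the {n}." for n in names] + ["Combine everything and serve."]
    return {"title": title, "steps": steps}


def generate_recipe(pixels: Sequence[Pixel]) -> Dict[str, object]:
    """Chains the four modules: image -> features -> ingredients -> embeddings -> recipe."""
    features = image_encoder(pixels)
    ingredients = ingredient_decoder(features)
    embeddings = ingredient_encoder(ingredients)
    recipe = instruction_decoder(embeddings)
    recipe["ingredients"] = ingredients
    return recipe


if __name__ == "__main__":
    reddish_image = [(0.9, 0.2, 0.1), (0.8, 0.3, 0.2)]
    print(generate_recipe(reddish_image))
```

The chain of calls in `generate_recipe` is the part that mirrors the described architecture; replacing any stub with a trained network would leave that chain unchanged. For the reddish two-pixel demo image, the toy decoder keeps `tomato` and `cheese`.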

The researchers trained the models on the Recipe1M dataset, which contains more than 1 million recipes collected from across the web. The evaluation showed that the method outperforms competing approaches at ingredient prediction and that it generates recipes that are more compelling than those obtained with traditional retrieval-based approaches.
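Because the ingredients of a dish form an unordered set, ingredient prediction can be scored by comparing the predicted set with the ground-truth set, for example with intersection-over-union (IoU) and F1. The minimal sketch below computes both for a single image; the function name `set_scores` and the empty-set convention are assumptions made for illustration, and the exact aggregation used in the paper's evaluation (per image or over the whole test set) is not reproduced here.

```python
from typing import Iterable, Tuple


def set_scores(predicted: Iterable[str], truth: Iterable[str]) -> Tuple[float, float]:
    """Return (IoU, F1) between a predicted and a ground-truth ingredient set.
    Duplicates are ignored because ingredients are compared as sets."""
    p, t = set(predicted), set(truth)
    if not p and not t:
        return 1.0, 1.0  # assumed convention: two empty sets match perfectly
    tp = len(p & t)  # correctly predicted ingredients
    iou = tp / len(p | t)
    # F1 = 2*TP / (|pred| + |truth|), the harmonic mean of precision and recall
    f1 = 2 * tp / (len(p) + len(t))
    return iou, f1


if __name__ == "__main__":
    iou, f1 = set_scores(["tomato", "cheese", "basil"], ["tomato", "cheese", "flour", "egg"])
    print(f"IoU={iou:.3f} F1={f1:.3f}")
```

In the example, 2 of the 5 distinct ingredients are shared, so IoU is 0.4 and F1 is 4/7. The two metrics are related by F1 = 2·IoU / (1 + IoU), so they always rank predictions for a single image in the same order.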

The implementation of the method, together with the pre-trained models, has been open-sourced and is available on GitHub. The paper is available on arXiv.