A group of researchers from NetEase Fuxi AI Lab has developed a deep learning method that generates a player's in-game character from a single input photo.

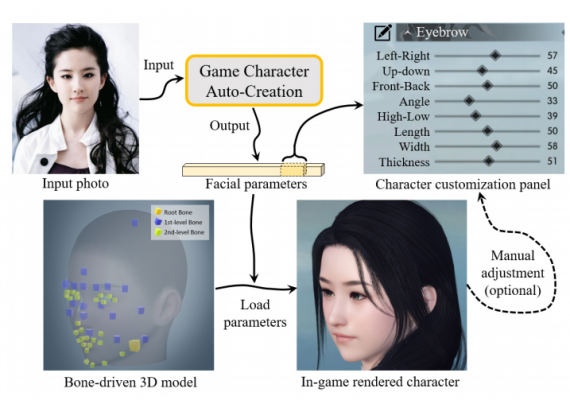

The proposed method takes a face photo as input and predicts the facial parameters needed to replicate that face in a video game. To automate game character creation, the researchers use deep neural networks, more specifically deep generative neural networks, arranged in a purpose-built architecture.

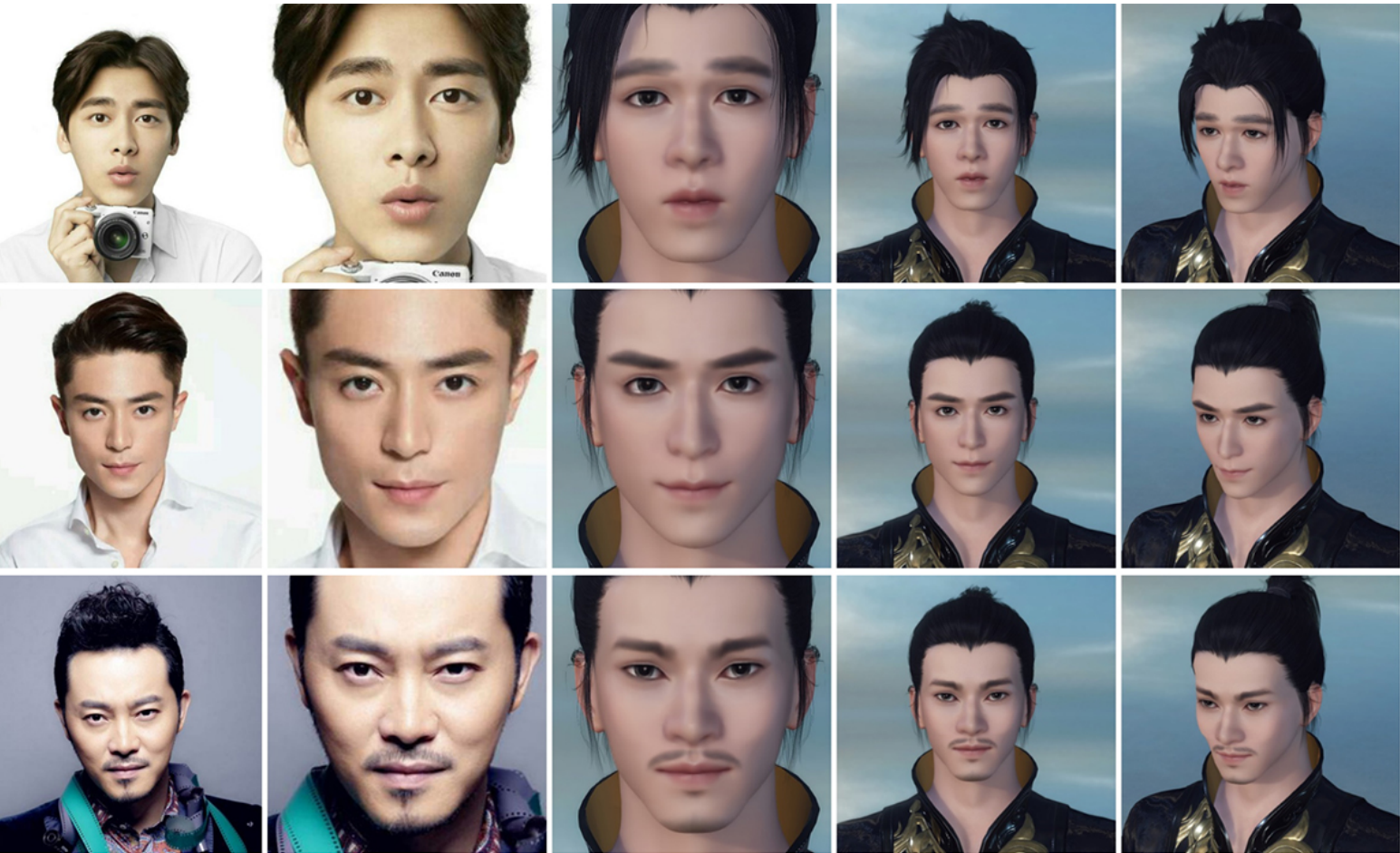

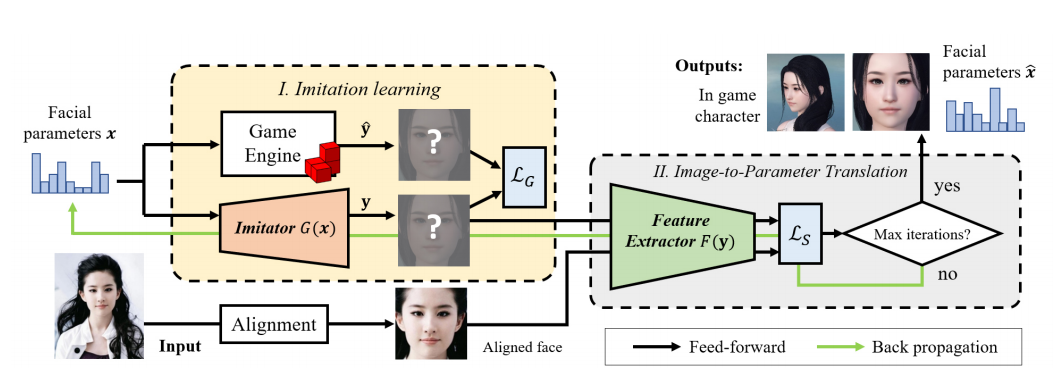

The proposed method consists of two main parts: an imitation learning module and an image-to-parameter translation module. The first module takes facial parameters as input and imitates the game's rendering engine, producing a rendered face image, while the second encodes an aligned photo of a person into a lower-dimensional latent space. The researchers trained the architecture with a combined loss function made up of two terms: a “discriminative loss” and a “facial content loss”. According to the authors, the method produces plausible results, both in the overall similarity between the generated character and the input photo and in local facial details.
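The idea of a combined objective can be illustrated with a minimal Python sketch. It assumes, as a simplification not stated in the article, that both losses compare fixed-length feature vectors: the discriminative loss is taken here as one minus the cosine similarity of face-identity embeddings, and the facial content loss as the mean absolute difference between content features. The function names and the `content_weight` balancing factor are hypothetical; this is an illustration, not the authors' implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def discriminative_loss(render_embedding, photo_embedding):
    """Hypothetical identity term: 0 when embeddings point the same way."""
    return 1.0 - cosine_similarity(render_embedding, photo_embedding)


def facial_content_loss(render_features, photo_features):
    """Hypothetical detail term: mean absolute difference of features."""
    if len(render_features) != len(photo_features):
        raise ValueError("feature vectors must have the same length")
    diffs = [abs(x - y) for x, y in zip(render_features, photo_features)]
    return sum(diffs) / len(diffs)


def combined_loss(render_embedding, photo_embedding,
                  render_features, photo_features, content_weight=1.0):
    """Sum of both terms; content_weight is an assumed balancing factor."""
    return (discriminative_loss(render_embedding, photo_embedding)
            + content_weight * facial_content_loss(render_features, photo_features))


# Orthogonal embeddings give an identity loss of 1.0; the content term adds
# 0.5 * mean(|1 - 1|, |2 - 4|) = 0.5, so the total is 1.5.
print(combined_loss([1.0, 0.0], [0.0, 1.0], [1.0, 2.0], [1.0, 4.0],
                    content_weight=0.5))
```

Both terms are zero when the features of the rendered character and the photo match exactly, so minimizing their sum favors facial parameters whose rendering agrees with the photo in overall identity and in local detail.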

The method has been deployed in a new video game, where players have used it more than 1 million times. More details about the network architecture and the method in general can be found in the paper published on arXiv.

[…] Source: Neural Network Generates In-Game Characters of Players From A Single Photo […]