A group of researchers from the University of North Carolina and the University of Maryland has developed a method for identifying emotions from people’s walking style.

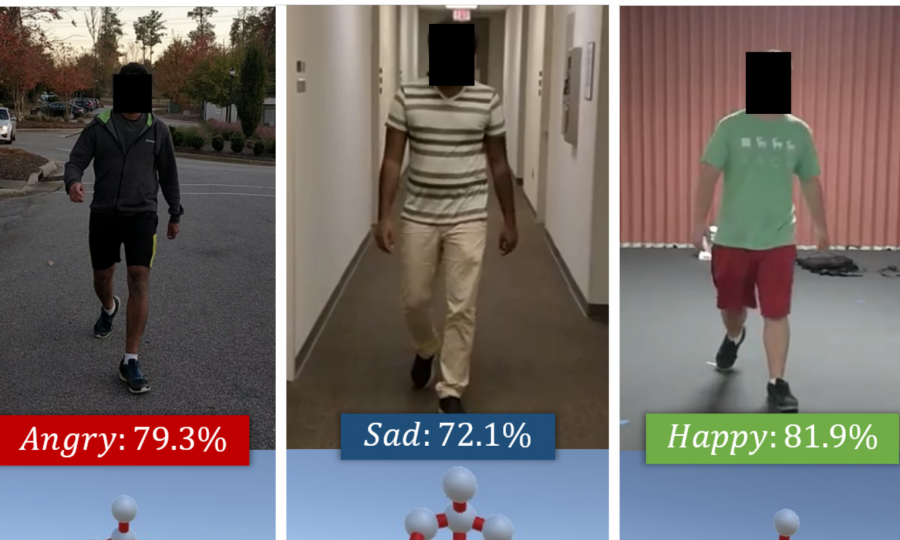

The method, based on deep neural networks, extracts a person’s gait from a video and analyzes it to classify the person’s emotional state. The researchers report that their approach achieves over 80% accuracy in identifying emotions as perceived by other humans.

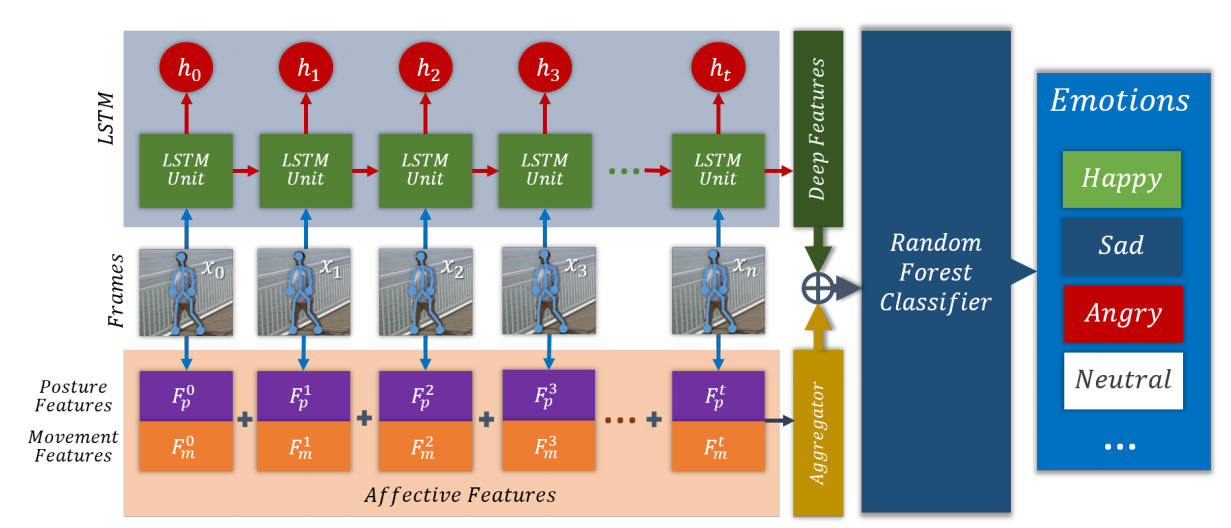

The approach builds on previous work on human pose estimation. The researchers use existing state-of-the-art 3D human pose estimation methods to extract pose-related features. These features are fed into a recurrent neural network with LSTM cells, which extracts a sequence of deep features over time. The deep features are then combined with the human pose features and passed to a Random Forest classifier, which predicts the person’s emotional state. The proposed method distinguishes four emotional states: happy, sad, angry, and neutral.
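The data flow described above can be illustrated with a minimal sketch. It is not the researchers' implementation: the LSTM weights are random and untrained, the hand-crafted pose features are a placeholder (a per-coordinate mean), and all function names and sizes are hypothetical. It only shows how a pose sequence becomes one combined feature vector that a Random Forest classifier (for example scikit-learn's `RandomForestClassifier`) could then consume.

```python
import math
import random

EMOTIONS = ["happy", "sad", "angry", "neutral"]  # the four classes named in the article


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_lstm_params(input_size, hidden_size, seed=0):
    """Random, untrained weights (assumption): one (W, b) pair per LSTM gate."""
    rng = random.Random(seed)
    cols = input_size + hidden_size

    def matrix():
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                for _ in range(hidden_size)]

    return {gate: (matrix(), [0.0] * hidden_size) for gate in ("i", "f", "o", "g")}


def affine(W, b, v):
    """Compute W @ v + b for plain Python lists."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(params, x, h, c):
    """One standard LSTM cell update for a single frame x."""
    v = x + h  # concatenate the frame with the previous hidden state
    i = [sigmoid(a) for a in affine(*params["i"], v)]    # input gate
    f = [sigmoid(a) for a in affine(*params["f"], v)]    # forget gate
    o = [sigmoid(a) for a in affine(*params["o"], v)]    # output gate
    g = [math.tanh(a) for a in affine(*params["g"], v)]  # candidate cell state
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def deep_features(params, pose_sequence, hidden_size):
    """Run the LSTM over all frames and return the final hidden state."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for frame in pose_sequence:
        h, c = lstm_step(params, frame, h, c)
    return h


def pose_features(pose_sequence):
    """Placeholder hand-crafted features: mean of each coordinate over the walk."""
    n = len(pose_sequence)
    return [sum(column) / n for column in zip(*pose_sequence)]


def combined_features(params, pose_sequence, hidden_size):
    """Deep features followed by pose features, as one classifier input vector."""
    return (deep_features(params, pose_sequence, hidden_size)
            + pose_features(pose_sequence))


if __name__ == "__main__":
    # Synthetic gait: 30 frames, 16 joints x 3 coordinates (sizes are assumptions).
    rng = random.Random(1)
    gait = [[rng.uniform(-1.0, 1.0) for _ in range(48)] for _ in range(30)]
    params = make_lstm_params(input_size=48, hidden_size=8)
    features = combined_features(params, gait, hidden_size=8)
    print(len(features))  # 8 deep features + 48 pose features
```

In a real system the LSTM would be trained on labeled gaits, and the combined vectors for many walks would form the training matrix for the Random Forest, with one of the four emotion labels per walk.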

According to the researchers, the method outperforms other emotion classification methods by a large margin. Along with the method, the researchers released a dataset called EWalk (Emotion walk), consisting of videos of walking individuals paired with emotion labels. More details can be found in the paper, which is available on arXiv.