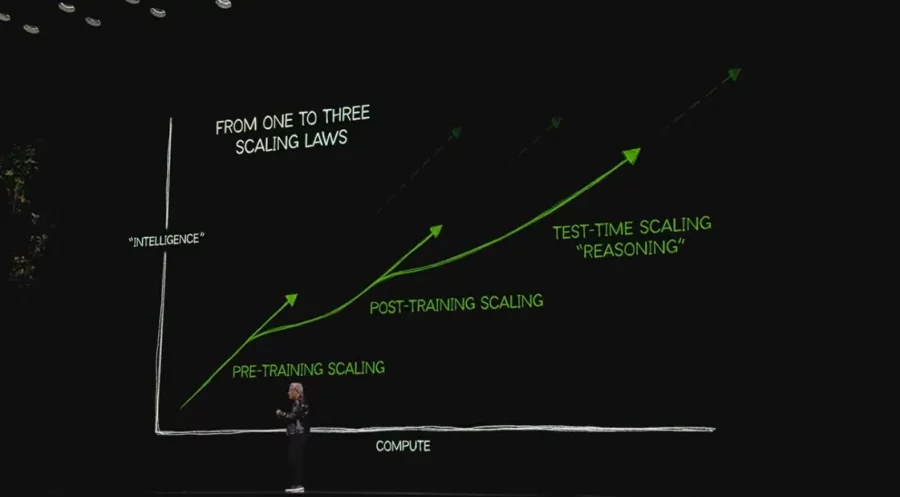

Nvidia just announced a major shift in consumer AI computing at CES 2025, combining new GPUs with a platform for running foundation models locally. The announcement includes next-gen RTX 50 Series GPUs and NIM microservices for AI model deployment.

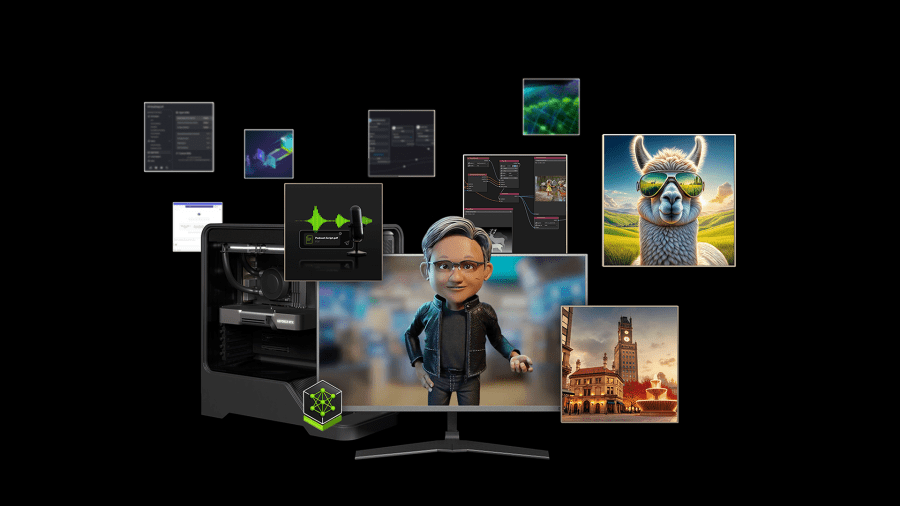

With NIM microservices, Nvidia is introducing what it calls “democratized AI development”: a system where complex AI features become accessible through graphical interfaces. Until now, working with AI models has generally required programming skills. Nvidia’s new platform aims to change this with visual tools and pre-built workflows, moving serious AI computing from data centers to personal computers and potentially changing how people interact with AI tools.

Next-Gen RTX GPUs

The new RTX 50 Series introduces FP4 compute support. Because FP4 stores each value in 4 bits, model weights take roughly half the memory they need at FP8 and a quarter of what they need at FP16. The platform also includes NIM microservices for deploying models from major providers like Black Forest Labs, Meta, and Mistral.
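To make the memory claim concrete, here is a rough back-of-the-envelope sketch. It assumes weight memory is simply parameter count times bits per parameter, and it ignores activations, the KV cache, and the scaling metadata that real quantized formats carry. The 12-billion-parameter figure and the function name are illustrative, not Nvidia's numbers.

```python
# Back-of-the-envelope weight memory at different numeric precisions.
# Assumption: memory = parameter count x bits per parameter. This ignores
# activations, KV cache, and per-block scaling data used by real formats.

BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (10^9 bytes)."""
    bits = BITS_PER_PARAM[precision]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    params = 12e9  # hypothetical 12-billion-parameter model
    for precision in BITS_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params, precision):.1f} GB")
```

Under these assumptions, each step down in precision halves the weight footprint, which is why a model that would not fit in a consumer card's VRAM at FP16 may fit at FP4.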

The Numbers That Matter

- Up to 3,352 trillion operations per second (TOPS) of AI performance

- Up to 32GB of VRAM on the new GPUs

- 2x AI inference performance boost with new FP4 compute

- 30% of last year’s AI research papers cited GeForce RTX

New AI-Development Ecosystem

Nvidia is targeting both professional developers and enthusiasts. While pros can still use traditional development tools, newcomers can experiment with AI through visual interfaces. By making AI development visual and intuitive, Nvidia aims to expand the AI developer base beyond traditional programmers to include creators, content producers, and AI enthusiasts.

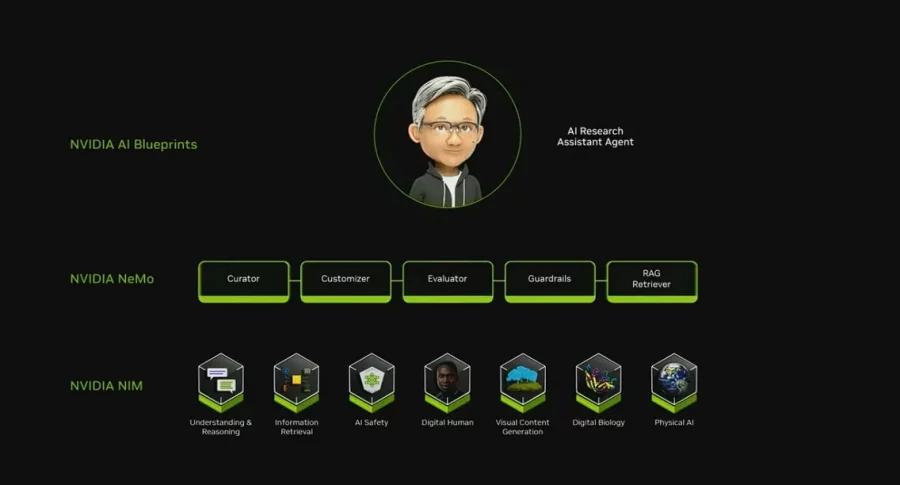

Nvidia isn’t just releasing hardware; it’s building an entire ecosystem for AI development, complete with:

- AI Blueprints for common tasks like PDF-to-podcast conversion

- Project R2X demonstrating practical AI assistants

- Integration with popular frameworks like ComfyUI and LangChain

- No-code tools for non-developers

AI Blueprints: Nvidia’s Ready-to-Use AI Workflows

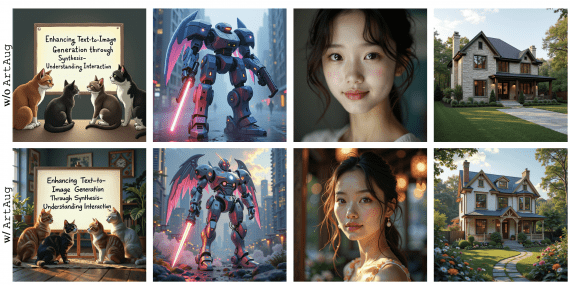

Nvidia is rolling out AI Blueprints – pre-configured AI workflows that run locally on RTX PCs, making advanced AI features accessible through reference implementations. These blueprints transform complex AI processes into ready-to-use applications, enabling users to create AI-powered content without deep technical knowledge.

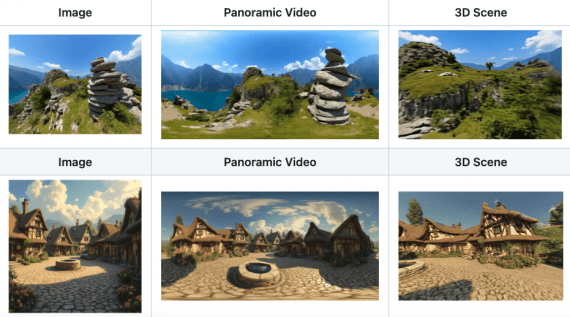

Key implementations include the PDF-to-Podcast blueprint, which extracts content from PDF documents (text, images, and tables), generates editable podcast scripts, creates audio using customizable voices or the user’s own voice samples, and enables real-time conversation with the AI host. The system is powered by Mistral-Nemo-12B-Instruct and Nvidia Riva.

The 3D-Guided Image Generation blueprint integrates with Blender for precise composition control, supports both manual and AI-generated 3D asset creation, uses the viewport camera for scene composition, and generates images that match the 3D layout through the FLUX NIM microservice.
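The PDF-to-podcast flow described above can be pictured as a few small steps: extracted text goes to a language model, the model returns a script the user can edit, and the script is split into speaker turns for a text-to-speech stage. The sketch below covers only the two middle steps. It is not Nvidia's blueprint code: the model identifier, the prompt wording, the chat-style request shape, and both function names are assumptions chosen for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical values: the model identifier and prompt wording are
# placeholders, not taken from Nvidia's blueprint.
MODEL_ID = "mistral-nemo-12b-instruct"


def build_script_request(extracted_text: str, max_chars: int = 4000) -> Dict:
    """Build a chat-style request asking an LLM to draft a podcast script.

    Assumption: the serving endpoint accepts a list of role/content messages.
    """
    prompt = "Turn this document into a podcast script:\n\n"
    return {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": "You write two-host podcast scripts."},
            {"role": "user", "content": prompt + extracted_text[:max_chars]},
        ],
    }


def split_script_into_turns(script: str) -> List[Tuple[str, str]]:
    """Split 'Speaker: line' text into (speaker, line) pairs for a TTS step."""
    turns = []
    for raw in script.splitlines():
        if ":" not in raw:
            continue  # skip blank lines and stage directions
        speaker, line = raw.split(":", 1)
        turns.append((speaker.strip(), line.strip()))
    return turns
```

Keeping the script as plain text between the two steps is what makes it editable: a user can rewrite a line before any audio is generated, and only the changed turns need to be synthesized again.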

These blueprints represent Nvidia’s strategy to make AI practical for creative professionals, combining traditional tools like Blender with new AI capabilities. By providing working examples of complex AI workflows, Nvidia is creating a foundation for developers to build more sophisticated applications while giving end users immediate access to AI-powered tools.

The platform launches in February, with support from every major PC manufacturer. Initial hardware support includes the new RTX 50 Series, RTX 4090/4080, and professional RTX 6000/5000 GPUs.

This could be the moment consumer AI computing goes mainstream, backed by serious hardware and user-friendly tools.