A group of researchers has developed Torch-Points3D, an open-source, modular framework that makes it easier to work with deep neural networks for 3D point cloud data.

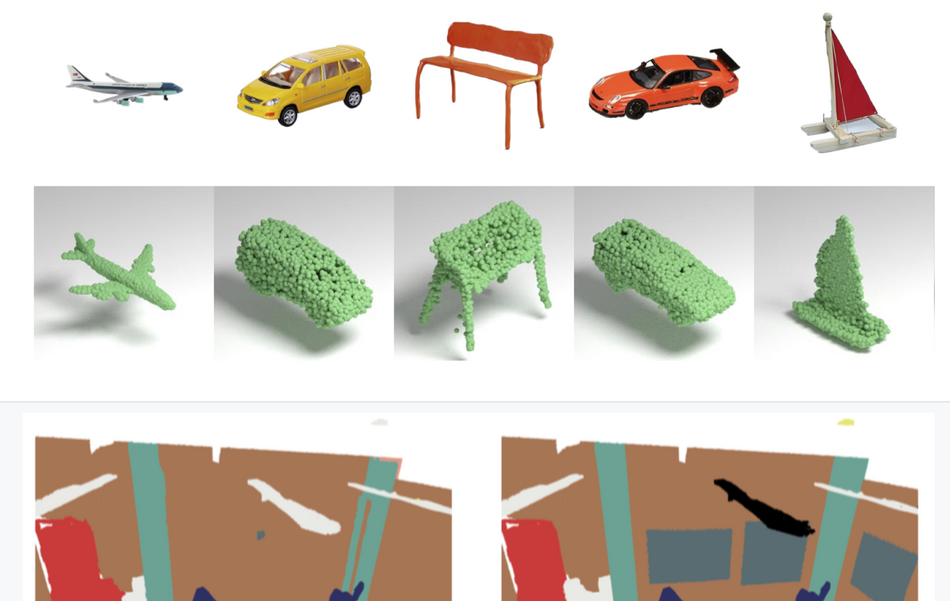

The new framework provides common ground for working with deep networks designed for point cloud data. It offers an efficient implementation and user-friendly interfaces intended to lower the barrier to entry and make development faster and easier.

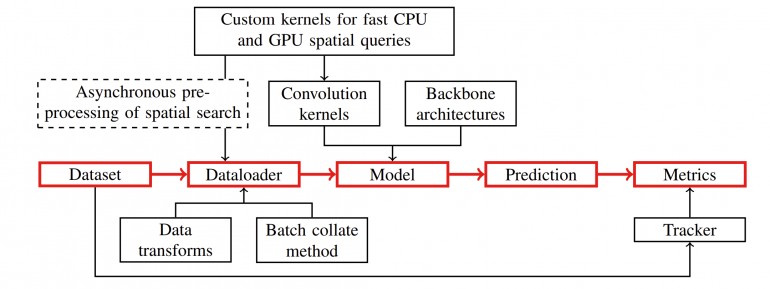

According to the researchers, the new framework differs substantially from existing frameworks for 3D point cloud processing (such as PyTorch3D, Kaolin, and Det3D). It unifies multiple tasks, models, and datasets in a single framework to support reproducible 3D deep learning. Torch-Points3D is based on PyTorch: the researchers built it from scratch while reusing some of the functionality of PyTorch Geometric for 3D data manipulation. The overall architecture of Torch-Points3D is depicted in the accompanying diagram.

The framework includes several existing models that tackle a range of 3D learning tasks, such as classification, segmentation, object detection, point cloud registration, and completion. Models included in the first version include PointNet, PointNet++, PointCNN, RSConv, VoteNet, and PPNet, among others.

The framework also comes with a number of ready-to-use datasets, including ScanNet, S3DIS, ShapeNet, SemanticKITTI, 3DMatch, ModelNet, and KITTI Odometry.

More details about the architecture, the interfaces, and how to use the framework can be found in the paper and in the GitHub repository.