Image editing is no longer a challenging task. User-friendly editing software makes tampering with and manipulating images very straightforward, and unfortunately, tampered images are increasingly used for unscrupulous business or political purposes. To make things even worse, humans usually find it difficult to recognize tampered regions, even with careful inspection.

So, let’s discover how neural networks can assist people with this kind of task.

Suggested Approach

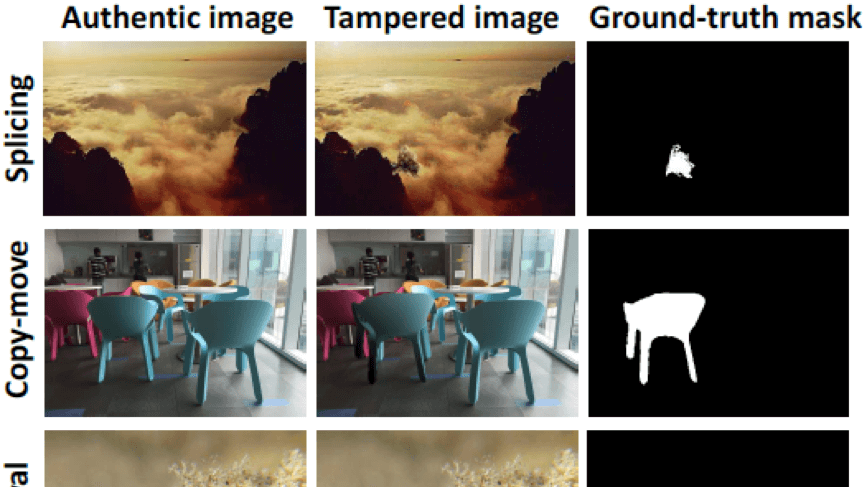

Before we dive into what neural networks can do to detect image manipulations, let’s have a short refresher on the most common tampering techniques:

- splicing copies regions from an authentic image and pastes them into other images;

- copy-move copies and pastes regions within the same image;

- removal eliminates regions from an authentic image followed by inpainting.
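To make the three techniques concrete, here is a minimal NumPy sketch that applies each of them to a grayscale image stored as a 2-D array. The function names, the `(y, x, height, width)` box convention, and the border-mean fill used in place of real inpainting are all assumptions made for illustration; real tampering tools are far more sophisticated.

```python
import numpy as np

def splice(source, target, box, dest):
    """Splicing: paste a region of `source` into a copy of `target`."""
    y, x, h, w = box          # region to copy: top, left, height, width
    dy, dx = dest             # top-left corner of the paste location
    out = target.copy()
    out[dy:dy + h, dx:dx + w] = source[y:y + h, x:x + w]
    return out

def copy_move(image, box, dest):
    """Copy-move: paste a region of `image` elsewhere in the same image."""
    return splice(image, image, box, dest)

def remove(image, box):
    """Removal: erase a region and fill it with the mean of the one-pixel
    border around it (a crude stand-in for real inpainting)."""
    y, x, h, w = box
    out = image.astype(float).copy()
    # Bounding window of the region plus a one-pixel border, clipped to the image.
    y0, y1 = max(y - 1, 0), min(y + h + 1, image.shape[0])
    x0, x1 = max(x - 1, 0), min(x + w + 1, image.shape[1])
    # Boolean mask selecting only the border pixels inside that window.
    ring = np.ones((y1 - y0, x1 - x0), dtype=bool)
    ring[y - y0:y - y0 + h, x - x0:x - x0 + w] = False
    out[y:y + h, x:x + w] = out[y0:y1, x0:x1][ring].mean()
    return out
```

For example, `copy_move(img, (0, 0, 2, 2), (4, 4))` duplicates the top-left 2×2 patch of `img` at row 4, column 4 and leaves `img` itself unchanged.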

A group of researchers, headed by Peng Zhou, investigated whether object detection networks can be adapted to the problem of image manipulation detection in a way that allows efficient detection of all three common types of manipulation.

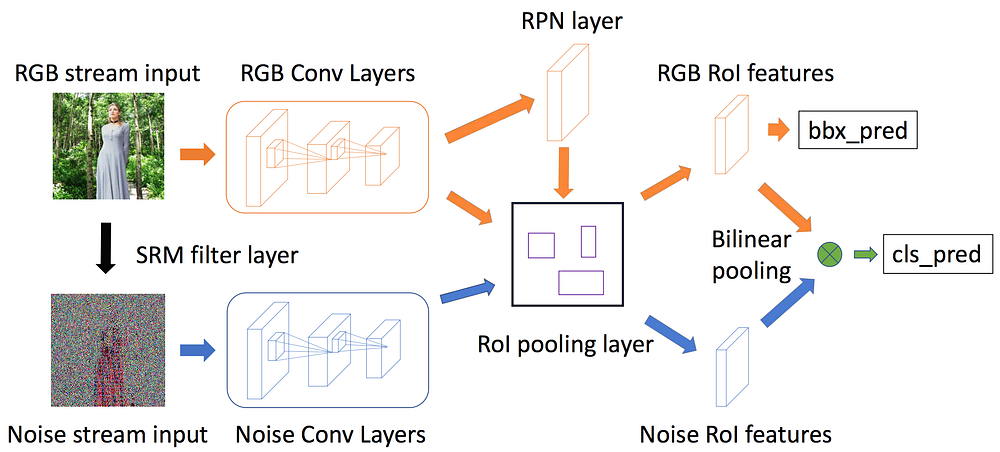

As a result, they propose a novel two-stream manipulation detection framework which explores both RGB image content and image noise features. More specifically, they adopt Faster R-CNN within a two-stream network and perform end-to-end training. The first stream utilizes features from the RGB channels to capture clues such as visual inconsistencies at tampered boundaries and contrast differences between tampered and authentic regions. The second stream analyzes the local noise features in an image. These two streams are, in fact, complementary for detecting different tampering techniques.

Network Architecture

If you are interested in the technical details of the suggested approach, this section is for you. So, let’s take a helicopter view of the network architecture.

Network architecture

The network consists of three main parts:

1. The RGB stream takes care of both bounding box regression and manipulation classification. Features from the input RGB image are learned with the ResNet 101 network and then used for manipulation classification. The region proposal network (RPN) in the RGB stream also utilizes these features to suggest regions of interest (RoIs) for bounding box regression. The experiments show that RGB features perform better than noise features for the RPN. However, this stream alone is not sufficient for some manipulation cases, where tampered images were post-processed to conceal the splicing boundary and reduce contrast differences. That’s why the second stream was introduced.

2. The noise stream is designed to pay more attention to noise than to semantic image content. Here the researchers draw on the steganalysis rich model (SRM) and use SRM filter kernels to produce noise features for their two-stream network. The resulting noise feature maps are shown in the third column of the figure below.

Illustration of tampering artifacts

Noise in this setting is modeled by the residual between a pixel’s value and the estimate of that pixel’s value produced by interpolating only the values of neighboring pixels. The noise stream shares the same RoI pooling layer as the RGB stream.

The three SRM filter kernels used to extract noise features

3. Bilinear pooling combines the RGB and noise streams in a two-stream CNN while preserving spatial information to improve the detection confidence. The output of the bilinear pooling layer is the product of the RGB stream’s RoI feature and the noise stream’s RoI feature. The researchers then apply a signed square root and L₂ normalization before forwarding the result to the fully connected layer. They use cross-entropy loss for manipulation classification and smooth L₁ loss for bounding box regression.
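The noise residual and the fusion step described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: `noise_residual` uses a simple four-neighbor average as the interpolated estimate rather than the actual SRM kernels, and `bilinear_pool` uses full bilinear pooling with a sum over spatial locations, which may differ from the pooling variant used in the original implementation. Both function names and the `(H, W, C)` feature layout are hypothetical.

```python
import numpy as np

def noise_residual(gray):
    """Residual between each pixel and the mean of its four neighbors.

    Assumption: a simple stand-in for the SRM kernels, which follow the
    same principle (pixel value minus an estimate from its neighbors).
    """
    gray = np.asarray(gray, dtype=float)
    padded = np.pad(gray, 1, mode="edge")  # replicate border pixels
    estimate = (padded[:-2, 1:-1] + padded[2:, 1:-1]
                + padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0
    return gray - estimate

def bilinear_pool(rgb_feat, noise_feat, eps=1e-12):
    """Fuse RoI features of shape (H, W, C_rgb) and (H, W, C_noise).

    At every spatial location the two channel vectors are combined by an
    outer product; the products are then summed over all locations.
    """
    h, w, c_rgb = rgb_feat.shape
    a = rgb_feat.reshape(h * w, c_rgb)
    b = noise_feat.reshape(h * w, -1)
    x = (a.T @ b).ravel()                  # sum of per-location outer products
    x = np.sign(x) * np.sqrt(np.abs(x))    # signed square root
    return x / (np.linalg.norm(x) + eps)   # L2 normalization
```

With RoI features of shape `(7, 7, 4)` and `(7, 7, 3)`, `bilinear_pool` returns a unit-length vector with 12 entries, one per pair of RGB and noise channels, which is what would be passed on to the fully connected layer.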

Comparisons with Existing Methods

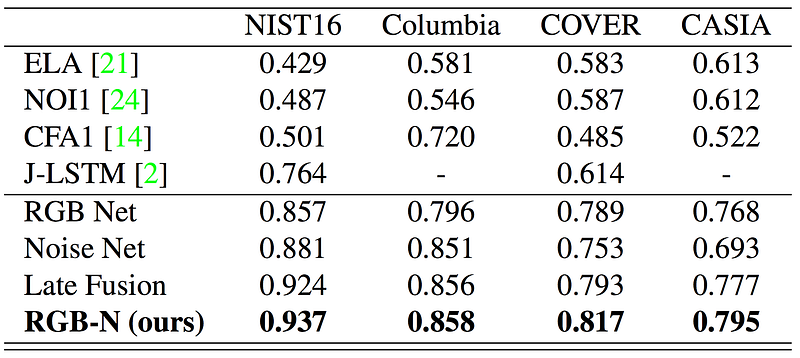

The method presented in this article was compared to other state-of-the-art methods using four different datasets: NIST16, Columbia, COVER, and CASIA. The comparison was carried out using two pixel-level evaluation metrics: F1 score and Area Under the receiver operating characteristic Curve (AUC).
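For readers less familiar with these two metrics, here is a rough NumPy sketch of how they can be computed at the pixel level. The function names are hypothetical, the masks are assumed to be arrays where nonzero means "tampered", and the AUC is computed by brute-force pairwise comparison, which is only practical for small masks; the evaluation code used in the paper may differ.

```python
import numpy as np

def pixel_f1(pred_mask, true_mask):
    """F1 score between a binary predicted mask and the ground-truth mask."""
    pred = np.asarray(pred_mask, dtype=bool).ravel()
    true = np.asarray(true_mask, dtype=bool).ravel()
    tp = np.sum(pred & true)     # tampered pixels correctly flagged
    fp = np.sum(pred & ~true)    # authentic pixels wrongly flagged
    fn = np.sum(~pred & true)    # tampered pixels that were missed
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def pixel_auc(scores, true_mask):
    """AUC as the probability that a random tampered pixel gets a higher
    score than a random authentic pixel (ties count as one half)."""
    s = np.asarray(scores, dtype=float).ravel()
    t = np.asarray(true_mask, dtype=bool).ravel()
    pos, neg = s[t], s[~t]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs both tampered and authentic pixels")
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)
```

Note that F1 needs a thresholded (binary) prediction, while AUC works directly on the per-pixel scores and so does not depend on the choice of threshold.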

The performance of the suggested model (RGB-N) was compared against several other methods (ELA, NOI1, CFA1, MFCN, and J-LSTM) as well as against the RGB stream alone (RGB Net), the noise stream alone (Noise Net), and a model that directly fuses all bounding boxes detected by RGB Net and Noise Net (Late fusion). See the results of this comparison in the tables below.

Table 1. F1 score comparison against other methods

Table 2. Pixel level AUC comparison against other methods

As is evident from the tables, the RGB-N model outperforms conventional methods such as ELA, NOI1, and CFA1. That could be because they all focus on specific tampering artifacts that contain only partial information for localization. The suggested approach outperformed MFCN on the NIST16 and Columbia datasets, but not on the CASIA dataset. Notably, the noise stream on its own performed better (based on the F1 score) than the full two-stream model on the Columbia dataset. That’s because Columbia contains only uncompressed spliced regions and hence preserves noise differences very well.

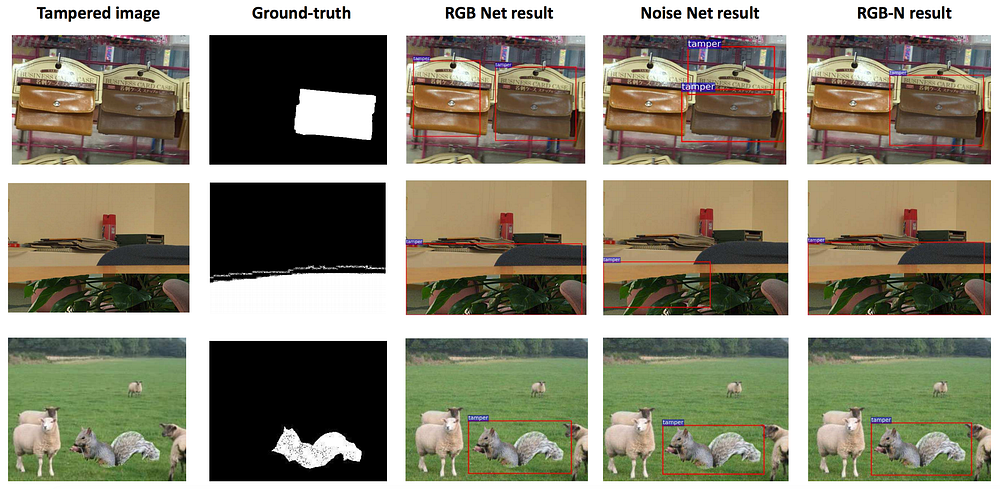

Below you can also see some qualitative results comparing RGB Net, Noise Net, and the RGB-N network in two-class image manipulation detection. As is evident from these examples, the two-stream network performs well even if one of the streams fails (the RGB stream in the first row and the noise stream in the second row).

Qualitative visualization of results

Furthermore, the network introduced here is good at detecting the exact manipulation technique used. Using the information provided by both the RGB and noise maps, it can distinguish between splicing, copy-move, and removal tampering techniques. Some examples are provided below.

Qualitative results for the multi-class image manipulation detection

Bottom Line

This novel approach to image manipulation detection outperforms the conventional methods it was compared against (ELA, NOI1, and CFA1). Such high performance is achieved by combining two different streams (RGB and noise) to learn rich features for image manipulation detection. The two streams make complementary contributions to finding tampered regions. Noise features, extracted by an SRM filter, enable the model to capture noise inconsistency between tampered and authentic regions, which is extremely important when dealing with splicing and removal tampering techniques.

In addition, the model is also good at distinguishing between various tampering techniques. So it tells you not only which region was manipulated, but also how it was manipulated: was an object inserted, removed, or copy-moved? You’ll get the answer.