ArtAug: Open Source Framework for Image Generation Models Enhancement

18 December 2024

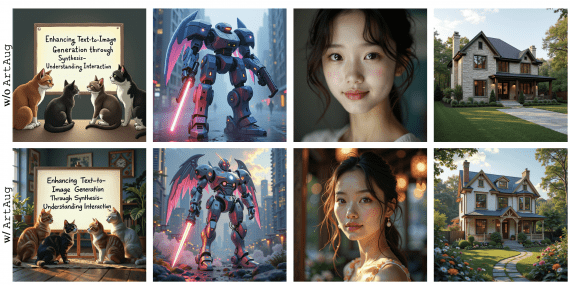

Researchers from East China Normal University and Alibaba Group have introduced ArtAug, a framework that enhances text-to-image generation through synthesis-understanding interaction. This approach significantly improves image quality without requiring extensive manual…