OpenAI has introduced a new version of its DALL-E text-to-image model. Compared to the first version, DALL-E 2 generates higher-quality images with less latency and can also edit existing images.

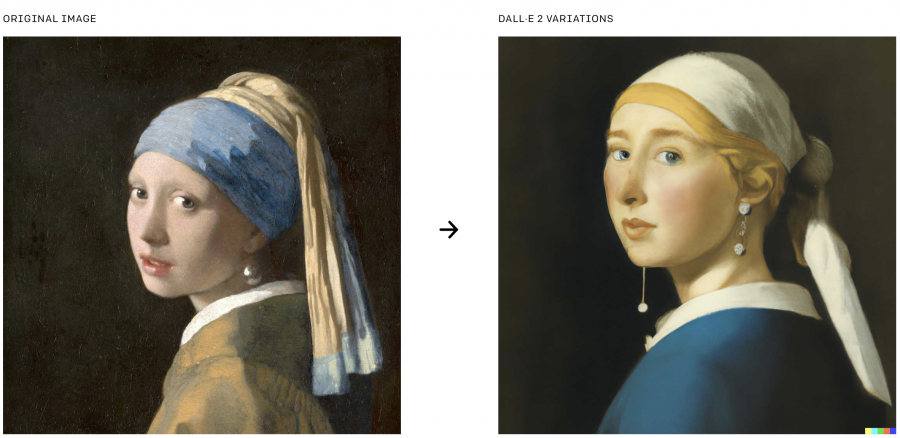

One of DALL-E 2's new features, inpainting, lets the user select an area of an existing image and ask the model to edit it. For example, you can replace one painting hanging on the wall with another, or add a vase of flowers to a coffee table. In doing so, the model takes the lighting of the scene into account. Another feature, variations, generates images similar to the original one.

DALL-E 2 generates images at a resolution of 1024 x 1024 pixels, which is 16 times as many pixels as the previous version of the model produced. The model is based on CLIP, a computer vision system that OpenAI also announced last year.
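The "16 times" figure refers to total pixel count rather than to the length of each side. A minimal sketch of the arithmetic follows, assuming the first DALL-E produced 256 x 256 images (a figure this article does not state); the function name `pixel_ratio` is purely illustrative.

```python
def pixel_ratio(new_side: int, old_side: int) -> int:
    """Return how many times more pixels a square image of new_side has than one of old_side."""
    return (new_side * new_side) // (old_side * old_side)


# Assumption: the first DALL-E output 256 x 256 images (not stated in the article).
OLD_SIDE = 256
NEW_SIDE = 1024  # DALL-E 2 output size given in the article

side_ratio = NEW_SIDE // OLD_SIDE              # growth along each side
total_ratio = pixel_ratio(NEW_SIDE, OLD_SIDE)  # growth in total pixel count

print(f"Each side is {side_ratio}x longer; the image has {total_ratio}x as many pixels.")
```

Under that assumption, each side is four times longer, and squaring that factor gives the sixteenfold increase in pixels.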

The full DALL-E model has not been publicly released, but over the past year third-party developers have refined their own tools that mimic some of its functions. One of the most popular is Wombo’s Dream mobile app, which generates images from a text description in various artistic styles.

OpenAI built safeguards into the new version during development. In particular, the model was trained on data from which unwanted material had been filtered out. In the near future, OpenAI plans to make the model available for use in third-party applications.