Flamingo is a multimodal DeepMind model that generates a text description of photos, videos and sounds. The model surpasses the previous state-of-the-art models in 16 tasks, and its feature is the ability to learn from several examples.

Usually, in order for a visual model to master a new task, it must be trained on tens of thousands of examples specially marked up for this task. If the goal is to count and identify the animals in an image, it would be necessary to collect thousands of images of animals indicating their number and type. This process is inefficient, expensive and resource-intensive, requires large amounts of marked-up data and the need to train a new model every time it faces a new task.

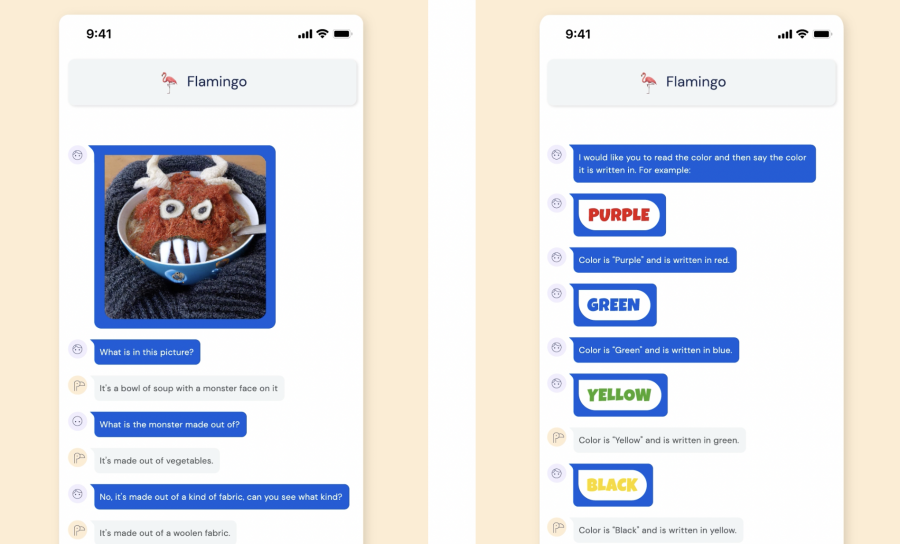

Flamingo – few-shot is a model that solves this problem in a wide range of multimodal tasks. Based on several examples of pairs of visual input data and expected text responses, the model can be asked a question with a new image or video, and then generate an answer.

In 16 tasks with 4 pairs of examples on which Flamingo was tested, all previous state-of-the-art approaches were used. Flamingo combines large language models, visual representations (each of which has been pre-trained), and architectural components developed in DeepMind between them. The model is then trained on a mixture of additional unmarked large-scale multimodal data from the Internet.