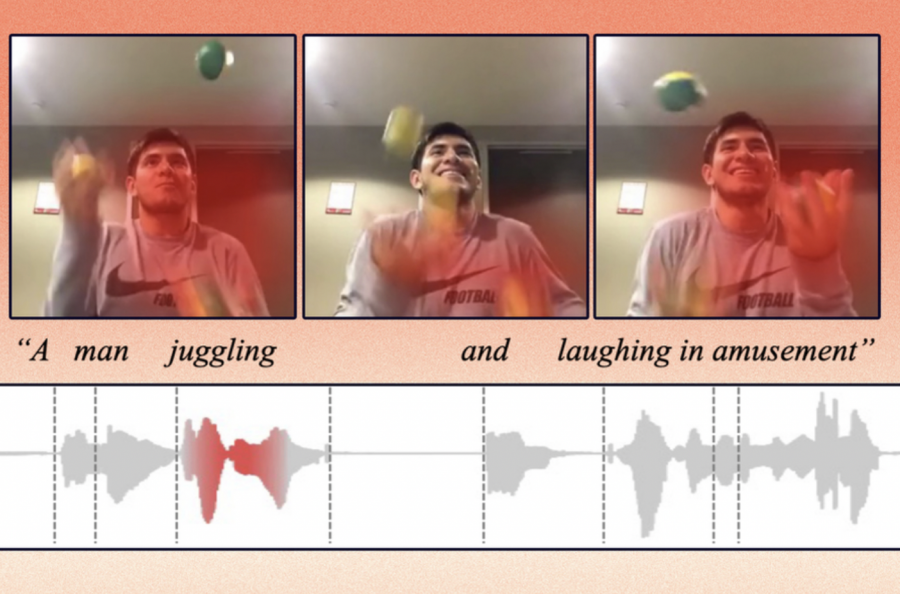

MIT has developed a model of cross-modal search for actions in text, audio and video content. The model allows you to determine where a certain action takes place in the video and identify it.

The algorithm is trained to represent data in such a way as to capture concepts that are common to visual, audio, or text modalities. For example, their method allows you to find out that the crying child in the video is associated with the spoken word “crying” in the audio recording.

The model was tested in cross-modal search tasks on three pairs of datasets: a dataset with video recordings and text captions to them, a dataset of voiced videos, and a dataset with one frame from the video and its full audio file.

The technology is planned to be used to train robots to recognize concepts in a similar way to how humans do it. The peculiarity of the model arising from its idea – the allocation of common links in cross-modal content – is the interpretability of its results, which is important for robotics tasks.