Image colorization is a widespread problem within computer vision. The ultimate objective of image colorization is to map a gray-scale image to a visually plausible and perceptually meaningful color image.

It is important to note that image colorization is an ill-posed problem. The goal is stated only as a “visually plausible and realistic” colorization because the problem is multi-modal: many different colorizations are possible for the same gray-scale image.

Image decolorization (color-to-grayscale) is a non-invertible image degradation, so multiple meaningful and plausible results exist for a single input image. Moreover, the expected “visually plausible” result is subjective and varies considerably from person to person.
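A small numeric illustration of why the conversion cannot be inverted. The sketch below assumes the common Rec. 601 luma weights (the article does not say which gray-scale conversion is used) and shows a pure red and a dark neutral gray collapsing to the same gray value.

```python
import numpy as np

# Rec. 601 luma weights, a common RGB-to-gray conversion (assumed for illustration).
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def to_gray(rgb):
    """Map an RGB color (or an H x W x 3 image) with values in [0, 1] to gray."""
    return np.asarray(rgb, dtype=float) @ LUMA_WEIGHTS

pure_red = np.array([1.0, 0.0, 0.0])
dark_gray = np.array([0.299, 0.299, 0.299])

# Two clearly different colors produce the same gray value, so the gray value
# alone cannot tell us which color to restore.
same_gray = np.isclose(to_gray(pure_red), to_gray(dark_gray))
```

Any colorization method therefore has to choose among many candidate colors for each gray value, which is where learned priors or a reference image come in.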

Previous works

Despite this difficulty, researchers have proposed several solutions to the ill-conditioned problem of realistic image colorization. Because the result is highly subjective, many of the proposed methods included human intervention to obtain better colorization results. In the past few years, several colorization approaches exploiting the power of deep learning have been proposed. In these approaches the colorization is learned from large amounts of data, in the hope of improving generalization in the colorization process.

More recently, new approaches have examined the trade-off between the controllability that comes from interaction and the robustness that comes from learning.

In a novel approach, researchers from Microsoft Research Asia introduce the first deep learning approach for exemplar-based local colorization.

State-of-the-art idea

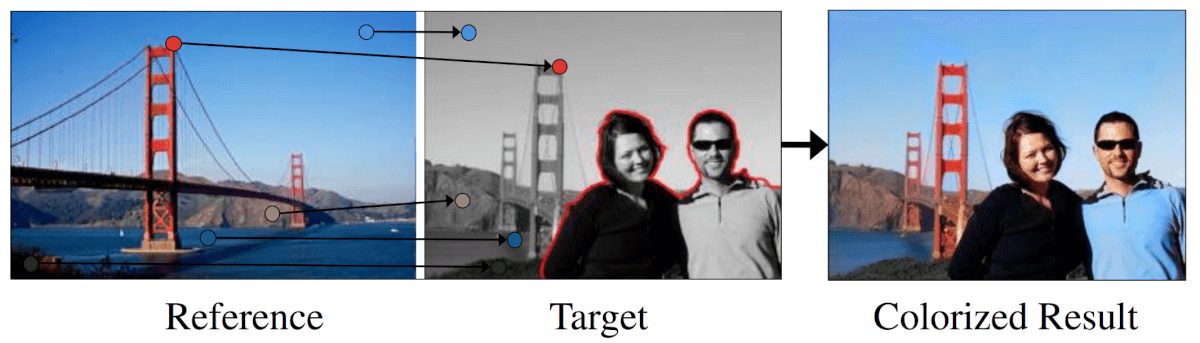

The idea of the proposed approach is to supply a reference color image alongside the input gray-scale image and have the method output a plausible colorization. Given a reference color image, potentially semantically similar to the input image, a convolutional neural network maps the gray-scale image to an output colorized image in an end-to-end manner.

Method

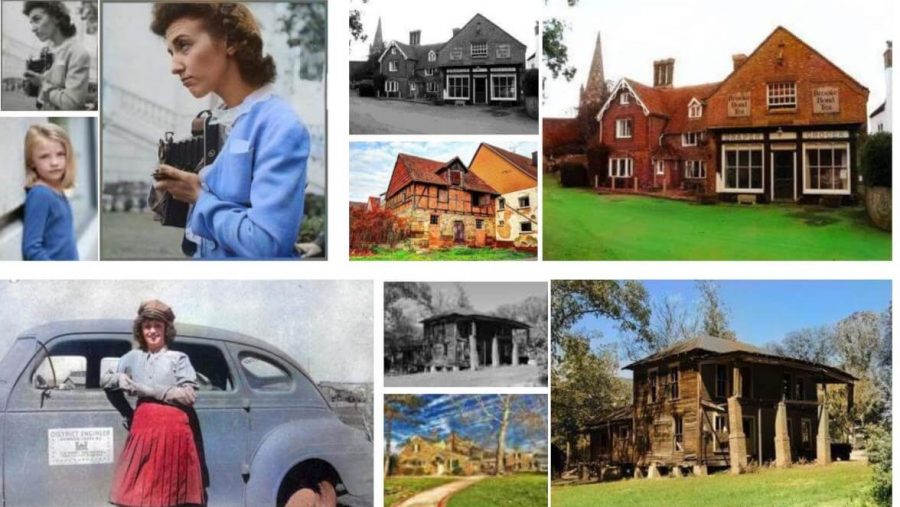

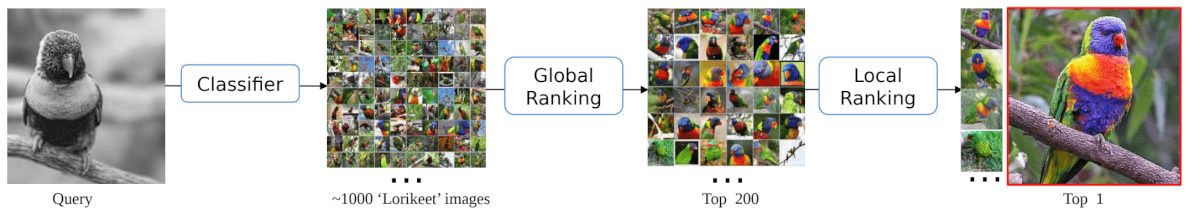

Besides the proposed method, which is the first deep learning based exemplar colorization method, the researchers provide a few more contributions in their paper: a reference image retrieval algorithm for reference recommendation, with which fully automatic colorization can also be obtained; the method's transferability to unnatural images; and an extension to video colorization.

The main contribution, the colorization method, is able to colorize an image according to a given semantically “similar” reference image. This reference is either supplied by the user or obtained with the image retrieval system, which is the second contribution. The retrieval system tries to find an image that is semantically similar to the input image, so that its local color patches can be reused to produce a more realistic and plausible output colorization.
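The article does not describe how the retrieval system ranks candidate references. As a rough sketch of the general idea only, one simple option is to rank candidates by the cosine similarity of global feature vectors. The function `retrieve_reference` and the toy descriptors below are hypothetical and are not taken from the paper.

```python
import numpy as np

def retrieve_reference(query_feat, candidate_feats):
    """Return the index of the candidate most similar to the query, plus all scores.

    query_feat:      (D,) global descriptor of the gray-scale input (hypothetical).
    candidate_feats: (N, D) descriptors of candidate color images (hypothetical).
    """
    q = query_feat / np.linalg.norm(query_feat)
    c = candidate_feats / np.linalg.norm(candidate_feats, axis=1, keepdims=True)
    scores = c @ q  # cosine similarity of each candidate with the query
    return int(np.argmax(scores)), scores

# Toy descriptors standing in for real network features.
query = np.array([1.0, 0.0, 1.0])
candidates = np.array([
    [0.0, 1.0, 0.0],   # unrelated image
    [0.9, 0.1, 1.1],   # semantically close image
    [1.0, 1.0, 0.0],   # partially related image
])
best_index, scores = retrieve_reference(query, candidates)
```

Here the second candidate gets the highest score and would be recommended as the reference.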

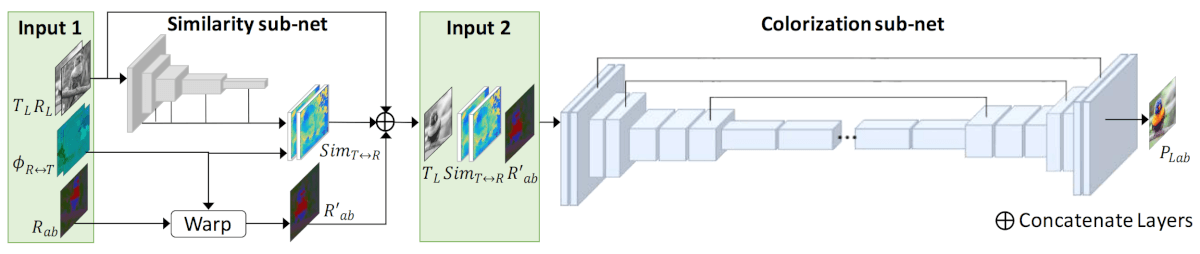

The colorization method employs two deep convolutional neural networks: a similarity sub-network and a colorization sub-network. The first is a pre-processing network that measures the semantic similarity between the reference and the target using a VGG-19 network pre-trained on a gray-scale image object recognition task. It provides a robust and more meaningful similarity metric, and its output serves as input to the colorization network.
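To make "similarity in feature space" concrete, here is a simplified sketch. It assumes random arrays in place of real VGG-19 feature maps and uses plain nearest-neighbor matching by cosine similarity, which is a simplification and not necessarily the matching procedure the authors use.

```python
import numpy as np

def cosine_similarity_matrix(feat_a, feat_b):
    """Pairwise cosine similarity between two feature maps of shape (H, W, C).

    Returns an (H*W, H*W) matrix; entry (i, j) compares position i of
    feat_a with position j of feat_b.
    """
    a = feat_a.reshape(-1, feat_a.shape[-1])
    b = feat_b.reshape(-1, feat_b.shape[-1])
    a = a / (np.linalg.norm(a, axis=1, keepdims=True) + 1e-8)
    b = b / (np.linalg.norm(b, axis=1, keepdims=True) + 1e-8)
    return a @ b.T

rng = np.random.default_rng(0)
target_feat = rng.normal(size=(4, 4, 16))      # placeholder for target features
reference_feat = rng.normal(size=(4, 4, 16))   # placeholder for reference features

sim = cosine_similarity_matrix(target_feat, reference_feat)
# For every target position: index of the best-matching reference position
# and how strong that match is.
best_match = sim.argmax(axis=1)
match_score = sim.max(axis=1).reshape(4, 4)
```

A low `match_score` at some position signals that the reference offers no reliable color there, which is the kind of information the colorization network can use.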

The second network, the colorization sub-network, is an end-to-end convolutional neural network that learns selection, propagation, and prediction of colors simultaneously. It takes as input the output of the pre-processing done by the similarity sub-network as well as the input gray-scale image. More precisely, its input consists of the target gray-scale image, the aligned reference image, and bidirectional similarity maps (between the input and the reference image).
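The three inputs listed above could be assembled roughly as follows. This is only a sketch: the channel layout (one luminance channel, two chrominance channels, one similarity map per direction), the random correspondence, and all variable names are assumptions made for illustration.

```python
import numpy as np

H, W = 4, 4
rng = np.random.default_rng(0)

target_lum = rng.random((H, W))            # gray-scale target (1 channel)
reference_chroma = rng.random((H, W, 2))   # color channels of the reference
# Hypothetical correspondence: for each target pixel, the flat index of its
# matched reference pixel (random here; in practice from feature matching).
match_index = rng.integers(0, H * W, size=H * W)

# Align the reference to the target by copying each matched pixel's color.
aligned_chroma = reference_chroma.reshape(-1, 2)[match_index].reshape(H, W, 2)

# Placeholder similarity maps in both directions (target to reference and back).
sim_forward = rng.random((H, W))
sim_backward = rng.random((H, W))

# Stack everything along the channel axis to form the network input.
network_input = np.concatenate(
    [target_lum[..., None], aligned_chroma,
     sim_forward[..., None], sim_backward[..., None]],
    axis=-1,
)
```

Under these assumptions the network sees a five-channel image in which every pixel carries its gray value, a candidate color, and two confidence values for that candidate.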

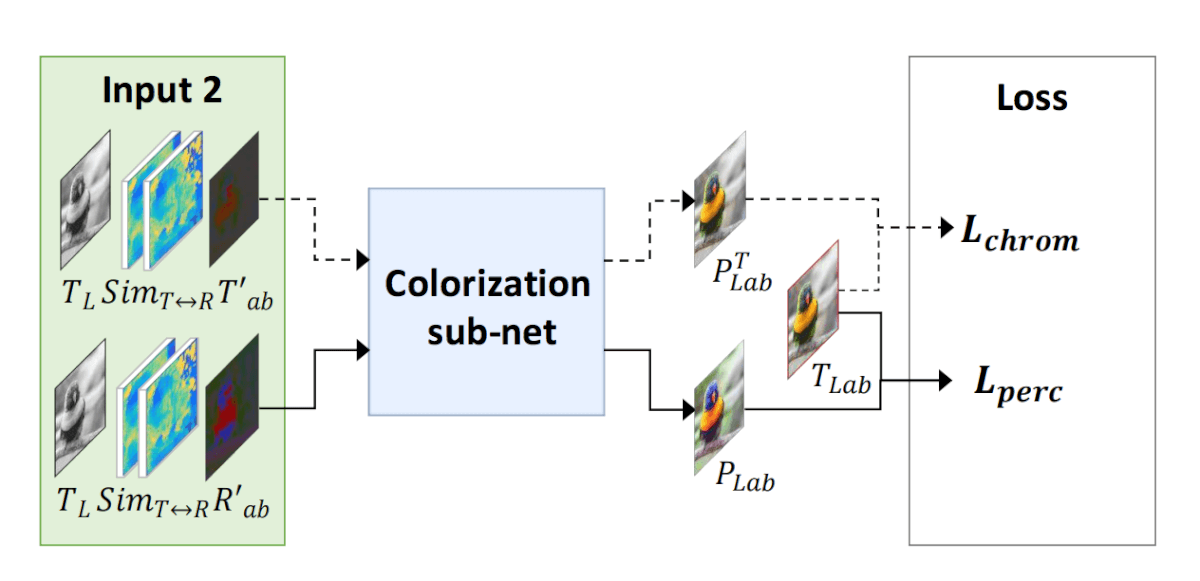

The network has to handle two cases: using the true reference colors (from the reference image or similar) where they are reliable, and producing a natural colorization when no reliable reference color is available. To control both, the authors propose a branch learning scheme for the colorization network. This multi-task network involves two branches: a Chrominance branch and a Perceptual branch. The same network is used and trained in both branches, but the input and the loss function differ depending on the branch.

In the Chrominance branch, the network learns to selectively propagate the correct reference colors, depending on how well the target and the reference are matched. While this branch tries to satisfy chrominance consistency, the other branch uses a “Perceptual loss” to enforce a close match between the high-level feature representations of the result and those of the true color image. Both branches are shown in the accompanying figure.
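The two-branch objective can be sketched as below. The article only says that each branch has its own loss, so the concrete choices here are assumptions: a smooth L1 distance for chrominance, a mean squared error in feature space for the perceptual term, an arbitrary weight `alpha`, and a hypothetical `toy_features` function standing in for a pre-trained feature extractor.

```python
import numpy as np

def smooth_l1(pred, target, delta=1.0):
    """Smooth L1 (Huber-style) distance, averaged over all elements."""
    diff = np.abs(pred - target)
    loss = np.where(diff < delta, 0.5 * diff ** 2 / delta, diff - 0.5 * delta)
    return float(loss.mean())

def toy_features(image):
    """Hypothetical stand-in for a pre-trained feature extractor:
    2x2 average pooling over an (H, W, C) image with even H and W."""
    h, w, c = image.shape
    return image.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def perceptual_loss(pred, target):
    """Mean squared error between feature representations."""
    return float(np.mean((toy_features(pred) - toy_features(target)) ** 2))

def total_loss(pred_chroma_branch, pred_perceptual_branch, ground_truth, alpha=0.01):
    """Combine both branch losses; alpha is an arbitrary illustrative weight."""
    chroma = smooth_l1(pred_chroma_branch, ground_truth)
    percep = perceptual_loss(pred_perceptual_branch, ground_truth)
    return chroma + alpha * percep
```

The point of the split is that the first term rewards copying colors faithfully, while the second only asks for a result that looks right at the level of features, so it can tolerate colors that differ from the ground truth.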

Comparison with other state-of-the-art methods

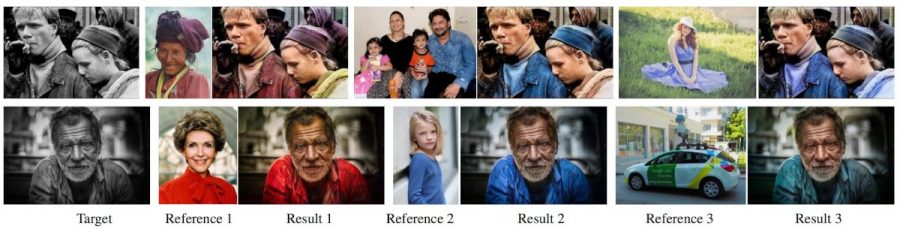

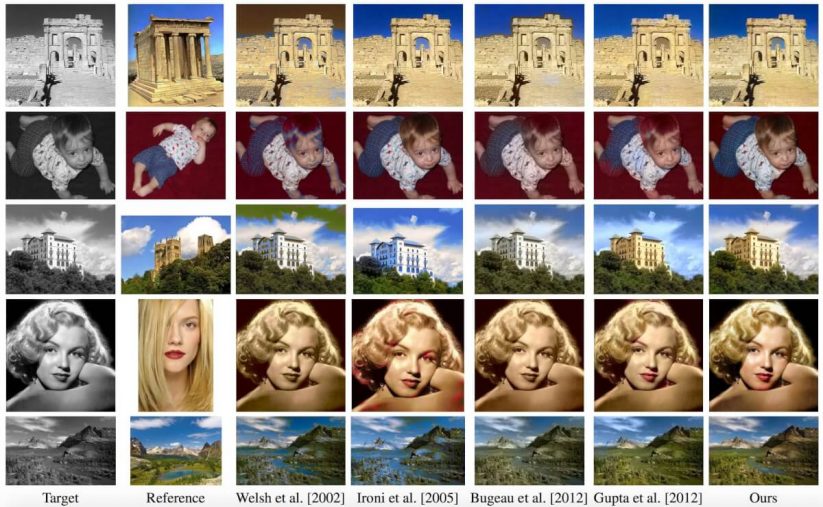

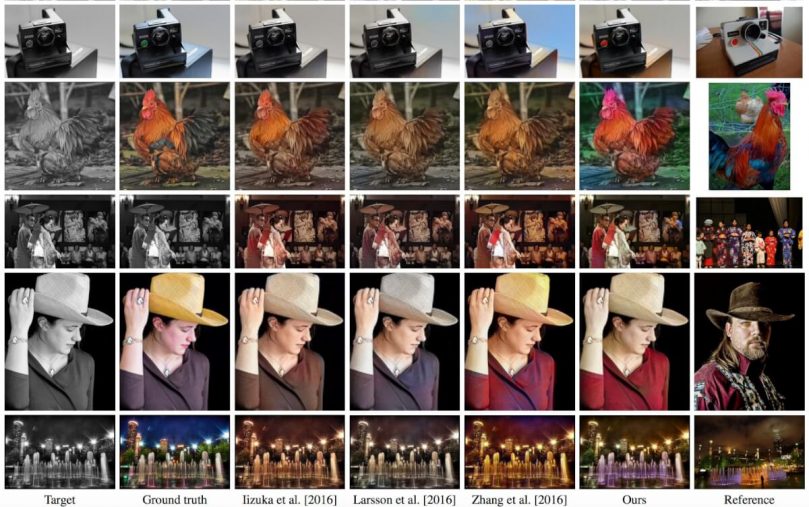

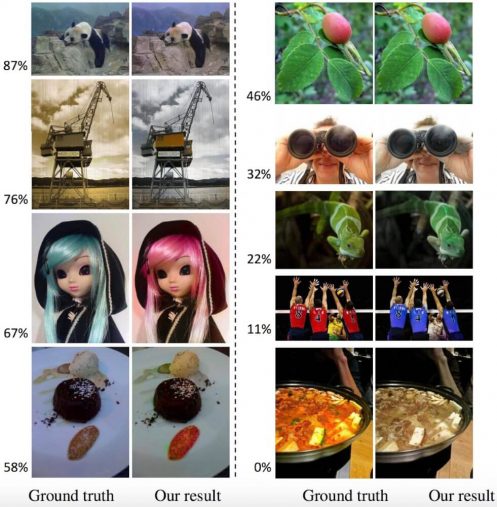

The proposed method gives convincing results in realistic image colorization. The evaluation is divided into three groups: comparison with exemplar-based methods, comparison with learning-based methods, and comparison with interactive methods.

To compare against exemplar-based methods, the authors collected around 35 image pairs from the papers of the compared methods and evaluated the results both quantitatively and qualitatively. They show that their method outperforms existing exemplar-based methods, yielding better visual results. They argue that the success comes from the sophisticated mechanism of color sample selection and propagation, which is learned jointly from data rather than designed through heuristics.

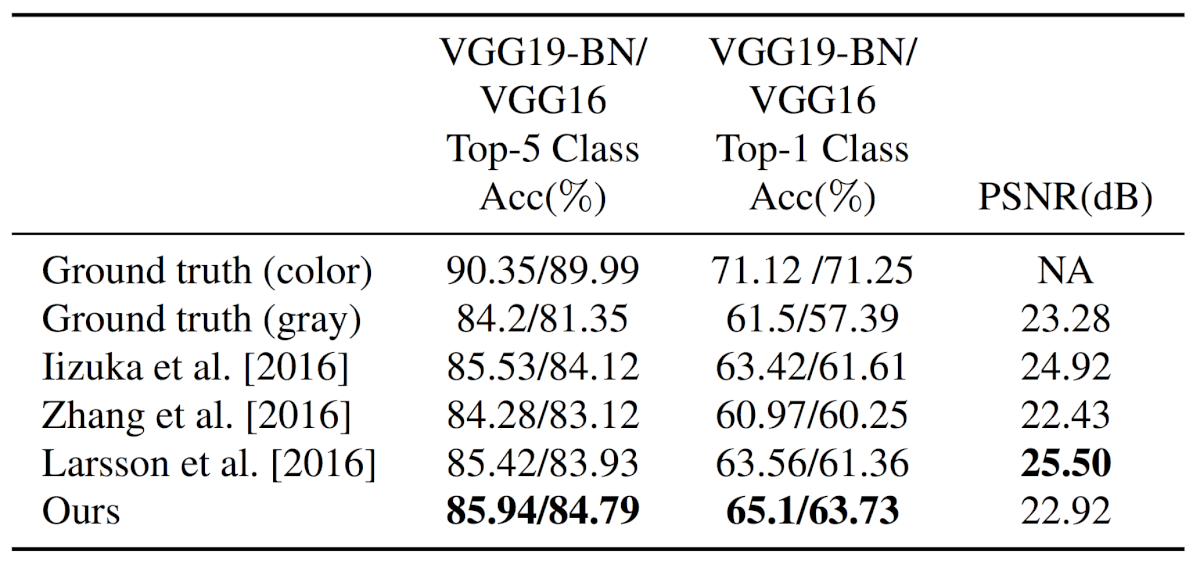

To compare against other learning-based methods, the authors feed the colorized results into VGG19 or VGG16 networks pre-trained on an image recognition task and use the outcome as an indication of how natural the generated images are. Under this evaluation, the proposed method outperforms the existing methods. However, some of the competing methods yield a better PSNR than the proposed method.
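For readers unfamiliar with PSNR, the standard definition is 10 · log10(MAX² / MSE), where MSE is the mean squared error against the ground-truth image. A minimal sketch follows; the function name and the toy images are illustrative only.

```python
import numpy as np

def psnr(image_a, image_b, max_value=1.0):
    """Peak signal-to-noise ratio in decibels between two same-sized images."""
    a = np.asarray(image_a, dtype=float)
    b = np.asarray(image_b, dtype=float)
    mse = np.mean((a - b) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

ground_truth = np.zeros((8, 8, 3))
colorized = np.full((8, 8, 3), 0.1)   # every value off by 0.1
score = psnr(ground_truth, colorized)  # roughly 20 dB
```

PSNR penalizes any deviation from the single ground-truth image, so a colorization can be perfectly plausible and still score lower, which fits the ill-posed nature of the problem described at the start.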

Conclusion

In this novel colorization approach, the authors once again show the power of deep learning in solving an important, although ill-conditioned, problem. They leverage the flexibility and potential of deep convolutional neural networks to provide a robust and controllable image colorization method. They also offer a complete system for automatic image colorization based on the most similar reference image, which extends to unnatural images as well as videos. In conclusion, the paper proposes a complete, deployable system built around an innovative deep learning based method for colorizing gray-scale images in a robust and realistic way.