TransGAN is a GAN model in which both the generator and the discriminator are built on Transformer architectures. GAN architectures traditionally rely on convolutions; in TransGAN, the convolutions are replaced with Transformers. The project code is available in a public repository on GitHub.

Transformer for computer vision tasks

The success of Transformer architectures in NLP has stimulated interest in applying them to CV tasks such as classification, recognition, and segmentation. The researchers test whether Transformers can replace convolutions in the GAN architecture for computer vision tasks.

More about the model

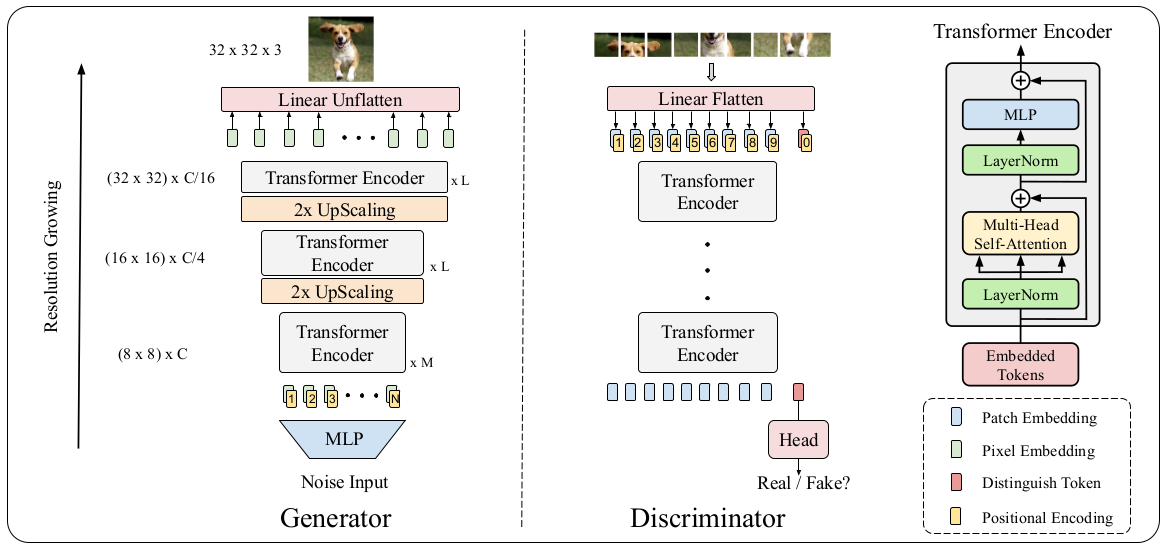

The researchers have developed a GAN that does not use convolutions and is based solely on Transformer architectures. The basic TransGAN consists of two parts:

- A generator that progressively increases feature resolution while reducing the embedding dimension;

- A discriminator that operates on patches of an image.
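
The two parts above can be illustrated with a minimal NumPy sketch of the tensor shapes involved. It is not the project's code. It assumes the generator trades embedding size for resolution with a pixel-shuffle style rearrangement of tokens, and that the discriminator receives an image cut into non-overlapping square patches. The function names `pixel_shuffle_tokens` and `split_into_patches`, and all sizes used, are hypothetical and chosen only for illustration.

```python
import numpy as np


def pixel_shuffle_tokens(tokens, height, width, factor=2):
    """Rearrange (H*W, C) tokens into (H*f * W*f, C / f^2) tokens.

    Assumed upsampling scheme: resolution grows by `factor` per side
    while the embedding dimension shrinks by factor**2.
    """
    num_tokens, channels = tokens.shape
    assert num_tokens == height * width
    assert channels % (factor * factor) == 0
    out_channels = channels // (factor * factor)
    # Split each embedding into an f x f block of smaller embeddings.
    grid = tokens.reshape(height, width, factor, factor, out_channels)
    # Interleave the block axes with the spatial axes: (H, f, W, f, C_out).
    grid = grid.transpose(0, 2, 1, 3, 4)
    return grid.reshape(height * factor * width * factor, out_channels)


def split_into_patches(image, patch):
    """Cut an (H, W, C) image into flattened non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # (H/p, W/p, p, p, C)
    return grid.reshape((h // patch) * (w // patch), patch * patch * c)


# Illustrative sizes only: an 8x8 grid of 1024-dim tokens.
tokens = np.zeros((8 * 8, 1024))
upsampled = pixel_shuffle_tokens(tokens, 8, 8)
print(upsampled.shape)  # (256, 256): a 16x16 grid of 256-dim tokens

# A 32x32 RGB image cut into 8x8 patches for the discriminator.
image = np.zeros((32, 32, 3))
patches = split_into_patches(image, 8)
print(patches.shape)  # (16, 192)
```

In this sketch, each upsampling step quadruples the number of tokens and divides the embedding size by four, so the total amount of data per stage stays constant while spatial resolution grows.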

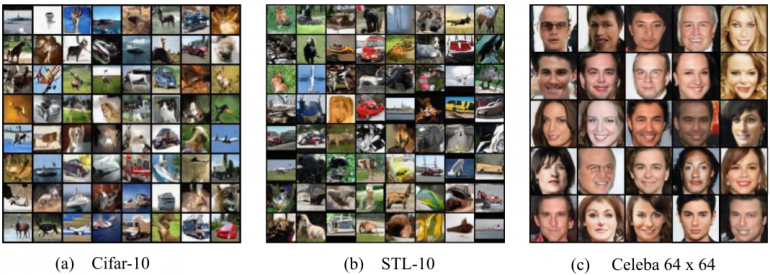

Both parts of the model are based on the Transformer. In experiments, the model produces results comparable to state-of-the-art GAN models. On the STL-10 dataset, TransGAN outperforms previous models, reaching an Inception Score (IS) of 10.10 and a Fréchet Inception Distance (FID) of 25.32.