Google AI neural network simulates camera movement

3 March 2021

Google AI neural network simulates camera movement

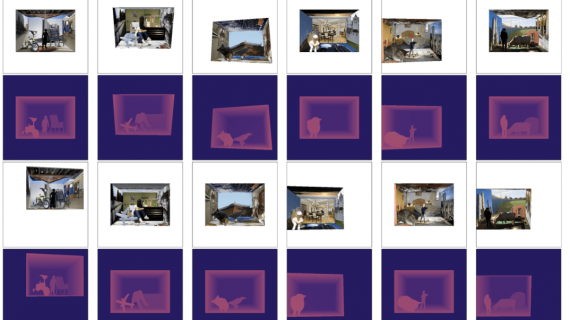

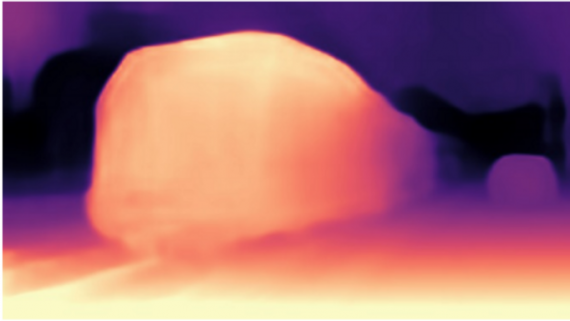

Google AI neural network simulates camera movement and parallax for photos. The Cinematic photos system is used in the Google Photos app. Image Depth Estimation Along with the latest photography…