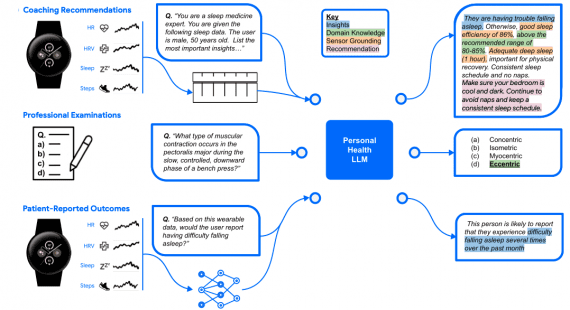

Google AI has introduced a neural network-based system that simulates camera movement and parallax in still photos. The system, called Cinematic photos, is used in the Google Photos app.

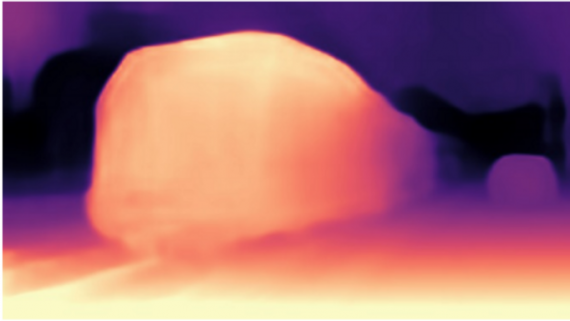

Image Depth Estimation

Like other recent photography features such as Portrait Mode and Augmented Reality (AR), Cinematic photos require a depth map to recover the 3D structure of a scene. On Pixel phones, a scene is captured with two cameras or with dual-pixel sensors, so depth can be computed from the difference between the two viewpoints and the known distance between them. To make Cinematic photos available for photos that were not taken on a Pixel, the developers trained a convolutional neural network with an encoder-decoder architecture that predicts a depth map from a single RGB image. Segmentation masks for people in the image are obtained with the DeepLab model, trained on the Open Images dataset.
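For the two-view case, the textbook stereo relation converts disparity (how far a point shifts between the two views, in pixels) into depth: Z = f · B / d, where f is the focal length in pixels and B is the baseline between the viewpoints. The sketch below only illustrates that relation; it is not Google's depth pipeline, and the focal length and baseline in the usage line are hypothetical values, not Pixel camera specifications.

```python
import numpy as np


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Convert a disparity map (pixels) to depth (meters) with Z = f * B / d.

    Pixels with zero or negative disparity (no match, or infinitely far away)
    are assigned infinite depth.
    """
    disparity_px = np.asarray(disparity_px, dtype=np.float64)
    depth = np.full_like(disparity_px, np.inf)
    valid = disparity_px > 0
    depth[valid] = focal_length_px * baseline_m / disparity_px[valid]
    return depth


# Hypothetical camera: 1000 px focal length, 12 mm baseline.
depth = depth_from_disparity([[12, 6], [3, 0]], focal_length_px=1000.0, baseline_m=0.012)
print(depth)
```

Halving the disparity doubles the depth, which is why small baselines such as those of dual-pixel sensors give usable depth mainly for nearby subjects.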

Camera Trajectory

To reconstruct the scene in 3D, the RGB image is projected onto the depth map to create a mesh. This approach can produce artifacts, typically where neighboring pixels have very different depths. To make the system more robust to such artifacts, the developers use padded segmentation masks from a human pose estimation model. The masks divide the image into three regions: head, body, and background.
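A minimal sketch of how an RGB image and a depth map can be turned into a mesh, assuming a simple pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`. Every pixel becomes a vertex, each 2×2 block of neighboring pixels becomes two triangles, and triangles spanning a large depth jump are flagged, since these are the ones that stretch when the virtual camera moves. The function name and the `max_depth_jump` threshold are illustrative assumptions; this is a generic RGB-D meshing technique, not the Cinematic photos implementation, which handles artifacts with the segmentation masks described above.

```python
import numpy as np


def rgbd_to_mesh(rgb, depth, fx, fy, cx, cy, max_depth_jump):
    """Build a triangle mesh from an RGB image and an aligned depth map.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal point
    (cx, cy) in pixels (hypothetical parameters). Returns vertices (N, 3),
    per-vertex colors (N, 3), faces (M, 3) as vertex indices, and a boolean
    mask of faces whose depth range exceeds max_depth_jump.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape
    # Pixel coordinates: v is the row index, u is the column index.
    v, u = np.mgrid[0:h, 0:w]
    # Back-project every pixel to camera space: X = (u - cx) * Z / fx, Y likewise.
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    vertices = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    # Each vertex takes the color of the pixel it came from.
    colors = np.asarray(rgb).reshape(-1, 3)

    # Split every 2x2 block of neighboring pixels into two triangles.
    idx = np.arange(h * w).reshape(h, w)
    tl, tr = idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel()
    bl, br = idx[1:, :-1].ravel(), idx[1:, 1:].ravel()
    faces = np.concatenate([
        np.stack([tl, bl, tr], axis=1),
        np.stack([tr, bl, br], axis=1),
    ])

    # A triangle spanning a large depth jump connects foreground to
    # background; these faces become stretched artifacts under camera motion.
    face_depths = vertices[faces, 2]
    stretched = (face_depths.max(axis=1) - face_depths.min(axis=1)) > max_depth_jump
    return vertices, colors, faces, stretched


# Toy 3x3 scene: flat background at depth 1 with one near-far outlier in the center.
depth = np.ones((3, 3))
depth[1, 1] = 5.0
verts, cols, faces, stretched = rgbd_to_mesh(
    np.zeros((3, 3, 3)), depth, fx=1.0, fy=1.0, cx=1.0, cy=1.0, max_depth_jump=1.0
)
print(faces.shape, stretched.sum())
```

Here the six triangles touching the center pixel are flagged; a renderer could drop them or fill the revealed area instead of drawing stretched geometry.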