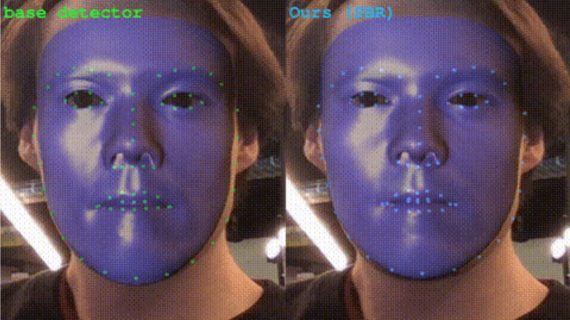

Supervision-by-Registration: An Unsupervised Approach to Improve the Precision of Facial Landmark Detectors

14 August 2018

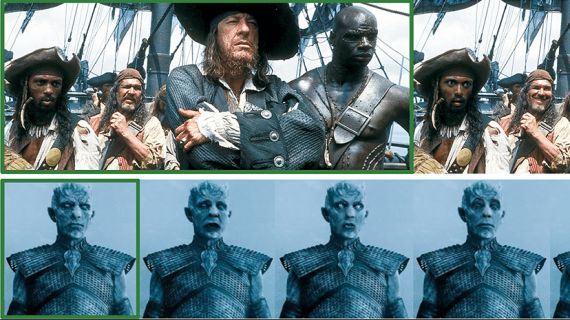

Precise facial landmark detection is the foundation for high-quality results in many computer vision and computer graphics tasks, such as face recognition, face animation, and face reenactment. Many face…