Spherical CNN Kernels for 3D Point Clouds

30 May 2018

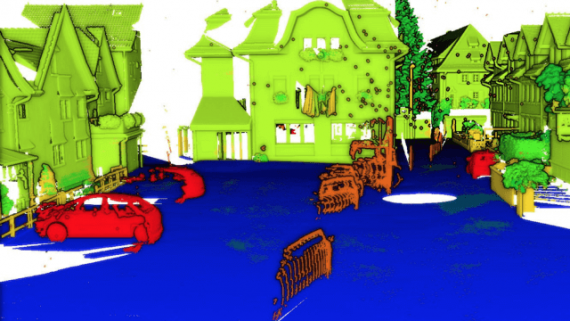

Spherical CNN Kernels for 3D Point Clouds

From a data structure point of view, point clouds are unordered sets of vectors. They are specific and differ very much from other data types such as images and videos.…