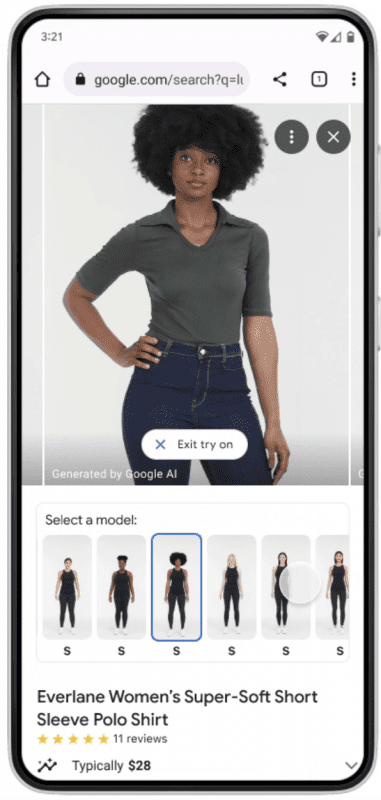

Google has introduced Try-on, a diffusion model that lets users of its Shopping service see how clothes look on models with different body types and skin tones. With a single click, the model dresses the chosen person in the garment, realistically reproducing how it drapes, fits, stretches, and folds.

Clothing is one of the most popular categories for online purchases, yet poor fit accounts for a large share of returns: 59% of buyers are dissatisfied with items they bought because the items don't look as they expected. Most virtual fitting tools on the market render clothing on avatars using geometric deformation, which often introduces errors such as unnatural wrinkles.

More About the Model

To address this problem, Google developed a diffusion model trained on data from its Shopping service, specifically images of people with different body types and skin tones. Training used millions of image pairs, each showing the same person wearing the same clothing in two different poses. The model takes two inputs: an image of the clothing item and an image of the person who will wear it.

The feature is already available in the United States. While browsing a product, a user can click the try-on button, pick a model whose body shape and size are similar to their own, and see whether the clothes would suit them. Try-on accurately reproduces how the clothes drape, fold, fit, and stretch on each specific model. The available models range in size from XXS to 4XL.

For now, the feature is available only for products from a few major brands. Google plans to extend it to all products in the near future and to add male models.