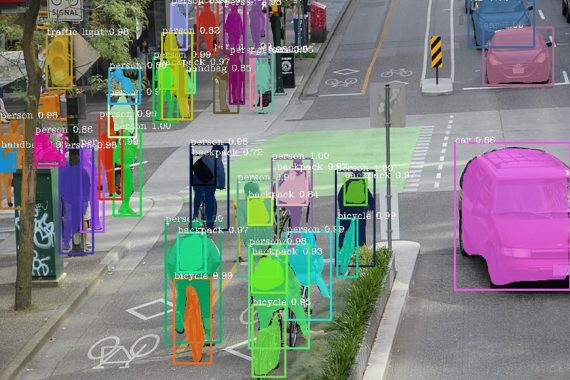

Google has introduced MobileDiffusion, a real-time text-to-image generation model that runs entirely on mobile devices. On Android and iOS devices with latest-generation processors, it generates a 512×512-pixel image in less than half a second.

Leading text-to-image models such as Stable Diffusion, DALL-E, and Imagen have billions of parameters and are therefore costly to run, requiring powerful desktop computers or servers. On-device solutions did appear in 2023 (MediaPipe on Android and Core ML on iOS), but real-time generation on mobile devices, with a latency of under one second, remained out of reach.

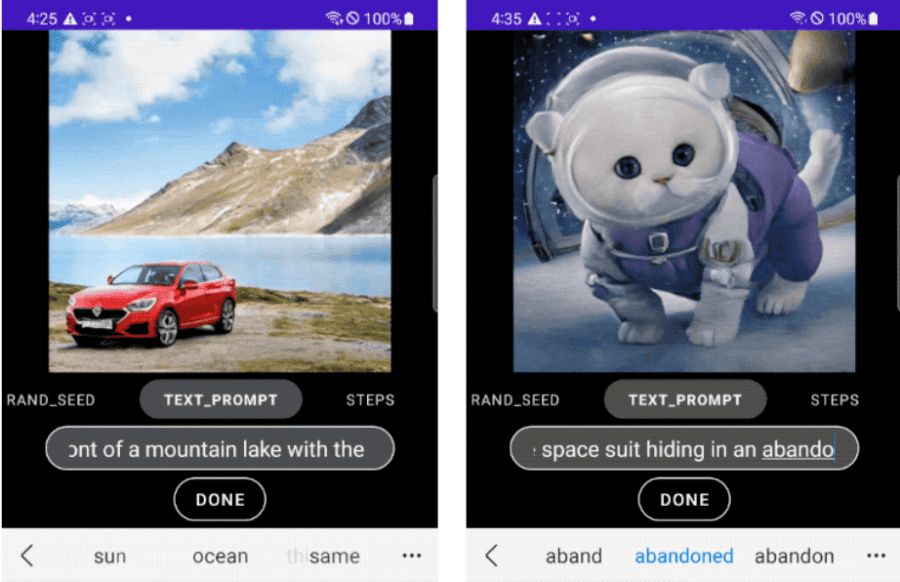

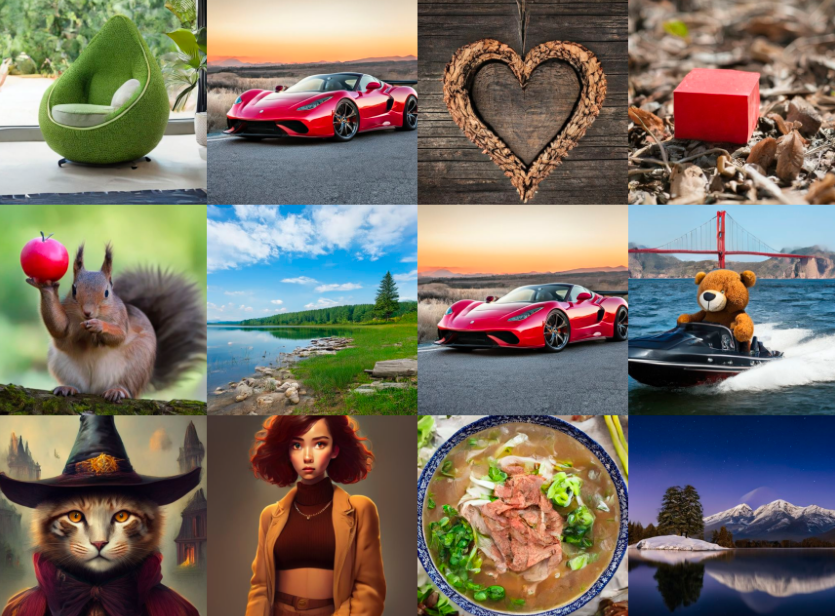

MobileDiffusion is an efficient latent diffusion model designed specifically for mobile devices. Examples of images generated by MobileDiffusion, each in less than half a second, are shown above.

The relative inefficiency of text-to-image models has two causes. First, diffusion models produce an image by removing noise iteratively, which requires many successive calls to the model. Second, text-to-image architectures are complex and have billions of parameters, which makes each of those calls computationally expensive. MobileDiffusion addresses both factors: it generates an image in a single sampling step using DiffusionGAN, and its relatively small size of 520 million parameters makes it practical to run on mobile devices.
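The two cost factors can be illustrated with a toy sketch. It is not MobileDiffusion's code: `CountingDenoiser`, `iterative_sample`, `one_step_sample`, and `weight_memory_gb` are hypothetical names, the denoiser does no real denoising, the 50-step count is an illustrative assumption, and the 16-bit weight precision is assumed rather than stated in the source. The sketch only counts network evaluations and estimates the storage needed for 520 million weights.

```python
from dataclasses import dataclass


@dataclass
class CountingDenoiser:
    """Hypothetical stand-in for a diffusion model's denoising network.

    It does no real denoising; it scales its input and records how many
    times it was evaluated, since network calls dominate sampling cost.
    """
    calls: int = 0

    def __call__(self, x: float, step: int) -> float:
        self.calls += 1
        return 0.9 * x  # toy "remove some noise" update


def iterative_sample(denoiser: CountingDenoiser, x_noisy: float, num_steps: int) -> float:
    """Conventional diffusion sampling: one network call per denoising step."""
    x = x_noisy
    for step in reversed(range(num_steps)):
        x = denoiser(x, step)
    return x


def one_step_sample(denoiser: CountingDenoiser, x_noisy: float) -> float:
    """One-step sampling, the regime the article attributes to DiffusionGAN: a single call."""
    return denoiser(x_noisy, 0)


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Storage for the weights alone (no activations), in gigabytes."""
    return num_params * bytes_per_param / 1e9


multi = CountingDenoiser()
iterative_sample(multi, x_noisy=1.0, num_steps=50)  # 50 steps is an illustrative assumption
single = CountingDenoiser()
one_step_sample(single, x_noisy=1.0)
print(multi.calls, single.calls)             # 50 1
print(round(weight_memory_gb(520e6, 2), 2))  # 1.04 (assuming 16-bit weights)
```

Under these assumptions, the one-step sampler needs 1/50 of the network calls, and 520 million 16-bit weights occupy roughly 1 GB, versus several gigabytes for a model with billions of parameters at the same precision.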

Researchers achieved the highest image generation speed on the iPhone 15 Pro. A detailed description of the model architecture and examples of its real-time operation are available at this link.