Deep learning has been applied to a huge number of computer vision tasks and has proven successful in many of them. Nevertheless, there are still tasks where deep neural networks struggle and traditional computer vision approaches work better. Historically, some tasks have been more appealing and are therefore well studied, whereas others have attracted much less attention. Image outpainting (or image extrapolation) is one of the latter. While image inpainting, i.e. filling holes or missing details in images, has been widely studied, image outpainting has been addressed in only a few studies and is not a popular topic among researchers.

However, researchers from Stanford have presented a deep learning approach to the problem of image extrapolation (i.e. image outpainting). Their approach is interesting in that it addresses the problem using adversarial learning.

Generative adversarial learning – DCGAN

Generative adversarial learning has received a lot of attention in the past few years and it has been applied to a variety of generative tasks. In this work, the researchers use Generative Adversarial Networks to outpaint an image by extrapolating and filling equal-sized parts on the sides of the input image.

As in many generative tasks in computer vision, the goal is to produce a realistic (and visually pleasing) image. Outpainting can be seen as hallucinating past the image boundaries, and intuitively it is not a trivial task, since (almost) anything might appear outside the boundaries of an image in reality. A significant amount of additional content is therefore needed, and it has to match the original image, especially near its boundaries. Generating realistic content near the boundaries is challenging because it has to be consistent with the original image. Generating realistic content further from the boundaries is almost as challenging, but for the opposite reason: there is little neighboring information to rely on.

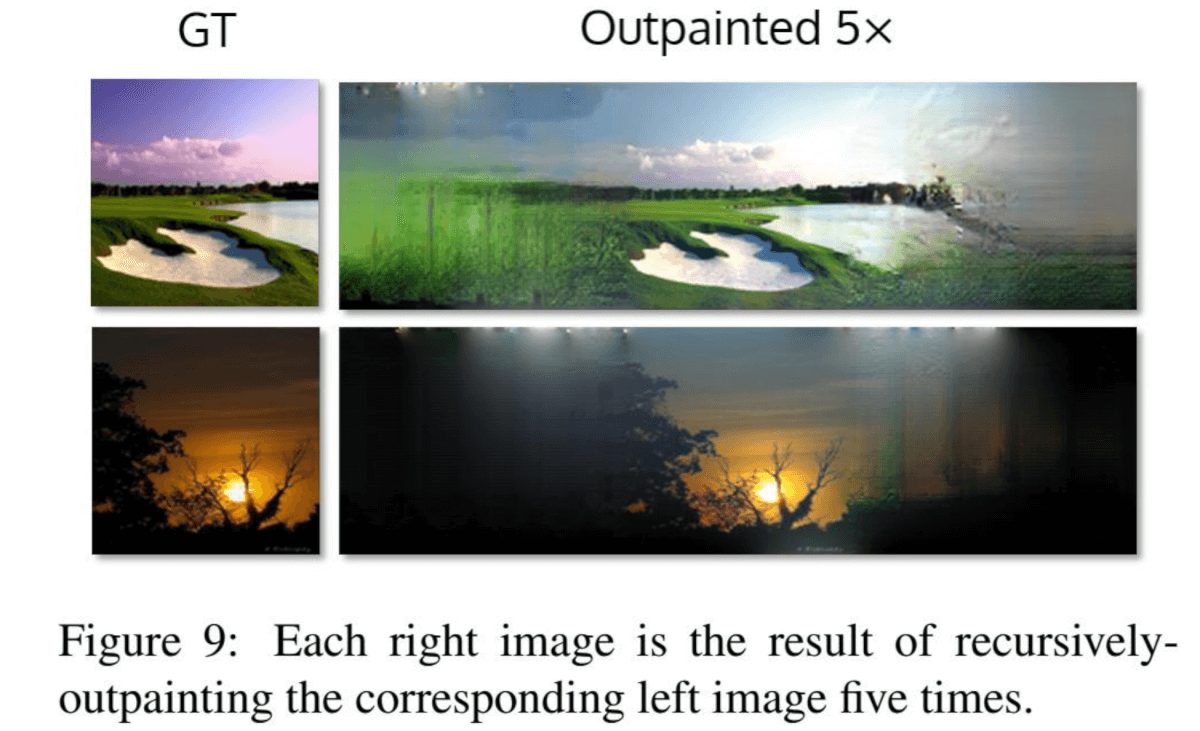

In this work, a DCGAN architecture is employed to tackle the problem of image extrapolation. The authors show that their method is able to generate realistic samples of 128×128 color images and, moreover, that it allows recursive outpainting (to some extent) to obtain larger images.

Data

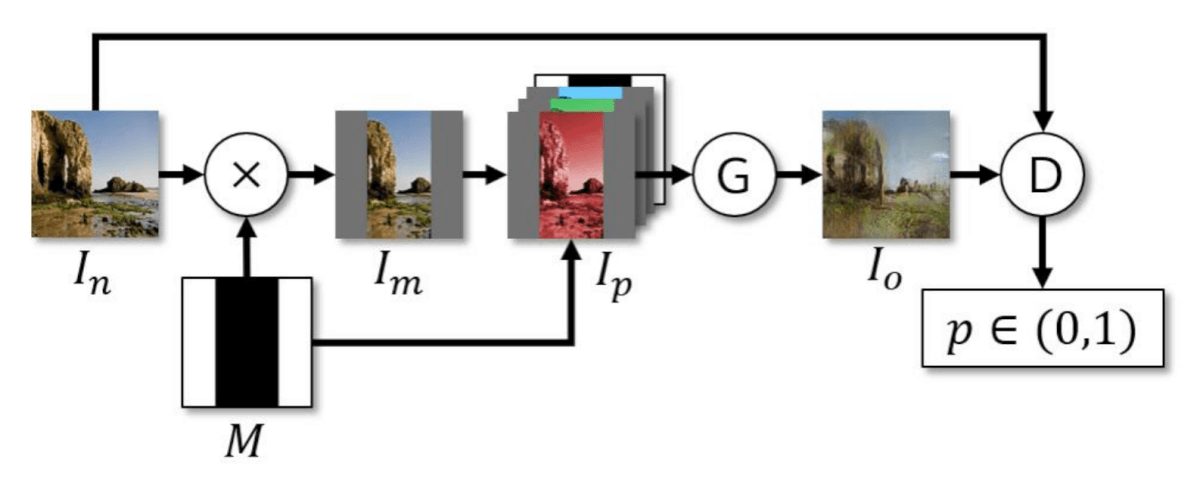

The Places365 dataset is used to both train and evaluate the proposed method. The authors define a specific preprocessing procedure that consists of three steps: normalizing the images, defining a binary mask that separates the central part of the image from the side bands to be outpainted (the split is horizontal only), and computing the mean pixel intensity over the unmasked regions. After preprocessing, each input image is represented as a pair of images: the original image and the preprocessed image. The preprocessed image is obtained by masking the original image and filling the masked region with the mean pixel intensity (per channel).
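The three preprocessing steps can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the authors' code: the function name `preprocess`, the column bounds `keep_start` and `keep_end`, and the choice to append the mask as an extra channel are all assumptions made for the example. It also assumes the visible region is the central band of columns and the two side bands are hidden, which matches the outpainting setup of filling equal-sized parts on the sides.

```python
import numpy as np


def preprocess(image_uint8, keep_start=32, keep_end=96):
    """Return (normalized image, preprocessed image) for one RGB image.

    keep_start / keep_end are hypothetical column bounds of the visible band.
    """
    # Step 1: normalize 8-bit pixel values to the range [0, 1].
    normalized = image_uint8.astype(np.float32) / 255.0
    height, width, _ = normalized.shape

    # Step 2: binary mask, 1 where content is hidden and 0 where it is kept.
    # Only columns are masked, so the split is purely horizontal.
    mask = np.ones((height, width, 1), dtype=np.float32)
    mask[:, keep_start:keep_end, :] = 0.0

    # Step 3: per-channel mean intensity over the kept (unmasked) pixels.
    kept = normalized[:, keep_start:keep_end, :]
    mean = kept.mean(axis=(0, 1))  # shape (3,)

    # Fill the hidden region with the mean colour, then append the mask as
    # an extra channel (an assumption of this sketch).
    masked = normalized * (1.0 - mask) + mean * mask
    preprocessed = np.concatenate([masked, mask], axis=-1)
    return normalized, preprocessed
```

For a 128×128 RGB input, this returns a 128×128×3 target image and a 128×128×4 network input whose side bands hold a flat mean colour.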

Method

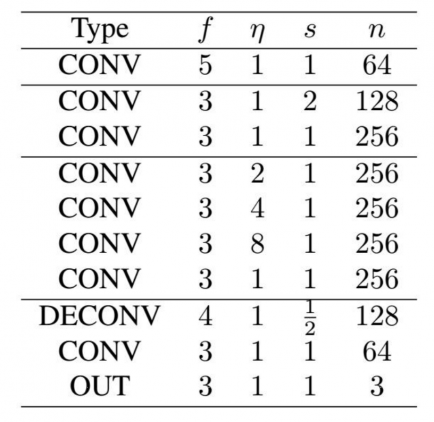

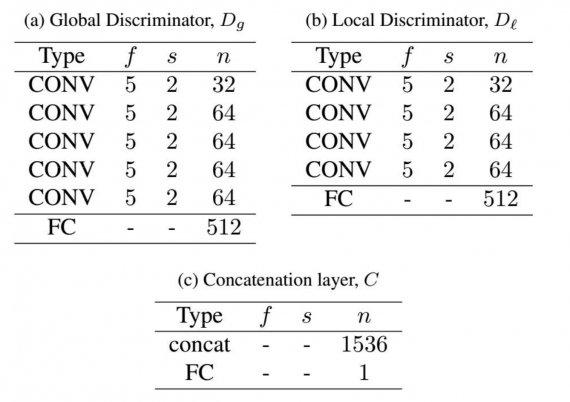

As mentioned before, the generative model is a GAN, trained with a three-phase procedure that stabilizes the training process. The generator is a non-symmetric convolutional encoder-decoder network, and the discriminator combines global and local discriminators. The generator has 9 layers (8 convolutional and 1 deconvolutional), while the discriminator has 5 convolutional layers and 1 fully-connected layer, plus a concatenation layer that combines the outputs of the local discriminators to produce a single output.
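A three-phase schedule of this kind is often organized as follows in GAN-based image completion: first the generator alone is trained with a reconstruction loss, then the discriminator alone, and finally both are trained adversarially. The sketch below assumes that ordering. The function name `training_phase`, the phase labels, and the step counts are hypothetical and only show how such a schedule could be selected per training step.

```python
def training_phase(step, generator_only_steps, discriminator_only_steps):
    """Return which phase a given training step falls into.

    The ordering of the phases is an assumption of this sketch.
    """
    if step < generator_only_steps:
        # Phase 1: warm up the generator with a reconstruction loss only.
        return "generator"
    if step < generator_only_steps + discriminator_only_steps:
        # Phase 2: train the discriminator against the frozen generator.
        return "discriminator"
    # Phase 3: train both networks adversarially.
    return "adversarial"


# Hypothetical step counts, chosen only for illustration.
phases = [training_phase(s, 3, 2) for s in range(7)]
```

Warming up each network separately before the joint phase is a common way to keep either side from overpowering the other early in training.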

All layers are followed by a ReLU activation except the output layers of both networks, and dilated convolutions are used to further improve the outpainting. The authors argue that dilated convolutions have a large effect on the quality of the generated image and on the network's ability to outpaint at all. The improvement comes from the larger local receptive field, which lets the network outpaint the whole image. Dilated convolutions are simply an efficient way to enlarge the receptive field of convolutional layers without increasing the computational cost.
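The effect of dilation on the receptive field is easy to check with a little arithmetic. For a stack of stride-1 convolutions, each layer with kernel size k and dilation d widens the receptive field by (k - 1) · d pixels. The sketch below compares two hypothetical four-layer stacks of 3×3 convolutions. The layer configurations are illustrative only and are not taken from the paper.

```python
def receptive_field(layers):
    """Receptive field width of a stack of stride-1 convolutions.

    layers: list of (kernel_size, dilation) tuples, one per layer.
    """
    field = 1
    for kernel_size, dilation in layers:
        # Each layer extends the field by (kernel_size - 1) * dilation.
        field += (kernel_size - 1) * dilation
    return field


# Four ordinary 3x3 convolutions (dilation 1 everywhere).
plain = receptive_field([(3, 1), (3, 1), (3, 1), (3, 1)])    # 9

# The same four 3x3 kernels with dilations 1, 2, 4, 8.
dilated = receptive_field([(3, 1), (3, 2), (3, 4), (3, 8)])  # 31
```

Both stacks use the same number of weights per filter, yet the dilated stack sees a 31-pixel-wide window instead of a 9-pixel one.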

Evaluation and conclusions

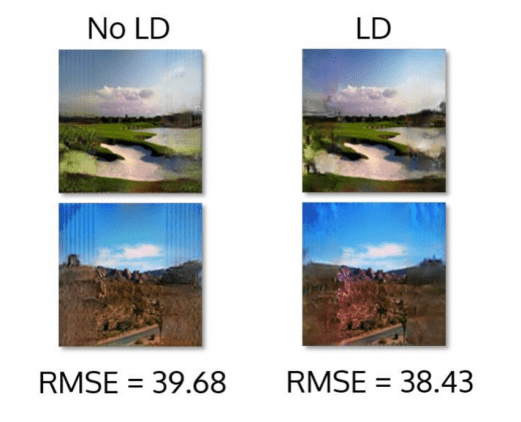

This approach shows promising results: it is able to generate relatively realistic image outpaintings. Because of the nature of the problem, the authors evaluate the method mostly qualitatively, and they use RMSE as a reference quantitative metric. More precisely, they use a modified RMSE that accounts for simple image postprocessing by renormalizing the images. In the final part of the paper, they describe their recursive outpainting experiments and show that the recursively-outpainted images remain relatively realistic, even though noise compounds with successive iterations. A recursively-outpainted image with 5 iterations is given as an example in the image below.
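As a rough illustration of the quantitative metric mentioned above, the sketch below computes a plain RMSE and an RMSE after renormalization. The exact renormalization is not spelled out here, so the `renormalize` helper is purely a placeholder: it assumes, hypothetically, that the output is rescaled to match the mean and standard deviation of the reference image.

```python
import numpy as np


def rmse(reference, candidate):
    """Root-mean-square error between two images of equal shape."""
    diff = reference.astype(np.float64) - candidate.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))


def renormalize(image, target):
    """Hypothetical postprocessing: match the mean and std of `target`."""
    image = image.astype(np.float64)
    std = image.std()
    if std == 0.0:
        # A constant image carries no contrast to rescale.
        return np.full_like(image, target.mean())
    return (image - image.mean()) / std * target.std() + target.mean()


def renormalized_rmse(reference, candidate):
    """RMSE measured after renormalizing the candidate image."""
    return rmse(reference, renormalize(candidate, reference))
```

With this placeholder, an output that differs from the reference only by a global brightness or contrast shift scores close to zero, so the metric focuses on structural differences rather than overall intensity.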