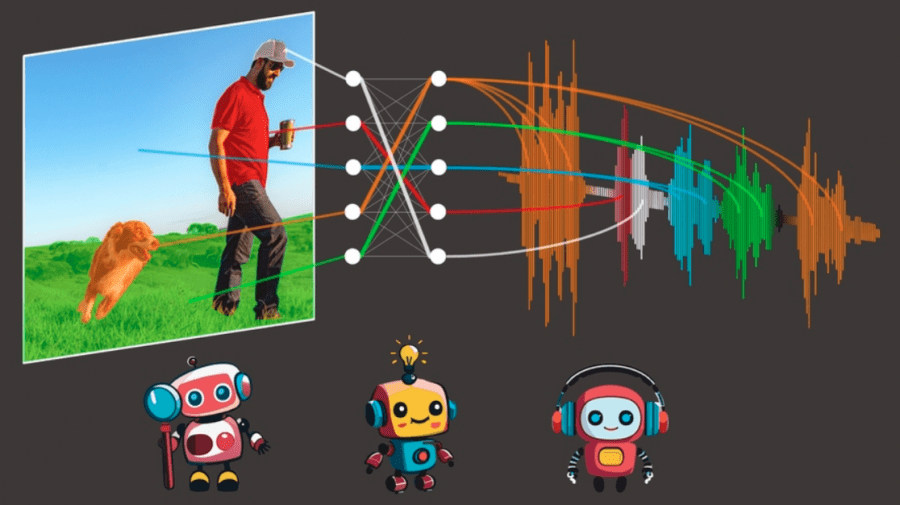

The algorithm DenseAV, developed at MIT, learns to understand the meaning of words and sentences by watching videos of people conversing. DenseAV outperformed other algorithms in tasks involving identifying objects by their names.

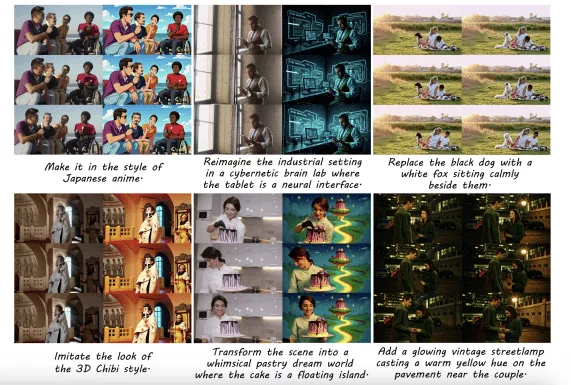

Researchers solved the problem of language learning without text input. In other words, their approach mirrors how children learn to understand language by observing the world around them. DenseAV predicts what a person sees based on what they hear and vice versa. For example, if someone hears the phrase “bake the cake at 350 degrees,” they are likely seeing a cake or an oven.

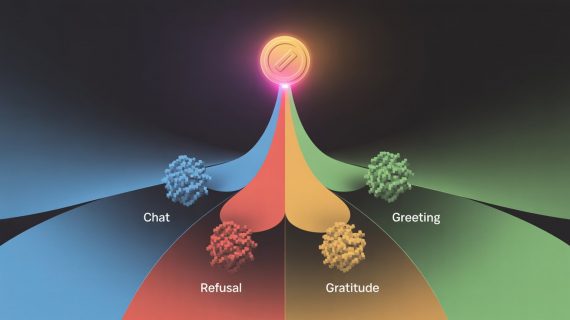

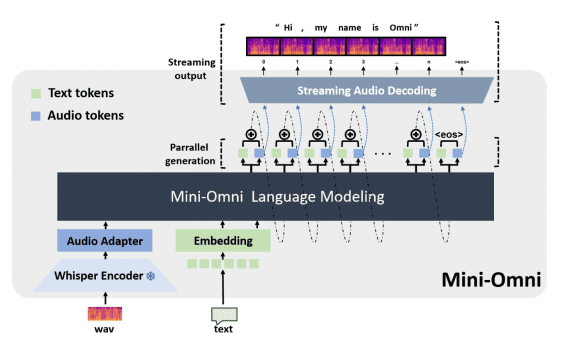

DenseAV uses two components to separately process audio and visual data. This design enables the algorithm to recognize objects and generate detailed and meaningful features for both audio and visual signals. DenseAV learns by comparing and merging all possible matches between an audio clip and image pixels.

The algorithm trained on the AudioSet dataset, which includes 2 million YouTube videos. Researchers also created new datasets to test how well the model can link sounds and images. In these tests, DenseAV outperformed existing state-of-the-art models.

A key application of this algorithm is training models based on the vast amount of video content available online. Another potential application is studying animal languages, such as those of dolphins or whales, which do not have written forms of communication. Additionally, the method could identify patterns between other signal pairs, such as seismic signals and corresponding geological processes.