Hailuo AI Expands Video Creation Capabilities with Image-to-Video Feature

9 October 2024

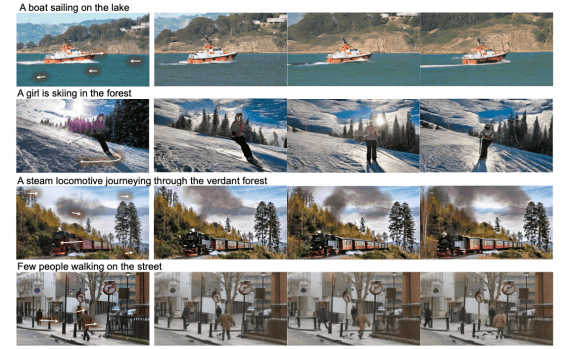

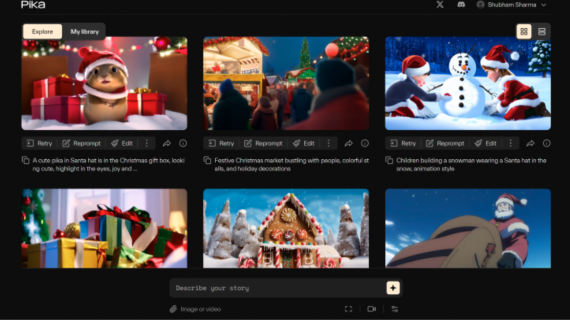

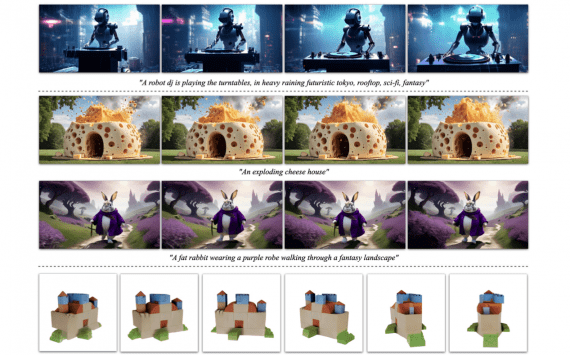

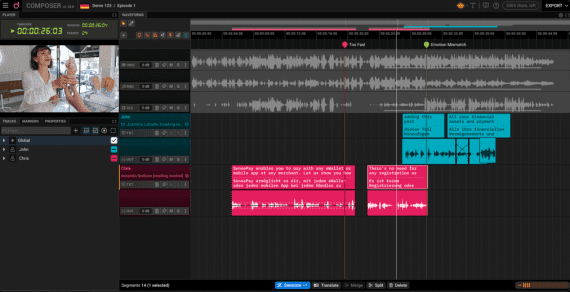

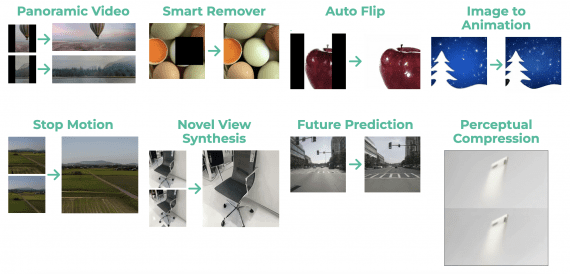

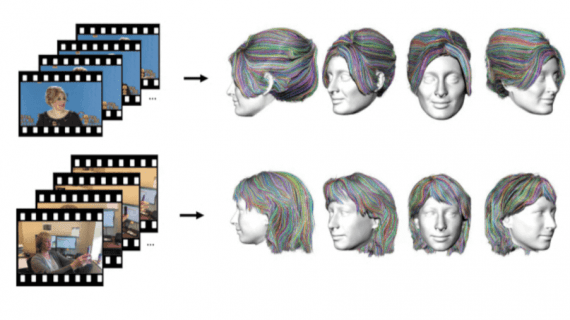

MiniMax’s Hailuo AI has launched a new Image-to-Video feature that lets creators turn static images into video content. The update extends the platform, which initially supported only text-to-video generation…