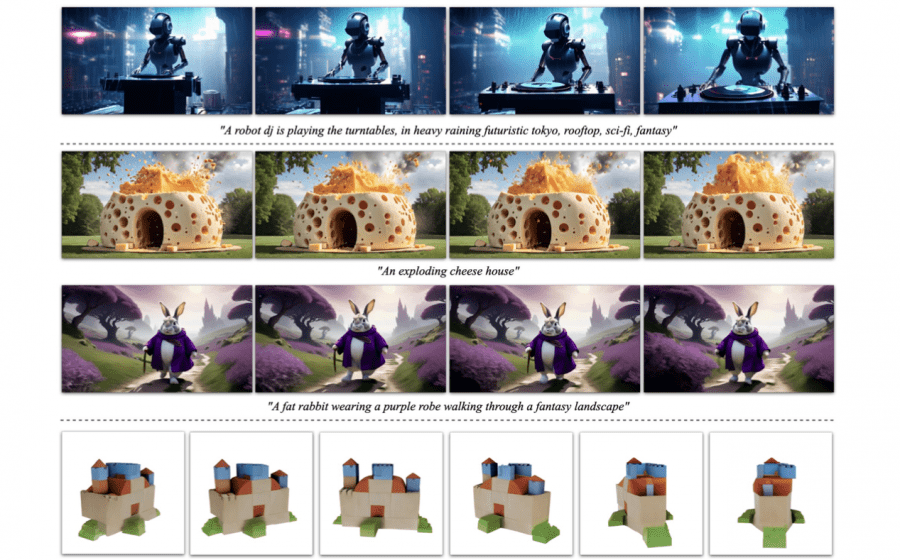

Stability AI has announced the release of Stable Video Diffusion, a pair of models that generate videos of up to 4 seconds from a single input image. Both models are publicly available.

For now, the SVD and SVD-XT models may be used for research purposes only. After collecting user feedback, Stability AI plans to refine them for commercial use.

SVD and SVD-XT are latent diffusion models that take a still image as the first frame and generate a video at 576×1024 resolution. Both support customizable frame rates from 3 to 30 frames per second. The SVD model was trained to generate 14 frames from an image, while SVD-XT generates 25 frames.
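Because each model produces a fixed number of frames, the length of a clip depends only on the frame rate: duration = frames ÷ fps. The short Python sketch below works through that arithmetic using the frame counts and the 3 to 30 fps range stated above. `clip_seconds`, `FRAME_COUNTS`, and the fps bounds are hypothetical illustrative names, not part of Stability AI's code.

```python
# Frame counts and frame-rate range as stated in the article.
# clip_seconds is a hypothetical helper, not part of the SVD code base.
FRAME_COUNTS = {"SVD": 14, "SVD-XT": 25}
MIN_FPS, MAX_FPS = 3, 30


def clip_seconds(frames: int, fps: float) -> float:
    """Return the playback length, in seconds, of `frames` frames shown at `fps`."""
    if not MIN_FPS <= fps <= MAX_FPS:
        raise ValueError(f"fps must be between {MIN_FPS} and {MAX_FPS}, got {fps}")
    return frames / fps


if __name__ == "__main__":
    # Print the clip length for each model at two sample frame rates.
    for model, frames in FRAME_COUNTS.items():
        for fps in (6, 30):
            print(f"{model}: {frames} frames at {fps} fps -> {clip_seconds(frames, fps):.2f} s")
```

For instance, SVD-XT's 25 frames played at 6 fps run for about 4.2 seconds, while at 30 fps the same frames last under one second.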

The models were trained on a dataset of 600 million publicly available videos and then fine-tuned on a smaller, higher-quality dataset of 1 million videos to improve the accuracy of frame-sequence prediction.

According to an external survey, SVD's output outperforms that of leading closed video-generation models from Runway and Pika Labs. Stability AI sees advertising, education, and entertainment as the primary applications for Stable Video Diffusion, and the company plans to add support for text prompts in the future.

The code for these models is available on GitHub, and their weights can be found on Hugging Face.