Google DeepMind has introduced Veo, a generative model that can create Full HD (1080p) videos longer than 60 seconds. In addition to text prompts, the model accepts images and videos as input.

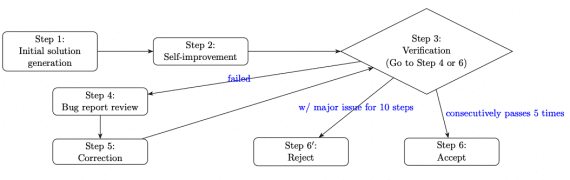

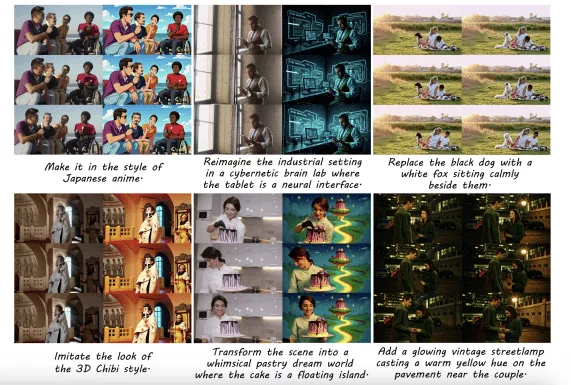

A key feature of Google’s Veo is its ability to generate videos in a wide range of cinematic styles while closely following the prompt. For instance, the model understands terms such as “photorealism,” “surrealism,” “aerial photography,” and “timelapse.” It operates in three modes: text2video, image2video, and video2video. In video2video mode, Veo can use text prompts to edit previously generated videos, again in any style.

Like OpenAI’s Sora, Veo tackles one of the major problems of text2video models: keeping objects consistent when they are not present in every frame. Every Veo video carries an embedded SynthID watermark, which makes it possible to verify that the video was generated by AI and helps protect the people depicted in it from deepfakes. The model builds on a series of earlier Google and DeepMind video-generation projects, including Generative Query Network (GQN), DVD-GAN, Imagen-Video, Phenaki, WALT, VideoPoet, and Lumiere.

As with OpenAI’s Sora, Google has not opened public access to the model. To try it, you can join the waitlist on VideoFX. Google plans to bring some Veo features to YouTube Shorts and other company products in the future.